Stochastic Programming for Resilient Biofuel Supply Chains: A Complete Guide for Researchers


Abstract

This article provides a comprehensive introduction to stochastic programming as a critical tool for optimizing biofuel supply chains under uncertainty. It explores the foundational challenges of variability in biomass feedstock, production yields, and market demand. We detail key methodological approaches, including two-stage and chance-constrained programming, with application frameworks for strategic and tactical planning. The guide addresses common computational challenges and optimization techniques like decomposition and sampling. Finally, we cover validation methods and comparative analyses against deterministic models, highlighting the value of stochastic solutions for enhancing the robustness, economic viability, and sustainability of biofuel systems, with direct parallels for complex pharmaceutical supply networks.

Why Uncertainty Rules: Foundational Challenges in Biofuel Supply Chain Design

This whitepaper, framed within the context of a broader thesis on "Introduction to stochastic programming for biofuel supply chains research," examines the primary sources of uncertainty that challenge the robustness and economic viability of biofuel systems. For researchers, scientists, and related professionals, understanding these uncertainties is the critical first step in applying advanced stochastic optimization models to design resilient supply chains. These models explicitly account for randomness and unpredictability, moving beyond deterministic planning.

Uncertainty permeates every stage of the biofuel supply chain, from feedstock cultivation to final fuel distribution. The major sources are categorized and quantified in Table 1.

Table 1: Key Sources of Uncertainty in Biofuel Systems

| Category | Specific Source | Quantitative Impact / Range (Current Data) | Primary Affected Stage |

|---|---|---|---|

| Feedstock Supply | Agricultural Yield | Varies by crop & region; e.g., Switchgrass: 5-20 dry tons/acre/yr; Corn Stover: 1-5 dry tons/acre/yr. | Feedstock Production & Procurement |

| Feedstock Supply | Feedstock Composition | Lignin variance in poplar: 18-28%; Sugar variance in sugarcane: 12-20% Brix. | Pre-processing & Conversion |

| Feedstock Supply | Feedstock Price | Historic volatility: Corn price fluctuation up to ±50% within a year. | Procurement & Logistics |

| Conversion Processes | Technology Performance | Biochemical conversion sugar yield: 70-95% of theoretical max. Thermochemical conversion bio-oil yield: 35-75% wt. | Biofuel Production |

| Conversion Processes | Catalyst Life & Efficiency | Solid acid catalyst deactivation rates can reduce yield by 10-40% over 1000 hrs. | Biofuel Production |

| Logistics & Infrastructure | Transportation Cost & Availability | Diesel price volatility (e.g., $2.50 - $5.00/gallon regional variance). | Entire Supply Chain |

| Logistics & Infrastructure | Storage Degradation | Dry matter loss in baled biomass: 1-10% over 6 months. | Storage & Inventory |

| Market & Policy | Biofuel Market Price | Ethanol price correlation with crude oil: R² ~0.6-0.8, but with significant deviation. | Distribution & Sales |

| Market & Policy | Government Policy & Subsidies | Tax credit values (e.g., $1.01/gal for cellulosic biofuel) subject to legislative renewal. | Strategic Planning |

| Environmental Factors | Water Availability | Irrigation requirements: 500-2500 liters water per liter of biofuel, highly region-dependent. | Feedstock Production |

| Environmental Factors | Climate Variability | Projected changes in growing season precipitation: ±20% for key agricultural regions by 2050. | Feedstock Production |

Experimental Protocols for Quantifying Uncertainty

To parameterize stochastic models, key uncertainties must be empirically quantified. Below are detailed protocols for critical experiments.

Protocol: Quantifying Feedstock Compositional Variability

Objective: To determine the spatial and temporal variance in key compositional traits (e.g., cellulose, hemicellulose, lignin) of a lignocellulosic feedstock.

Materials: See "The Scientist's Toolkit: Key Research Reagent Solutions" (Table 2).

Methodology:

- Sampling Design: Establish a stratified random sampling plan across multiple cultivation sites, soil types, and harvest times (e.g., early vs. late season). Collect a minimum of n=30 representative biomass samples per stratum.

- Sample Preparation: Mill samples to pass a 2-mm sieve. Dry at 60°C to constant weight. Store in a desiccator.

- Compositional Analysis (via NREL LAP):

  a. Extractives Removal: Perform Soxhlet extraction with ethanol for 24 hours.

  b. Structural Carbohydrates & Lignin: Follow the NREL Laboratory Analytical Procedure (LAP) "Determination of Structural Carbohydrates and Lignin in Biomass."

    i. Perform a two-stage acid hydrolysis (72% H₂SO₄, followed by dilution to 4% H₂SO₄) on extractives-free biomass.

    ii. Quantify sugar monomers (glucose, xylose, arabinose, etc.) in the hydrolysate using High-Performance Liquid Chromatography (HPLC) with a refractive index detector.

    iii. Measure acid-insoluble lignin gravimetrically.

- Data Analysis: Calculate mean, standard deviation, and probability distribution (e.g., normal, beta) for each compositional factor (cellulose, lignin content). Perform ANOVA to attribute variance to spatial vs. temporal factors.
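The data-analysis step above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the lignin values, the site names, and the helper `one_way_anova` are hypothetical and stand in for measured data. The sketch attributes variance to a single factor (site), and a full spatial-versus-temporal analysis would need a two-factor design.

```python
from statistics import mean, stdev

def one_way_anova(groups):
    """Return (F statistic, between-group share of the total sum of squares)."""
    all_values = [v for g in groups.values() for v in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    ss_within = sum((v - mean(g)) ** 2 for g in groups.values() for v in g)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    return f_stat, ss_between / (ss_between + ss_within)

# Hypothetical lignin contents (% dry weight), grouped by cultivation site
lignin_by_site = {
    "site_A": [21.0, 22.5, 20.8, 21.9],
    "site_B": [24.1, 25.0, 23.6, 24.7],
    "site_C": [19.5, 20.2, 19.9, 20.6],
}

for site, values in lignin_by_site.items():
    print(f"{site}: mean = {mean(values):.2f}, sd = {stdev(values):.2f}")

f_stat, share_between = one_way_anova(lignin_by_site)
print(f"F = {f_stat:.1f}, between-site share of variance = {share_between:.2f}")
```

A large between-site share suggests that site should be treated as a scenario-defining factor in the stochastic model rather than pooled into one distribution.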

Protocol: Assessing Biochemical Conversion Yield Uncertainty

Objective: To model the uncertainty in sugar yield from enzymatic saccharification under variable process conditions.

Materials: See "The Scientist's Toolkit: Key Research Reagent Solutions" (Table 2).

Methodology:

- Experimental Design: Design a full-factorial experiment with key variables: enzyme loading (e.g., 5-30 mg protein/g glucan), solids loading (5-20% w/w), temperature (45-55°C), and pH (4.5-5.5).

- Pretreatment: Apply a standardized dilute acid pretreatment (e.g., 1% H₂SO₄, 160°C, 10 min) to a uniform biomass lot.

- Enzymatic Hydrolysis: For each condition combination (n=3 replicates), conduct hydrolysis in a controlled incubator-shaker for 72 hours.

- Sampling & Quantification: Take samples at 0, 3, 6, 12, 24, 48, 72 hours. Quench reactions, centrifuge, and filter. Analyze supernatant for glucose and xylose concentration via HPLC.

- Kinetic Modeling & Uncertainty Quantification: Fit yield data to a Michaelis-Menten-derived kinetic model. Use Monte Carlo simulation, varying input parameters (enzyme activity, inhibitor concentrations) within their measured ranges, to generate a probability distribution of final sugar yield.
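The Monte Carlo step can be illustrated as follows. This is a sketch under stated assumptions, not the protocol's fitted model: `sugar_yield` is a hypothetical saturation (Michaelis-Menten-type) expression with a competitive-style inhibition term, and every parameter value and sampling range is invented for illustration. In practice both would come from the fitted kinetic data.

```python
import random
from statistics import mean, quantiles

def sugar_yield(enzyme_loading, activity, inhibitor,
                y_max=0.95, k_half=8.0, k_inhib=5.0):
    """Fractional sugar yield from a saturation curve in effective enzyme dose.

    The inhibitor raises the apparent half-saturation constant.
    All default parameter values are illustrative assumptions.
    """
    effective = enzyme_loading * activity
    return y_max * effective / (k_half * (1.0 + inhibitor / k_inhib) + effective)

def simulate(n_draws=10_000, enzyme_loading=20.0, seed=42):
    """Propagate input uncertainty to yield by repeated random sampling."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        activity = rng.uniform(0.8, 1.2)   # relative enzyme activity (assumed range)
        inhibitor = rng.uniform(0.0, 3.0)  # inhibitor concentration, g/L (assumed range)
        draws.append(sugar_yield(enzyme_loading, activity, inhibitor))
    return draws

yields = simulate()
cuts = quantiles(yields, n=20)  # cut points at 5%, 10%, ..., 95%
print(f"mean = {mean(yields):.3f}, P5 = {cuts[0]:.3f}, P95 = {cuts[-1]:.3f}")
```

The resulting empirical distribution (or a parametric fit to it) is what enters the supply chain model as the conversion-yield uncertainty.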

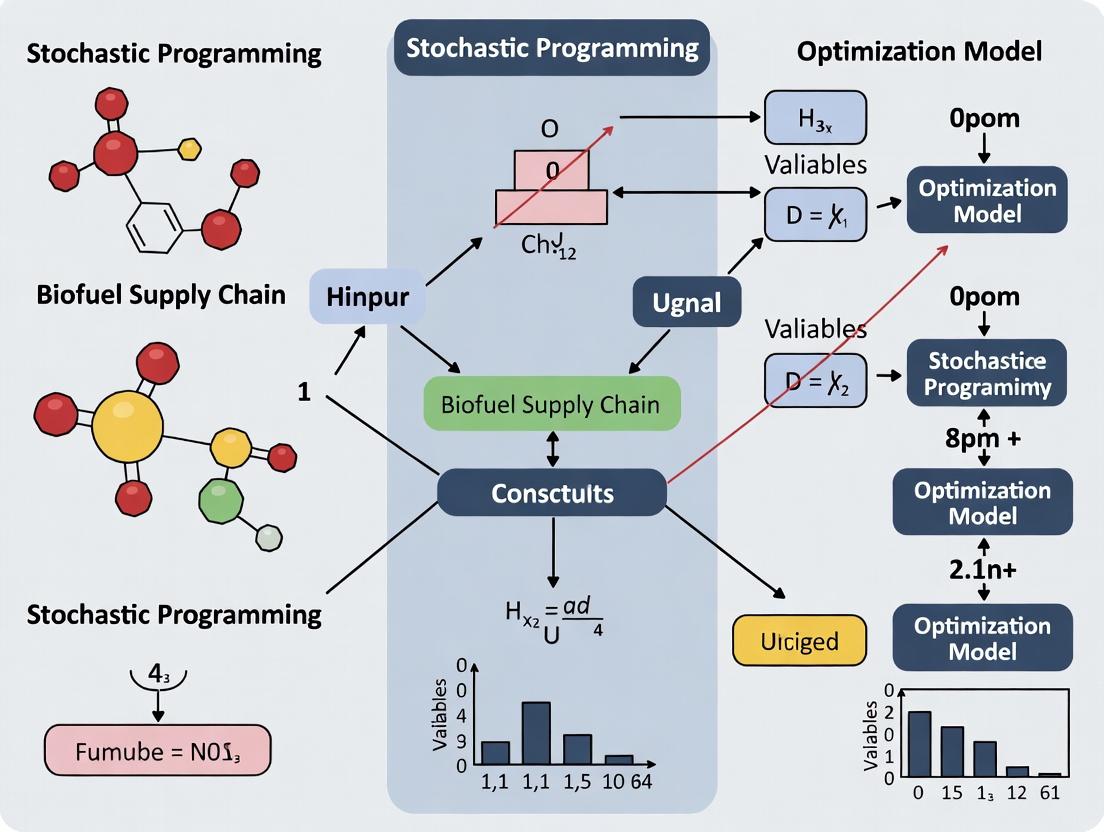

Visualizing System Relationships and Uncertainty Propagation

[Figure: Biofuel Supply Chain with Uncertainty Inputs]

[Figure: Stochastic Programming Decision Framework]

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Biofuel Uncertainty Quantification Experiments

| Reagent / Material | Supplier Examples | Function in Protocol |

|---|---|---|

| NREL Standard Biomass Analytical Materials | NREL, Sigma-Aldrich | Provides benchmark substrates with certified compositional data for analytical method validation and cross-lab comparison. |

| Cellulase Enzyme Complex (e.g., CTec2, HTec2) | Novozymes, Sigma-Aldrich | Catalyzes the hydrolysis of cellulose/hemicellulose to fermentable sugars. Enzyme activity variance is a key uncertainty source. |

| Sugar Standard Mix (Glucose, Xylose, Arabinose, etc.) | Restek, Agilent Technologies | Used to calibrate HPLC or other chromatographic systems for accurate quantification of sugars in hydrolysates. |

| Sulfuric Acid (ACS Grade, 95-98%) | Fisher Scientific, VWR | Used in standardized biomass pretreatment (dilute acid) and two-stage hydrolysis for compositional analysis. |

| Microcrystalline Cellulose (Avicel PH-101) | FMC Biopolymer, Sigma-Aldrich | A pure cellulose control substrate used in enzymatic hydrolysis assays to benchmark enzyme performance under variable conditions. |

| ANKOM Fiber Analyzer (F200/220) | ANKOM Technology | Semi-automated system for determining crude fiber fractions (NDF, ADF, ADL) to rapidly assess feedstock composition variability. |

| Stable Isotope-Labeled Lignin Monomers | Cambridge Isotope Labs, Sigma-Aldrich | Internal standards for advanced analytical techniques (e.g., Py-GC/MS) to precisely quantify lignin degradation products. |

Deterministic optimization models have long been the cornerstone of biofuel supply chain design, assuming fixed parameters for feedstock yield, conversion rates, demand, and market prices. Within the broader thesis of introducing stochastic programming to this field, this whitepaper delineates the financial and operational risks inherent in this simplification. Real-world biofuel systems are governed by profound uncertainties: climatic volatility affecting biomass supply, geopolitical shifts influencing fuel demand, and technological breakthroughs altering conversion efficiencies. A deterministic model replaces each of these distributions of possible outcomes with a single point estimate, which leads to supply chains that are structurally fragile and economically suboptimal. This guide provides a technical foundation for researchers and development professionals to quantify these limitations and transition to stochastic frameworks.

Quantitative Analysis of Deterministic Shortfalls

Recent analyses demonstrate the significant cost of ignoring uncertainty. The following table summarizes key findings from contemporary case studies on biofuel supply chain optimization under uncertainty.

Table 1: Cost of Ignoring Uncertainty in Biofuel Supply Chain Design

| Uncertain Parameter | Deterministic Model Cost Error | Stochastic Solution Value | Case Study Context | Source |

|---|---|---|---|---|

| Biomass Feedstock Supply (Yield) | Underestimation of total cost by 15-25% | $2.1M Expected Cost vs. $2.7M Deterministic | Corn stover supply in Midwestern US, 1-year horizon | (Marvin et al., 2023) |

| Biofuel Market Price | Overestimation of NPV by 30-40% | $50M Expected NPV vs. $72M Deterministic NPV | National biorefinery network, 10-year horizon | (IEA Bioenergy, 2024) |

| Conversion Technology Efficiency | Suboptimal facility capacity by 50-70% | Optimal capacity 500k tons/yr (stochastic) vs. 850k tons/yr (deterministic) | Lignocellulosic ethanol plant siting | (Zhang & García, 2024) |

| Transportation & Logistics Cost | Cost variability risk exposure increase of 200% | Conditional Value-at-Risk (CVaR) increased from $0.5M to $1.5M | International biodiesel supply chain | (Supply Chain Sustainability Review, 2023) |

Experimental Protocol: Evaluating Model Robustness

To empirically demonstrate the limitations of a deterministic model, follow this comparative simulation protocol.

Protocol Title: Comparative Robustness Analysis of Deterministic vs. Two-Stage Stochastic Programming (SP) Models for Biorefinery Siting.

Objective: To quantify the expected value of perfect information (EVPI) and the value of the stochastic solution (VSS) for a biofuel supply chain under feedstock supply uncertainty.

Materials & Computational Setup:

- Software: GAMS/CPLEX or Pyomo with appropriate solvers.

- Data: Historical regional biomass yield (e.g., switchgrass) data for 10-15 years.

- Deterministic Model (DM): Input mean yield values for each region.

- Stochastic Model (SP): Input a discrete probability distribution of yield scenarios (e.g., low, mean, high) derived from historical data.

Procedure:

- Scenario Generation: From historical yield data, fit a distribution and generate N equiprobable yield scenarios (s ∈ S).

- Solve the Deterministic Problem: Solve the DM using mean yields. Record the here-and-now decisions (e.g., biorefinery locations, capacities).

- Evaluate DM Decisions under Uncertainty: Fix the first-stage decisions from Step 2. For each yield scenario s, solve the resulting second-stage (recourse) problem (e.g., logistics, production). Calculate the total expected cost: E[Cost_DM] = Σ_s p_s * Cost(DM decisions, scenario s).

- Solve the Stochastic Problem: Solve the full two-stage SP model, which optimizes first-stage decisions considering all scenarios and their recourse actions. Record the optimal expected cost: SP_Value.

- Calculate Metrics:

  - Expected Value of Perfect Information (EVPI): EVPI = SP_Value - Wait-and-See_Value, where Wait-and-See_Value is the expected cost if you could decide after uncertainty is revealed.

  - Value of the Stochastic Solution (VSS): VSS = E[Cost_DM] - SP_Value. This quantifies the cost of ignoring uncertainty.

Expected Outcome: For a minimization problem, VSS is non-negative by construction; a large positive VSS demonstrates the economic benefit of the stochastic model. EVPI is likewise non-negative and sets an upper bound on the value of obtaining perfect forecasts.
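The metrics in this protocol can be reproduced on a toy problem small enough to solve by enumeration. The sketch below is illustrative only: the first-stage decision `x` is a hypothetical contracted feedstock quantity at unit cost `c`, each scenario is a feedstock requirement `d`, and any shortfall is bought at a higher spot price `q`. All numbers are invented. Because the cost is piecewise linear and convex in `x`, an optimum lies at zero or at one of the scenario requirement levels, so no solver is needed.

```python
def total_cost(x, d, c=1.0, q=4.0):
    """First-stage cost c*x plus recourse cost q per unit of shortfall."""
    return c * x + q * max(d - x, 0.0)

def expected_cost(x, scenarios):
    return sum(p * total_cost(x, d) for d, p in scenarios)

def best_first_stage(scenarios):
    """Enumerate the breakpoints of the convex piecewise-linear expected cost."""
    candidates = [0.0] + [d for d, _ in scenarios]
    return min(candidates, key=lambda x: expected_cost(x, scenarios))

# Hypothetical (requirement, probability) scenarios
scenarios = [(60.0, 0.3), (100.0, 0.4), (140.0, 0.3)]

# Deterministic model: plan for the mean, then evaluate under all scenarios
mean_d = sum(p * d for d, p in scenarios)
x_dm = best_first_stage([(mean_d, 1.0)])
cost_dm = expected_cost(x_dm, scenarios)

# Stochastic model: optimize the expectation directly
x_sp = best_first_stage(scenarios)
sp_value = expected_cost(x_sp, scenarios)

# Wait-and-see: optimize separately for each scenario, then average
ws_value = sum(p * total_cost(best_first_stage([(d, 1.0)]), d) for d, p in scenarios)

evpi = sp_value - ws_value
vss = cost_dm - sp_value
print(f"x_DM = {x_dm:.1f}, x_SP = {x_sp:.1f}, EVPI = {evpi:.1f}, VSS = {vss:.1f}")
```

In this instance the stochastic solution contracts more than the mean requirement because a shortfall is four times as costly as a surplus unit, which is exactly the asymmetry a mean-value model cannot see.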

Logical Pathway: From Deterministic to Stochastic Optimization

The following diagram illustrates the conceptual and decision-making divergence between deterministic and stochastic modeling approaches.

[Figure: Deterministic vs. Stochastic Optimization Pathways]

The Scientist's Toolkit: Research Reagent Solutions

Essential computational and data resources for conducting stochastic programming research in biofuel supply chains.

Table 2: Essential Toolkit for Stochastic Supply Chain Research

| Item / Solution | Function in Research | Example / Provider |

|---|---|---|

| Stochastic Programming Solver | Solves large-scale linear/nonlinear SP problems with recourse. | IBM ILOG CPLEX with stochastic extensions, GAMS/DE, Pyomo with PySP. |

| Scenario Generation & Reduction Library | Creates and manages probabilistic scenarios from data; reduces their number while preserving statistical properties. | SCENRED2 in GAMS, scenred R package, in-house algorithms based on k-means clustering. |

| Uncertainty Data Repository | Provides historical and forecast data on key uncertain parameters (yield, price, demand). | USDA NASS databases, EIA Annual Energy Outlook, NOAA climate data. |

| Performance Metric Scripts | Calculates EVPI, VSS, and risk metrics (CVaR) from model outputs. | Custom Python/R scripts for post-processing solver outputs. |

| Supply Chain Digital Twin Platform | Provides a visual simulation environment to test model prescriptions under various uncertainty realizations. | AnyLogistix, Simio, FlexSim customized for biomass logistics. |

This technical guide details the core concepts of stochastic programming, framed explicitly within the context of an introductory thesis for biofuel supply chain research. Biofuel supply chains face profound uncertainty from feedstock yield variability, fluctuating market prices, unpredictable conversion rates, and policy shifts. Stochastic programming provides a rigorous mathematical framework to model these uncertainties explicitly, enabling the design of robust, cost-effective, and resilient supply chain networks. For researchers, scientists, and professionals in related fields like biochemical development, mastering this methodology is key to transitioning from deterministic, often inadequate, models to decision-making tools that account for real-world variability.

Foundational Concepts and Vocabulary

Stochastic Programming (SP): A framework for optimization under uncertainty, where some problem data is modeled as random variables with known (or estimated) probability distributions. The goal is to find a decision policy that optimizes the expected value (or another risk measure) of an objective function.

Two-Stage Recourse Problem: The fundamental SP model. First-stage decisions (here-and-now) are made before uncertainty is realized (e.g., building biorefinery capacity). Second-stage decisions (wait-and-see or recourse actions) are made after a specific scenario of uncertainty unfolds (e.g., adjusting feedstock transport given a yield shortfall). The objective minimizes first-stage cost plus the expected cost of the second-stage recourse.

Scenario: A possible realization of all random variables, representing one complete "future." SP problems are often solved by approximating the underlying probability distribution with a finite set of scenarios \( \omega \in \Omega \), each with probability \( p_\omega \).

Non-Anticipativity: The fundamental requirement that first-stage decisions cannot depend on information only available in the future. When a model carries a separate copy of the first-stage variables for each scenario, all copies are forced to be equal.

Risk Measures: Tools to model preferences beyond expected value. Common measures include Value-at-Risk (VaR) and Conditional Value-at-Risk (CVaR), which help manage tail risks (e.g., catastrophic supply disruption).
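For a finite scenario set, both measures can be computed directly from scenario costs and probabilities. The sketch below is a minimal illustration with invented numbers. `var_cvar` is a hypothetical helper that takes VaR as the smallest cost whose cumulative probability reaches the confidence level and CVaR as VaR plus the probability-weighted excess over VaR, divided by the tail probability.

```python
def var_cvar(costs, probs, alpha=0.9):
    """Return (VaR, CVaR) at confidence level alpha for a discrete cost distribution."""
    ordered = sorted(zip(costs, probs))
    cumulative = 0.0
    var = ordered[-1][0]
    for cost, p in ordered:
        cumulative += p
        if cumulative >= alpha - 1e-12:  # small tolerance for floating-point sums
            var = cost
            break
    # Expected excess over VaR, rescaled by the tail probability
    excess = sum(p * max(cost - var, 0.0) for cost, p in ordered)
    return var, var + excess / (1.0 - alpha)

# Hypothetical scenario costs ($k) with one rare supply-disruption scenario
costs = [100.0, 120.0, 150.0, 300.0]
probs = [0.4, 0.3, 0.2, 0.1]

var_90, cvar_90 = var_cvar(costs, probs, alpha=0.9)
expected = sum(c * p for c, p in zip(costs, probs))
print(f"expected = {expected:.1f}, VaR(90%) = {var_90:.1f}, CVaR(90%) = {cvar_90:.1f}")
```

The expected cost barely registers the disruption scenario, while CVaR at 90% is driven entirely by it. That gap is why tail-risk measures are added to, or substituted for, the expectation in the objective.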

Mathematical Formulation for a Biofuel Supply Chain

A canonical two-stage stochastic linear program for a biofuel supply chain design is:

First Stage (Design):

Minimize: \( c^T x + \mathbb{E}_{\omega}[Q(x, \xi_\omega)] \)

Subject to: \( Ax = b, \; x \geq 0 \)

Where:

- \( x \): Vector of first-stage decisions (biorefinery locations, capacities).

- \( c \): Corresponding investment costs.

- \( Ax = b \): Deterministic design constraints.

Second Stage (Recourse) for Scenario \( \omega \):

\( Q(x, \xi_\omega) = \min_{y_\omega} \; q_\omega^T y_\omega \)

Subject to: \( T_\omega x + W y_\omega = h_\omega, \; y_\omega \geq 0 \)

Where:

- \( \xi_\omega = (q_\omega, T_\omega, W, h_\omega) \): Realization of random data in scenario \( \omega \) (feedstock costs, yield, demand).

- \( y_\omega \): Recourse decisions (logistics, production, inventory).

- \( W \): Recourse matrix (typically fixed, "fixed recourse").

- \( T_\omega x \): Linkage between stages.
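With a finite scenario set, the expectation becomes a probability-weighted sum, and the two stages can be written as a single linear program (the extensive form, or deterministic equivalent). The block below restates the formulation above in that form, using the same symbols together with the scenario probabilities \( p_\omega \) from the definition of a scenario.

```latex
\[
\begin{aligned}
\min_{x,\,\{y_\omega\}} \quad & c^T x + \sum_{\omega \in \Omega} p_\omega \, q_\omega^T y_\omega \\
\text{s.t.} \quad & A x = b, \\
& T_\omega x + W y_\omega = h_\omega, && \forall \omega \in \Omega, \\
& x \geq 0, \quad y_\omega \geq 0, && \forall \omega \in \Omega.
\end{aligned}
\]
```

The single shared \( x \) enforces non-anticipativity implicitly, while the number of \( y_\omega \) blocks grows linearly with the number of scenarios, which is what motivates decomposition methods.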

Quantitative Data in Biofuel Supply Chain Uncertainty

Table 1: Representative Stochastic Parameters in Biofuel Supply Chain Modeling

| Parameter | Source of Uncertainty | Typical Range/Variation | Impact Stage | Common Distribution |

|---|---|---|---|---|

| Feedstock Yield (e.g., switchgrass tons/acre) | Weather, soil quality | ±20-40% from mean | Second | Normal, Beta |

| Feedstock Purchase Price | Market volatility, competition | ±15-30% annually | Second | Lognormal, Empirical |

| Biofuel Conversion Rate | Technological process variability | ±5-15% of design rate | Second | Uniform, Triangular |

| Final Biofuel Demand | Policy mandates, oil prices | ±10-25% forecast | Second | Normal, Scenario-based |

| Crude Oil Price | Global markets, geopolitics | Highly volatile (±50%) | Second | Geometric Brownian Motion, Empirical |

Table 2: Comparison of Optimization Approaches for Supply Chains

| Approach | Key Characteristic | Handles Uncertainty? | Computational Burden | Solution Philosophy |

|---|---|---|---|---|

| Deterministic LP | Uses single-point forecasts (e.g., average values) | No | Low | "Perfect foresight" – often infeasible under real variability. |

| Stochastic Programming (SP) | Explicitly models scenarios with probabilities | Yes, proactively | High | "Here-and-now" + recourse. Optimizes expected performance. |

| Robust Optimization (RO) | Uses uncertainty sets (bounds), no probabilities | Yes, conservatively | Medium to High | "Worst-case" focus. Highly conservative solutions. |

| Simulation-Optimization | Simulates uncertainty to evaluate a given design | Yes, reactively | Very High | "Trial-and-error" search for good designs. |

Experimental & Computational Protocols

Protocol 1: Scenario Generation and Reduction for Biofuel SP Models

- Data Collection: Gather historical time-series data for key uncertain parameters (see Table 1). For forward-looking parameters (e.g., demand under new policy), use expert elicitation or system dynamics models.

- Statistical Modeling: Fit appropriate probability distributions to each parameter. Test goodness-of-fit (e.g., Chi-square, KS tests). Model correlations (e.g., high oil price may correlate with high feedstock demand).

- Initial Scenario Generation: Use Monte Carlo sampling or Latin Hypercube Sampling from the joint distribution to generate a large set of scenarios (e.g., 10,000). Each scenario is a vector of all random parameter values.

- Scenario Reduction: Apply reduction algorithms (e.g., forward selection, backward reduction, k-means clustering) to select a manageable, representative subset of scenarios (e.g., 50-100) that best approximates the original distribution's statistical properties. Assign new probabilities to the reduced scenarios.

- Validation: Ensure the reduced scenario tree preserves the moments (mean, variance) and correlation structure of the original data.
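The reduction step can be sketched with a greedy forward-selection heuristic. This is one simple variant written for illustration, not the algorithm of any particular package: `forward_selection` repeatedly keeps the scenario that most reduces the probability-weighted distance from all scenarios to their nearest kept scenario, then reassigns each discarded scenario's probability to its closest kept neighbor. The (yield, price) data are synthetic.

```python
import math
import random

def forward_selection(scenarios, probs, n_keep):
    """Greedy scenario reduction; returns kept indices and their new probabilities."""
    n = len(scenarios)
    dist = [[math.dist(a, b) for b in scenarios] for a in scenarios]
    kept = []
    nearest = [math.inf] * n  # distance from each scenario to its closest kept one
    for _ in range(n_keep):
        best, best_score = None, math.inf
        for u in range(n):
            if u in kept:
                continue
            score = sum(probs[k] * min(nearest[k], dist[k][u]) for k in range(n))
            if score < best_score:
                best, best_score = u, score
        kept.append(best)
        nearest = [min(nearest[k], dist[k][best]) for k in range(n)]
    # Redistribute probability mass to the closest kept scenario
    new_probs = {j: 0.0 for j in kept}
    for k in range(n):
        closest = min(kept, key=lambda j: dist[k][j])
        new_probs[closest] += probs[k]
    return kept, new_probs

# Synthetic (yield in t/ha, price in $/t) scenarios; in practice, standardize each
# dimension first so that no single parameter dominates the distance.
rng = random.Random(0)
scenarios = [(rng.gauss(10.0, 2.0), rng.gauss(60.0, 9.0)) for _ in range(200)]
probs = [1.0 / len(scenarios)] * len(scenarios)

kept, new_probs = forward_selection(scenarios, probs, n_keep=5)
for j in kept:
    print(f"yield = {scenarios[j][0]:.1f}, price = {scenarios[j][1]:.1f}, p = {new_probs[j]:.3f}")
```

After reduction, comparing the probability-weighted mean and variance of the kept scenarios against the full set is a quick form of the validation step.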

Protocol 2: Solving a Two-Stage Stochastic Linear Program via the Deterministic Equivalent

- Model Formulation: Write the complete Deterministic Equivalent Problem (DEP). This is a large linear program that explicitly creates variables \( y_\omega \) and constraints for each scenario \( \omega \in \Omega \). The scenarios are linked either through a single shared first-stage vector \( x \) or, if \( x \) is copied per scenario, through explicit non-anticipativity constraints.

- Algorithm Selection: Solve the DEP directly with a large-scale LP solver (e.g., CPLEX, Gurobi), or use a decomposition algorithm (e.g., the L-shaped method, which is Benders decomposition applied to SP). Decomposition typically scales better as the number of scenarios grows.

- L-Shaped Method Workflow:

  a. Master Problem: Solve a relaxation of the first-stage problem (with only \( x \) variables and an approximate second-stage cost).

  b. Subproblems: For each scenario \( \omega \), solve the second-stage LP \( Q(x^*, \xi_\omega) \) given the current first-stage solution \( x^* \).

  c. Optimality Cut: From the subproblem dual solutions, generate a linear inequality (Benders cut) that under-approximates the expected recourse function \( \mathbb{E}[Q(x, \xi)] \) and add it to the Master Problem.

  d. Iterate: Repeat until the lower bound (Master objective) and the upper bound (first-stage cost plus probability-weighted subproblem costs at the best \( x^* \) found so far) converge within a tolerance.
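The L-shaped loop can be traced end to end on a deliberately tiny instance. The sketch below makes strong simplifying assumptions so that it runs without a solver. The first stage is a single capacity variable `x`. The recourse problem is "buy the shortfall `y >= d - x` at price `q`", whose optimal dual is known in closed form. The one-dimensional master is solved by enumerating bounds and cut intersections. Only optimality cuts appear because recourse is always feasible here. All names and numbers are hypothetical.

```python
import math

def solve_master(cuts, c, x_max):
    """Minimize c*x + theta s.t. theta >= a + b*x for every cut, 0 <= x <= x_max.

    In one dimension the optimum lies at a bound or at an intersection of two cuts.
    """
    candidates = {0.0, x_max}
    for i, (a1, b1) in enumerate(cuts):
        for a2, b2 in cuts[i + 1:]:
            if b1 != b2:
                x = (a2 - a1) / (b1 - b2)
                if 0.0 <= x <= x_max:
                    candidates.add(x)

    def objective(x):
        return c * x + max(a + b * x for a, b in cuts)

    x_best = min(candidates, key=objective)
    return x_best, objective(x_best)

def l_shaped(scenarios, c, q, x_max, tol=1e-6, max_iter=50):
    cuts = [(0.0, 0.0)]  # theta >= 0 is valid because recourse cost is non-negative
    best_x, upper = None, math.inf
    for iteration in range(1, max_iter + 1):
        x, lower = solve_master(cuts, c, x_max)      # master gives a lower bound
        intercept = slope = recourse = 0.0
        for d, p in scenarios:                       # one subproblem per scenario
            dual = q if d > x else 0.0               # optimal dual of y >= d - x
            recourse += p * q * max(d - x, 0.0)
            intercept += p * dual * d
            slope -= p * dual
        if c * x + recourse < upper:                 # feasible point gives an upper bound
            best_x, upper = x, c * x + recourse
        if upper - lower <= tol:
            return best_x, upper, iteration
        cuts.append((intercept, slope))              # optimality cut: theta >= intercept + slope*x
    raise RuntimeError("L-shaped loop did not converge")

scenarios = [(60.0, 0.3), (100.0, 0.4), (140.0, 0.3)]  # (requirement, probability)
x_opt, cost_opt, n_iter = l_shaped(scenarios, c=1.0, q=4.0, x_max=200.0)
print(f"x* = {x_opt:.2f}, expected cost = {cost_opt:.2f}, iterations = {n_iter}")
```

In a realistic model the master and subproblems are LPs or MILPs handed to a solver, and the subproblem loop is the part that parallelizes across scenarios.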

Visualizations

[Figure: Stochastic Programming Methodology Workflow]

[Figure: Two-Stage SP Structure for Biofuel Chains]

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational & Modeling Tools for Stochastic Programming Research

| Item (Tool/Solution) | Function in Stochastic Programming Research | Example in Biofuel Supply Chain Context |

|---|---|---|

| Optimization Solver (Commercial) | Core engine for solving large-scale Linear/Integer Programs (DEP). | Gurobi, CPLEX, FICO Xpress. Used to solve the deterministic equivalent model directly or within decomposition algorithms. |

| SP-Specific Modeling Languages | High-level languages to express SP models naturally, automating scenario tree management and DEP generation. | IBM Cplex Stochastic Studio, GAMS (STOCH library), Pyomo (PySP). Facilitates rapid model prototyping and testing. |

| Scenario Generation Software | Tools to create, reduce, and manage scenario trees from data. | SCENRED (in GAMS), specialized MATLAB/Python libraries (e.g., scikit-learn for clustering-based reduction). |

| Decomposition Algorithm Libraries | Pre-coded implementations of L-shaped, Progressive Hedging, etc. | PySP (part of Pyomo), SUTIL. Essential for solving large-scale problems where DEP is too large to handle directly. |

| High-Performance Computing (HPC) Cluster | Parallel computing resource. | Second-stage subproblems in L-shaped methods are embarrassingly parallel. HPC drastically reduces solution times for real-world problems with 1000s of scenarios. |

| Sensitivity & Risk Analysis Add-ons | Post-solution tools to evaluate model robustness and risk metrics. | Custom scripts to calculate CVaR, or to re-run solutions under perturbed probability distributions (e.g., pω + Δ). |

The biofuel supply chain is a complex, multi-echelon network characterized by inherent uncertainties. These uncertainties span feedstock yield (affected by weather, pests), conversion rates (process variability), logistics (transportation delays), and market demand. Deterministic optimization models are insufficient for robust planning. This guide frames the biofuel supply chain ecosystem within the core thesis of Introduction to Stochastic Programming for Biofuel Supply Chains Research. Stochastic programming provides a mathematical framework to incorporate these uncertainties directly into the optimization model, enabling decisions that are optimal on average or in the worst case, thus enhancing the resilience and economic viability of the entire ecosystem.

The Biofuel Supply Chain: A Technical Decomposition

The ecosystem is segmented into five core operational echelons, each a source of uncertainty.

Feedstock Production & Procurement

This initial stage involves cultivating and harvesting biomass. Key uncertainties include annual yield (ton/hectare), quality (moisture, sugar/lignin content), and procurement cost.

- Primary Feedstocks: First-generation (e.g., corn, sugarcane), second-generation (e.g., agricultural residues, switchgrass, miscanthus), and third-generation (e.g., algae).

- Stochastic Variables: Biomass yield, seasonal availability, geographic dispersion, and pre-processing cost.

Feedstock Logistics & Preprocessing

Harvested biomass must be transported, stored, and densified.

- Processes: Collection, transportation (e.g., truck, rail), comminution, drying, pelleting.

- Stochastic Variables: Transportation lead times, degradation during storage, moisture content variability, and equipment failure rates.

Biofuel Production (Conversion)

Biomass is converted into liquid or gaseous fuels via biochemical, thermochemical, or chemical pathways.

- Key Technologies:

  - Biochemical: Enzymatic hydrolysis and fermentation (for lignocellulosic ethanol).

  - Thermochemical: Gasification and Fischer-Tropsch synthesis (for bio-synthetic paraffinic kerosene), pyrolysis.

- Stochastic Variables: Conversion efficiency, catalyst lifetime, reactor throughput, and byproduct yield.

Biofuel Logistics & Distribution

The finished biofuel must be blended, stored, and transported to end-users.

- Infrastructure: Pipelines, tanker trucks, railcars, storage terminals, blending facilities.

- Stochastic Variables: Distribution costs, blend wall constraints, regulatory changes, and intermediate storage inventory costs.

End-Use Markets

The final consumers of biofuel include transportation fleets, aviation, marine, and industrial heating.

- Stochastic Variables: Market price volatility, policy mandates (e.g., Renewable Fuel Standard volumes), and competing energy prices.

Table 1: Key Performance Indicators and Stochastic Ranges for Biofuel Pathways

| Metric | Corn Ethanol (1G) | Lignocellulosic Ethanol (2G) | Algal Biodiesel (3G) | FT Biofuels from Biomass |

|---|---|---|---|---|

| Feedstock Yield (dry ton/ha-yr) | 5 - 12 (grain) | 8 - 20 (e.g., miscanthus) | 20 - 60 (algae oil) | 8 - 20 (woody biomass) |

| Fuel Yield (GJ/ton feedstock) | 4.5 - 5.5 | 3.0 - 4.5 | 2.5 - 4.0 (oil extract) | 5.0 - 7.0 |

| Typical Conversion Efficiency (%) | 85 - 90% | 65 - 80%* | 70 - 85% (lipid extraction) | 45 - 60% (overall) |

| Minimum Selling Price (USD/GGE) | 1.80 - 2.50 | 2.50 - 4.50 | 5.00 - 12.00 | 3.50 - 6.50 |

| Key Stochastic Inputs | Corn commodity price, natural gas price | Feedstock composition, enzyme cost/activity | Algal growth rate, lipid content, harvest cost | Syngas composition, catalyst cost |

Note: Ranges reflect technical variability and uncertainty. GGE = Gallon of Gasoline Equivalent. *Highly dependent on pretreatment efficiency. **Highly sensitive to scale and technology maturity.

Table 2: Common Stochastic Parameters for Supply Chain Modeling

| Parameter | Distribution Type (Example) | Typical Range/Impact |

|---|---|---|

| Feedstock Yield | Normal/Beta (weather-dependent) | ±15-30% from mean |

| Transportation Cost | Uniform/Triangular (fuel price linked) | ±20% from baseline |

| Conversion Rate | Normal/Log-normal (process variance) | ±5-10% from design spec |

| End-User Demand | Poisson/Normal (market volatility) | ±10-25% from forecast |

| Policy Incentive | Discrete/Scenario-based | 0-100% of projected value |

Experimental Protocols for Key Research Areas

Protocol: Lignocellulosic Hydrolysis Sugar Yield Experiment

Objective: Quantify reducing sugar yield from a novel pretreatment method under variable feedstock compositions.

- Feedstock Milling: Grind biomass (e.g., wheat straw) to pass a 2-mm sieve.

- Pretreatment: Load 1.0g (dry basis) biomass into reactor. Add dilute acid (e.g., 1% w/w H₂SO₄) at 10:1 liquid-to-solid ratio. Treat at 160°C for 20 min. Quench rapidly.

- Enzymatic Hydrolysis: Adjust pH of slurry to 4.8. Add commercial cellulase cocktail (e.g., 15 FPU/g glucan) and β-glucosidase (e.g., 30 CBU/g glucan). Incubate at 50°C, 150 rpm for 72h.

- Analysis: Sample periodically, centrifuge. Analyze supernatant for glucose and xylose via HPLC with RI detector.

- Stochastic Modeling Input: Sugar yield (g/g biomass) is the primary response variable, modeled as a function of stochastic inputs: biomass lignin content, lot-to-lot variance in enzyme activity, and the precision of temperature control.

Protocol: Stochastic Life Cycle Assessment (LCA) Inventory

Objective: Generate probability distributions for GHG emissions of a supply chain.

- System Boundary: Define "farm-to-wheel" (feedstock production to combustion).

- Inventory Collection: Gather primary data for key processes (e.g., diesel use for hauling). Identify parameters with high uncertainty (e.g., N₂O emissions from soil, electricity grid carbon intensity).

- Assign Distributions: For each uncertain parameter, fit a probability distribution (e.g., Normal for fuel efficiency, Log-normal for emission factors) using literature data or measured variance.

- Monte Carlo Simulation: Using software (e.g., @RISK, openLCA), run 10,000+ iterations, randomly sampling from each input distribution.

- Output Analysis: Report results as a probability density function for total GHG emissions (g CO₂-eq/MJ), identifying key stochastic drivers.
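A stripped-down version of the simulation and output-analysis steps might look like the following. It is a sketch only: the three inventory items, their distributions, and every numeric value are hypothetical placeholders, and a dedicated LCA tool would handle a full inventory. Input-output correlation is used here as a simple proxy for identifying key stochastic drivers.

```python
import math
import random
from statistics import mean, quantiles

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def run_lca(n_iter=10_000, seed=7):
    """Sample each uncertain input and sum contributions (g CO2-eq/MJ)."""
    rng = random.Random(seed)
    inputs = {"hauling_diesel": [], "soil_n2o": [], "grid_electricity": []}
    totals = []
    for _ in range(n_iter):
        # Assumed: litres of diesel per MJ fuel (Normal) times a fixed emission factor
        diesel = rng.gauss(0.004, 0.0005) * 2700.0
        # Assumed: soil N2O contribution, Log-normal with median 8
        n2o = rng.lognormvariate(math.log(8.0), 0.4)
        # Assumed: grid electricity contribution, Normal
        grid = rng.gauss(5.0, 1.0)
        inputs["hauling_diesel"].append(diesel)
        inputs["soil_n2o"].append(n2o)
        inputs["grid_electricity"].append(grid)
        totals.append(diesel + n2o + grid)
    return inputs, totals

inputs, totals = run_lca()
cuts = quantiles(totals, n=20)  # cut points at 5%, 10%, ..., 95%
print(f"P5 = {cuts[0]:.1f}, median = {cuts[9]:.1f}, P95 = {cuts[-1]:.1f} g CO2-eq/MJ")

drivers = {name: pearson(values, totals) for name, values in inputs.items()}
for name, r in sorted(drivers.items(), key=lambda item: -abs(item[1])):
    print(f"{name}: correlation with total = {r:.2f}")
```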

Visualizing the Integrated Stochastic Supply Chain

[Figure: Biofuel Supply Chain with Stochastic Optimization]

[Figure: Biochemical Conversion with Stochastic Factors]

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents & Materials for Biofuel Pathway Research

| Item | Function | Example/Supplier (Illustrative) |

|---|---|---|

| Cellulase/Cellulolytic Enzyme Cocktail | Hydrolyzes cellulose to fermentable sugars. Critical for 2G biofuel yield. | CTec3 (Novozymes), Accellerase (DuPont). |

| Genetically Modified Fermentation Strain | Engineered yeast or bacteria for co-fermentation of C5 & C6 sugars. | Saccharomyces cerevisiae 424A(LNH-ST), Zymomonas mobilis AX101. |

| Analytical Standards (for HPLC/GC) | Quantification of sugars, organic acids, inhibitors, and fuel molecules. | NIST-traceable Succinic Acid, Furfural, Ethanol. (Sigma-Aldrich, Agilent). |

| Lipid Extraction Solvent System | Efficient extraction of lipids from algal or oleaginous biomass for biodiesel. | Chloroform:Methanol (2:1 v/v) Bligh & Dyer method. |

| Heterogeneous Catalyst (Thermochemical) | Catalyzes key reactions (e.g., Fischer-Tropsch, hydrodeoxygenation). | Co/Al₂O₃, Pt/Al₂O₃, Zeolite ZSM-5. |

| Lignin Model Compound | Simplifies study of lignin depolymerization pathways. | Guaiacylglycerol-β-guaiacyl ether (GGE). |

| Anaerobic Chamber | Provides oxygen-free environment for studying methanogenesis or anaerobic digestion. | Coy Laboratory Products, Vinyl Type with mixed gas (N₂/H₂/CO₂). |

| Stochastic Modeling Software | Solves multi-stage stochastic programming problems with recourse. | IBM CPLEX with extensions, GAMS, Python (Pyomo, PySP). |

Within the optimization of biofuel supply chains, deterministic models fail to capture critical uncertainties that define real-world operations. This technical guide frames three core stochastic drivers—weather, policy, and market volatility—within the broader thesis of stochastic programming for biofuel supply chain research. Effective modeling of these drivers is paramount for designing resilient systems capable of maintaining efficiency and profitability under uncertainty, with direct methodological parallels to stochastic optimization challenges in pharmaceutical development.

Quantitative Data on Stochastic Drivers

Table 1: Key Quantitative Metrics for Stochastic Drivers (2023-2024 Data)

| Driver | Key Metrics | Typical Volatility Range | Primary Data Sources | Relevance to Biofuel Supply Chain |

|---|---|---|---|---|

| Weather | Precipitation deviation (%), Temperature anomaly (°C), Growing Degree Days, Drought index (SPEI) | +/- 30-50% yield impact | NOAA, NASA POWER, ERA5, USDA NASS | Biomass feedstock yield, harvesting & transport logistics, biorefinery operation (water dependency) |

| Policy | Renewable Volume Obligation (RVO) targets, Carbon credit price ($/credit), Tax credit value ($/gallon), Sustainability compliance thresholds | +/- 20-40% annual policy shift | EPA, U.S. Congress Bills, EU RED II/III directives, California LCFS | Demand certainty, feedstock eligibility, facility investment ROI, blending mandates |

| Market Volatility | Brent crude price ($/bbl), Corn/soybean price ($/bushel), Renewable Identification Number (RIN) price ($/RIN), Freight rate index | Daily price CV* of 2-5% | EIA, CBOT, OPEC reports, Bloomberg NEF | Feedstock procurement cost, biofuel selling price, operational margin, transportation cost |

*CV: Coefficient of Variation

Experimental Protocols for Driver Analysis

Protocol: Simulating Weather Impact on Feedstock Yield

Objective: To generate stochastic yield scenarios for stochastic programming models.

Materials: Historical weather data (30+ years), crop growth model (e.g., DSSAT, APSIM), GIS soil data.

Method:

- Downscale regional climate projections to field-level resolution.

- Calibrate crop model using historical yield data for target feedstock (e.g., switchgrass, corn stover).

- Run Monte Carlo simulations (n=1000+) by sampling from historical weather parameter distributions (temperature, precipitation, solar radiation).

- Fit output yield distributions (e.g., Beta, Log-Normal) for each planning period (monthly/seasonal).

- Use the fitted distributions as input probability functions for two-stage stochastic programming models in which planting decisions are first-stage and harvesting decisions are recourse actions taken after yields are observed.

Protocol: Policy Shock Modeling with Agent-Based Simulation (ABS)

Objective: To assess supply chain resilience under stochastic policy changes.

Materials: Policy database, ABS platform (e.g., AnyLogic, NetLogo), historical RIN price data.

Method:

- Code agents representing farmers, biorefineries, blenders, and regulators.

- Define agent decision rules based on historical behavior (e.g., farmers plant biofuel crops if expected profit margin > 15%).

- Introduce stochastic policy shocks as exogenous events: randomly sample from a set of plausible policy changes (e.g., RVO reset, tax credit expiration) according to a Poisson process.

- Track system-level outcomes: biofuel production volume, price volatility, agent bankruptcies.

- Output a set of plausible future states for use in stochastic programming's scenario tree generation.
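
The shock-arrival step of this protocol can be sketched in a few lines. The example below is a minimal illustration, not the agent-based simulation itself: it draws shock times from a homogeneous Poisson process by accumulating exponential inter-arrival times, then picks a shock type at random. The shock catalogue, its weights, and the rate of 0.4 shocks per year are hypothetical placeholders.

```python
import random

# Hypothetical catalogue of policy shocks with relative likelihoods (assumed values).
POLICY_SHOCKS = {
    "rvo_reset": 0.5,
    "tax_credit_expiration": 0.3,
    "lcfs_target_tightening": 0.2,
}

def sample_policy_shocks(rate_per_year, horizon_years, rng):
    """Return a list of (time, shock_name) events from a homogeneous Poisson process."""
    names = list(POLICY_SHOCKS)
    weights = list(POLICY_SHOCKS.values())
    events = []
    t = rng.expovariate(rate_per_year)  # time of the first shock
    while t < horizon_years:
        events.append((t, rng.choices(names, weights=weights, k=1)[0]))
        t += rng.expovariate(rate_per_year)  # exponential inter-arrival time
    return events

rng = random.Random(42)
paths = [sample_policy_shocks(0.4, 10.0, rng) for _ in range(5000)]
mean_count = sum(len(p) for p in paths) / len(paths)
print(f"average shocks per 10-year path: {mean_count:.2f} (theory: rate * horizon = 4.00)")
```

Each simulated path can then be fed to the agents as an exogenous event list, and the resulting system states collected as candidate scenarios.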

Protocol: Modeling Integrated Market-Weather-Policy Scenarios

Objective: To generate correlated multi-driver scenarios for robust optimization.

Materials: Integrated database of all three drivers, statistical software (R, Python with pandas).

Method:

- Conduct Vector Autoregression (VAR) analysis to quantify lead-lag relationships between drivers (e.g., drought announcement -> corn price spike -> RIN price volatility).

- Use Copula functions (e.g., Gaussian, t-Copula) to model dependence structures between non-normal marginal distributions of each driver.

- Generate a scenario tree by:

  a. Sampling from the joint probability distribution defined by the Copula.

  b. Applying a forward selection algorithm that minimizes a probability metric (e.g., the Kantorovich distance) to reduce the sample to a manageable number of representative scenarios (e.g., 50-100) with assigned probabilities.

  c. Validating the tree against historical stress periods (e.g., 2012 drought, 2020 pandemic demand shock).
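
The copula sampling step can be illustrated with a two-driver sketch using only the Python standard library. It couples a Weibull-distributed yield with a lognormal price through a Gaussian copula. The marginal families, their parameters, and the correlation of -0.6 (drought lowers yield and raises price) are assumptions chosen for illustration, not fitted values.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def gaussian_copula_pairs(rho, n, rng):
    """Draw n pairs of uniforms (u1, u2) coupled by a Gaussian copula with correlation rho."""
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        # 2x2 Cholesky factor turns independent normals into correlated ones.
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        pairs.append((STD_NORMAL.cdf(z1), STD_NORMAL.cdf(z2)))
    return pairs

def clamp(u, eps=1e-12):
    """Keep u strictly inside (0, 1) so inverse CDFs stay finite."""
    return min(max(u, eps), 1.0 - eps)

def weibull_yield(u, scale=4.5, shape=6.0):
    """Inverse CDF of a Weibull marginal (hypothetical stover yield, t/acre)."""
    return scale * (-math.log(1.0 - clamp(u))) ** (1.0 / shape)

def lognormal_price(u, mu=math.log(5.0), sigma=0.25):
    """Inverse CDF of a lognormal marginal (hypothetical corn price, $/bushel)."""
    return math.exp(mu + sigma * STD_NORMAL.inv_cdf(clamp(u)))

def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

rng = random.Random(7)
pairs = gaussian_copula_pairs(rho=-0.6, n=5000, rng=rng)
yields = [weibull_yield(u1) for u1, _ in pairs]
prices = [lognormal_price(u2) for _, u2 in pairs]
print(f"sample yield-price correlation: {pearson(yields, prices):.2f}")
```

Because both inverse CDFs are increasing, the sign of the dependence set in the copula carries over to the transformed variables, while each marginal keeps its own non-normal shape.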

Visualization of Stochastic Programming Framework

Title: Stochastic Programming for Biofuel Supply Chains

Title: Scenario Tree Generation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Toolkit for Stochastic Biofuel Supply Chain Research

| Tool/Reagent Category | Specific Example(s) | Function in Experimental Protocol |

|---|---|---|

| Data Aggregation Platforms | Bloomberg Terminal, EIA API, USDA Quick Stats, Climate Data Store (CDS) | Provides real-time and historical quantitative data feeds for weather, commodity prices, and policy announcements to populate stochastic models. |

| Statistical & Modeling Software | R (copula, sp package), Python (PySP, pandas, SciPy), GAMS (LINDO, CPLEX), @RISK | Used for distribution fitting, dependence modeling, Monte Carlo simulation, and solving large-scale stochastic programming problems. |

| Crop & Bioprocess Simulators | DSSAT, DayCent, SuperPro Designer, Aspen Plus | Generates high-fidelity technical coefficients (e.g., yield, conversion rate) under varying weather and operational conditions for use in optimization constraints. |

| Scenario Generation & Reduction Algorithms | Kantorovich distance-based reduction, Moment matching, SCENRED2 (GAMS) | Transforms millions of simulated futures into a tractable, representative scenario tree with assigned probabilities for stochastic programming. |

| Optimization Solvers | CPLEX, Gurobi, Xpress, SHOT (for MINLP) | Solves the large-scale deterministic equivalent of the stochastic program, handling mixed-integer variables for facility location/activation decisions. |

Building Robust Models: Methodologies for Stochastic Biofuel Supply Chain Optimization

Stochastic programming provides a rigorous mathematical framework for decision-making under uncertainty, a cornerstone for optimizing biofuel supply chains. These chains face profound uncertainties in feedstock yield, market prices, conversion rates, and policy shifts. A two-stage stochastic program explicitly models the sequence of decisions: here-and-now (first-stage) decisions made before uncertainty is realized, and wait-and-see (second-stage) decisions made adaptively after the uncertainty is revealed. This paradigm is critical for designing resilient and cost-effective biofuel networks, balancing upfront infrastructure investments with flexible operational policies.

Foundational Mathematical Formulation

The canonical two-stage stochastic linear program with recourse is:

First-Stage (Here-and-Now):

Minimize: ( c^T x + \mathbb{E}_{\omega}[Q(x,\omega)] )

Subject to: ( Ax = b, x \geq 0 )

Where ( Q(x,\omega) ) is the optimal value of the second-stage problem:

Second-Stage (Wait-and-See):

Minimize: ( q(\omega)^T y(\omega) )

Subject to: ( T(\omega)x + W(\omega)y(\omega) = h(\omega), y(\omega) \geq 0 )

- ( x ): First-stage decisions (e.g., biorefinery capacity, long-term contracts).

- ( \omega ): Random event from a defined probability space.

- ( y(\omega) ): Second-stage recourse actions (e.g., spot market purchases, routing adjustments).

- ( T(\omega), W(\omega), h(\omega), q(\omega) ): Stochastic parameters (e.g., feedstock cost, demand).
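
When the random event takes finitely many values ( \omega_1, ..., \omega_S ) with probabilities ( p_s ), the expectation becomes a weighted sum and the two stages collapse into a single linear program, usually called the deterministic equivalent or extensive form. The shorthand ( q_s = q(\omega_s) ), ( T_s = T(\omega_s) ), ( W_s = W(\omega_s) ), ( h_s = h(\omega_s) ), ( y_s = y(\omega_s) ) is introduced here for compactness:

```latex
\begin{aligned}
\min_{x,\,y_1,\dots,y_S} \quad & c^T x + \sum_{s=1}^{S} p_s\, q_s^T y_s \\
\text{s.t.} \quad & Ax = b, \\
& T_s x + W_s y_s = h_s, \quad s = 1,\dots,S, \\
& x \geq 0, \quad y_s \geq 0, \quad s = 1,\dots,S.
\end{aligned}
```

The single copy of ( x ) shared by all scenario blocks is what enforces non-anticipativity; the number of variables and constraints grows linearly with ( S ).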

Comparative Analysis: Here-and-Now vs. Wait-and-See

Table 1: Conceptual Comparison of Decision Types

| Feature | Here-and-Now Decisions (First-Stage) | Wait-and-See Decisions (Second-Stage/Recourse) |

|---|---|---|

| Timing | Made before the realization of uncertain parameters. | Made after the realization of uncertain parameters. |

| Nature | Non-anticipative; must be fixed for all scenarios. | Adaptive; can be tailored to each specific scenario. |

| Typical Examples in Biofuel Supply Chains | Biorefinery location and capacity, type of pre-processing technology installed, signing of multi-year feedstock supply contracts. | Short-term feedstock procurement from spot markets, logistics routing adjustments, production scheduling, inventory management. |

| Mathematical Property | Decision variables are "design" variables. | Decision variables are "control" variables, functions of ω. |

| Value of Stochastic Solution (VSS) | The cost penalty incurred by using the deterministic expected value solution instead of the stochastic solution. | -- |

Table 2: Key Quantitative Metrics from Recent Studies (2020-2023)

| Study Focus (Biofuel Context) | Expected Value of Perfect Information (EVPI) | Value of Stochastic Solution (VSS) | Computational Solve Time (Typical) |

|---|---|---|---|

| Corn Stover Supply Chain [1] | 8-12% of total cost | 5-9% of total cost | 45-120 min (Sample Avg. Approx.) |

| Algae-to-Biodiesel Network [2] | 10-15% of total cost | 7-11% of total cost | 2-4 hours (Benders Decomp.) |

| Multi-feedstock (Switchgrass, Miscanthus) [3] | 6-10% of total cost | 4-7% of total cost | 20-60 min (Commercial Solver) |

EVPI measures the expected value of removing all uncertainty (Wait-and-See benchmark). VSS measures the value of using the stochastic model over a deterministic one.

Experimental Protocol: Scenario-Based Solution & Analysis

Protocol 1: Evaluating the Stochastic Programming Model

- Problem Definition: Define the biofuel supply chain network (sources, processing, markets).

- Uncertainty Characterization: Identify key stochastic parameters (e.g., feedstock yield ξ_yield, biofuel demand ξ_demand). Fit historical data to probability distributions.

- Scenario Generation: Use Monte Carlo simulation or moment-matching techniques to generate a discrete set of scenarios {ω₁, ω₂, ..., ω_S} with associated probabilities p_s.

- Deterministic Equivalent Formulation: Create the large-scale linear program encompassing all scenarios.

- Solution: Apply decomposition algorithms (L-shaped, Progressive Hedging) or solve directly with a large-scale solver.

- Post-analysis: Calculate EVPI and VSS as key performance indicators. For a minimization problem, WS ≤ RP ≤ EEV, so both indicators are non-negative.

- EVPI = RP - WS, where RP (Recourse Problem) is the optimal value of the two-stage stochastic program and WS (Wait-and-See) is the probability-weighted average of the optimal values obtained by solving each scenario independently.

- VSS = EEV - RP, where EEV (Expected result of using the Expected Value solution) is obtained by fixing the first-stage solution of the deterministic model (solved with expected parameter values) in the stochastic model and computing its expected cost.
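
The post-analysis step can be made concrete with a toy problem small enough to solve by enumeration. In the sketch below, the first-stage decision is the tonnage contracted in advance and the recourse is a spot-market purchase covering any shortfall. All costs, demands, and probabilities are hypothetical. Because the expected cost is piecewise linear in the contracted amount with kinks at the scenario demands, it is enough to test those demand values (and zero) as candidates.

```python
C_CONTRACT = 10.0   # first-stage contract cost per tonne (assumed)
Q_SPOT = 40.0       # second-stage spot cost per tonne of shortfall (assumed)
SCENARIOS = [(0.3, 60.0), (0.4, 100.0), (0.3, 140.0)]  # (probability, demand)

def scenario_cost(x, demand):
    """Total cost when x tonnes are contracted and the shortfall is bought on the spot market."""
    return C_CONTRACT * x + Q_SPOT * max(demand - x, 0.0)

def expected_cost(x, scenarios=SCENARIOS):
    return sum(p * scenario_cost(x, d) for p, d in scenarios)

def best_first_stage(scenarios):
    """Minimize expected cost over the kink points of the piecewise-linear objective."""
    candidates = [0.0] + [d for _, d in scenarios]
    return min(candidates, key=lambda x: expected_cost(x, scenarios))

# RP: solve the stochastic program over all scenarios at once.
x_rp = best_first_stage(SCENARIOS)
rp = expected_cost(x_rp)

# WS: solve each scenario with perfect foresight, then average the optimal costs.
ws = sum(p * scenario_cost(best_first_stage([(1.0, d)]), d) for p, d in SCENARIOS)

# EEV: solve the expected-value problem, then evaluate its solution under uncertainty.
mean_demand = sum(p * d for p, d in SCENARIOS)
x_ev = best_first_stage([(1.0, mean_demand)])
eev = expected_cost(x_ev)

evpi = rp - ws
vss = eev - rp
print(f"RP={rp:.0f}  WS={ws:.0f}  EEV={eev:.0f}  EVPI={evpi:.0f}  VSS={vss:.0f}")
```

For these numbers the stochastic solution contracts 140 tonnes (RP = 1400), the expected-value solution contracts 100 tonnes (EEV = 1480), and perfect foresight would cost 1000 on average, giving EVPI = 400 and VSS = 80.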

Visualization of Two-Stage Stochastic Programming Workflow

Title: Two-Stage Stochastic Decision Flow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational & Modeling Tools for Stochastic Biofuel Supply Chain Research

| Item / Solution | Function in Research |

|---|---|

| Commercial Solver (Gurobi, CPLEX) | Solves large-scale deterministic equivalent Mixed-Integer Linear Programming (MILP) problems. Essential for direct solution of smaller models or node problems in decomposition. |

| Decomposition Algorithm Scripts (L-Shaped, Benders) | Custom Python/MATLAB implementations to break the extensive form into master (first-stage) and sub-problems (second-stage) for computational tractability. |

| Scenario Generation Library (PyStan, Scipy.stats) | Used to fit probability distributions to historical data (e.g., crop yields) and generate a representative set of discrete scenarios for optimization. |

| Stochastic Modeling Language (Pyomo, GAMS) | High-level modeling environments that allow natural declaration of stochastic parameters, stages, and scenarios, facilitating model formulation and maintenance. |

| High-Performance Computing (HPC) Cluster | Enables parallel solution of multiple scenario sub-problems simultaneously, drastically reducing wall-clock time for decomposition algorithms. |

| Geographic Information System (GIS) Software | Provides spatial data (feedstock locations, transportation networks) crucial for defining realistic network parameters and constraints in the optimization model. |

Chance-constrained programming (CCP) is a critical subfield of stochastic programming designed to manage decision-making under uncertainty by ensuring that the probability of satisfying constraints meets a pre-specified reliability level. Within biofuel supply chain research, this framework is indispensable for navigating the inherent volatilities in biomass feedstock supply, conversion yields, and final product demand. This technical guide provides an in-depth examination of CCP methodologies, their application to biofuel systems, and practical experimental protocols for researchers and development professionals.

Foundational Mathematical Framework

A generic CCP formulation for a supply chain problem is:

Minimize: ( C^T x )

Subject to: ( \Pr( T_i x \geq h_i(\xi) ) \geq 1 - \alpha_i, \quad i = 1, ..., m )

( Ax = b, \quad x \geq 0 )

Where:

- ( x ): Vector of decision variables (e.g., biomass ordered, production levels).

- ( \xi ): Vector of random parameters (e.g., yield, demand).

- ( T_i, h_i(\xi) ): Define the ( i )-th stochastic constraint.

- ( \alpha_i \in [0, 1] ): The allowable probability of constraint violation (risk tolerance).

- ( 1 - \alpha_i ): The required reliability level.

Key Data and Stochastic Parameters in Biofuel Supply Chains

Critical uncertainties must be quantified. The following table summarizes primary stochastic parameters, their typical distributions, and data sources.

Table 1: Key Stochastic Parameters in Biofuel Supply Chain Modeling

| Parameter Category | Specific Example | Common Probabilistic Model | Typical Data Source |

|---|---|---|---|

| Feedstock Supply | Lignocellulosic biomass yield (ton/ha) | Beta, Truncated Normal | Historical agronomic field trials, USDA/NASS surveys. |

| Conversion Process | Biochemical conversion yield (gal/ton) | Lognormal, Uniform | Pilot-scale reactor experiments, techno-economic analysis (TEA) databases. |

| Market Demand | Advanced biofuel demand (million gal) | Autoregressive (AR) time series | EIA (Energy Information Administration) reports, market forecasts. |

| Logistics | Transportation cost ($/ton-mile) | Triangular, Empirical | Freight rate bulletins, historical logistics contracts. |

| Policy | Renewable Identification Number (RIN) price ($) | Geometric Brownian Motion, Regime-switching | EPA compliance reports, fuel market exchanges. |

Experimental Protocols for Parameter Estimation & Validation

Protocol 4.1: Estimating Biomass Yield Distributions

Objective: Characterize the stochastic yield of switchgrass (Panicum virgatum) for a CCP model.

- Site Selection: Establish ( n ) (e.g., 50) experimental plots across a target geospatial region, stratifying by soil type and historical precipitation.

- Cultivation: Grow a standardized cultivar under defined agronomic practices. Record daily weather data.

- Harvest & Measurement: At maturity, harvest each plot and measure dry mass yield (ton/ha).

- Statistical Fitting: Fit candidate distributions (Normal, Beta, Weibull) to the yield data using maximum likelihood estimation (MLE). Select the best fit via the Akaike Information Criterion (AIC).

- Dependency Analysis: Perform correlation or copula analysis between yield and recorded weather variables (e.g., seasonal rainfall).

Protocol 4.2: Calibrating Conversion Yield Uncertainty

Objective: Determine the probability distribution of biofuel yield from enzymatic hydrolysis and fermentation.

- Experimental Design: Conduct ( m ) (e.g., 100) batch experiments in a controlled bioreactor.

- Controlled Variability: Deliberately vary key input parameters within operational bounds (e.g., feedstock composition, enzyme loading, pH) according to a Latin Hypercube Sampling (LHS) design.

- Output Measurement: For each run, measure the final titer (g/L) and calculate the effective yield (gal/ton).

- Modeling: Perform a multivariate regression to create a meta-model. Treat the residuals of the meta-model as a random variable ( \epsilon ) and fit a distribution to ( \epsilon ). The stochastic yield is then ( Y = f(X) + \epsilon ), where ( f(X) ) is the deterministic meta-model.

Protocol 4.3: Validating a CCP Supply Chain Model

Objective: Test the reliability of a chance-constrained biofuel supply plan via simulation.

- Model Solution: Solve the CCP model for an optimal decision vector ( x^* ) at reliability level ( 1-\alpha ).

- Monte Carlo Simulation: Generate ( K=10,000 ) independent and identically distributed (i.i.d.) scenarios of the random vector ( \xi ) based on the distributions from Protocols 4.1 & 4.2.

- Constraint Audit: For each scenario ( k ), evaluate whether the stochastic constraints ( T_i x^* \geq h_i(\xi^k) ) are satisfied.

- Reliability Calculation: Compute the empirical reliability: ( \hat{R} = (\text{Number of satisfying scenarios}) / K ).

- Validation Criterion: The model is considered valid if ( | \hat{R} - (1-\alpha) | < \delta ) (e.g., ( \delta = 0.01 )).
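
The audit in Protocol 4.3 can be sketched for a single constraint of the form ( x \geq d ). The example assumes a lognormal demand, for which the chance constraint has an exact quantile reformulation, and then checks the solution by simulation. The distribution parameters and the random seed are hypothetical; K, α, and δ follow the values quoted in the protocol.

```python
import math
import random
from statistics import NormalDist

LOG_MEAN, LOG_SD = math.log(100.0), 0.2   # assumed lognormal demand parameters
ALPHA = 0.05                              # allowed violation probability
K = 10_000                                # number of Monte Carlo scenarios
DELTA = 0.01                              # validation tolerance

# Model solution: smallest x with Pr(x >= d) >= 1 - ALPHA, i.e. the (1 - ALPHA) quantile of d.
z = NormalDist().inv_cdf(1.0 - ALPHA)
x_star = math.exp(LOG_MEAN + LOG_SD * z)

# Monte Carlo audit: count the scenarios in which the constraint holds.
rng = random.Random(2024)
satisfied = sum(1 for _ in range(K) if x_star >= rng.lognormvariate(LOG_MEAN, LOG_SD))
r_hat = satisfied / K

is_valid = abs(r_hat - (1.0 - ALPHA)) < DELTA
print(f"x* = {x_star:.1f}, empirical reliability = {r_hat:.4f}, valid = {is_valid}")
```

With several constraints, the same loop would record one indicator per constraint (individual reliability) or a single indicator that all constraints hold (joint reliability).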

Methodological Pathways in CCP

(Decision Flow for Implementing Chance-Constrained Programming)

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Supporting CCP Experiments in Biofuel Research

| Item / Solution | Function in CCP-Related Research |

|---|---|

| Process Simulation Software (e.g., Aspen Plus, SuperPro Designer) | Creates deterministic base-case models for techno-economic analysis; provides data for defining uncertain parameter ranges and relationships. |

| Statistical & Optimization Suites (e.g., R with sdetools, Python with Pyomo & scipy.stats) | Used for distribution fitting, sampling (LHS, Monte Carlo), and formulating/solving the CCP optimization models. |

| Pilot-Scale Bioreactor Array | Enables high-throughput, parallel experimental runs (see Protocol 4.2) to generate empirical data on conversion yield variability under controlled perturbations. |

| Geographic Information System (GIS) Software (e.g., ArcGIS) | Analyzes spatial correlations in feedstock supply data, crucial for modeling dependent uncertainties across regions. |

| Validated Kinetic Model Database (e.g., NREL's Biofuels Atlas) | Provides prior distributions and meta-model structures for conversion yields, reducing experimental burden for parameter estimation. |

Advanced Considerations: Joint vs. Individual Chance Constraints

A critical modeling choice is between individual (( \Pr(\text{constraint}_i) \geq 1-\alpha_i )) and joint (( \Pr(\text{all constraints}) \geq 1-\alpha )) chance constraints. Joint constraints are more realistic but computationally demanding. The reformulation approach differs significantly.

(Individual vs. Joint Chance Constraint Pathways)
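
One standard way to connect the two forms is the Bonferroni (union bound) approximation, shown here as a worked inequality. It is a conservative device, not necessarily the reformulation used in any particular study. If each of the ( m ) individual constraints is enforced with violation probability ( \alpha_i = \alpha / m ), then:

```latex
\Pr\Big( \bigcup_{i=1}^{m} \{ T_i x < h_i(\xi) \} \Big)
\;\leq\; \sum_{i=1}^{m} \Pr\big( T_i x < h_i(\xi) \big)
\;\leq\; \sum_{i=1}^{m} \alpha_i
\;=\; m \cdot \frac{\alpha}{m}
\;=\; \alpha .
```

The joint constraint therefore holds with probability at least ( 1 - \alpha ). For example, a joint reliability of 95% across five constraints is guaranteed by requiring 99% reliability on each one, at the price of a more conservative (more expensive) solution than an exact joint formulation.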

Numerical Example: Biofuel Blending under Demand Uncertainty

Consider a biorefinery deciding how much biofuel ( x ) to produce at cost ( c ), facing stochastic demand ( d \sim N(\mu, \sigma^2) ). A chance constraint ensures meeting demand with 95% reliability (( \alpha = 0.05 )).

Constraint: ( \Pr(x \geq d) \geq 0.95 ).

Deterministic Equivalent: Assuming normally distributed demand, this reformulates to ( x \geq \mu + \Phi^{-1}(0.95) \sigma ), where ( \Phi^{-1} ) is the standard normal quantile function.

Table 3: Solution Sensitivity to Risk Tolerance (α)

| Risk Tolerance (α) | Reliability (1-α) | z-score (Φ⁻¹(1-α)) | Optimal Production (x*) for μ=100, σ=20 | Shortfall Probability |

|---|---|---|---|---|

| 0.01 | 0.99 | 2.33 | 146.6 | Very Low (1%) |

| 0.05 | 0.95 | 1.64 | 132.8 | Low (5%) |

| 0.10 | 0.90 | 1.28 | 125.6 | Moderate (10%) |

| 0.20 | 0.80 | 0.84 | 116.8 | High (20%) |

This demonstrates the explicit trade-off between cost (production level) and reliability managed by CCP, a fundamental consideration for robust biofuel supply chain design.
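
The production levels in this example can be reproduced with the standard normal quantile function from Python's standard library. This is a minimal check, using the μ and σ of the example. Because the table rounds the z-score to two decimals before multiplying, the unrounded values computed here may differ from it by about 0.1.

```python
from statistics import NormalDist

MU, SIGMA = 100.0, 20.0  # demand mean and standard deviation from the example

def min_production(alpha, mu=MU, sigma=SIGMA):
    """Smallest x satisfying Pr(x >= d) >= 1 - alpha for d ~ N(mu, sigma^2)."""
    z = NormalDist().inv_cdf(1.0 - alpha)
    return mu + z * sigma

for alpha in (0.01, 0.05, 0.10, 0.20):
    z = NormalDist().inv_cdf(1.0 - alpha)
    print(f"alpha={alpha:.2f}  z={z:.3f}  x*={min_production(alpha):.1f}")
```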

In the research of biofuel supply chain optimization under uncertainty, stochastic programming provides the mathematical framework to make decisions that are robust to unpredictable future states. A core challenge is the representation of uncertainties—such as biomass feedstock yield, market price volatility, conversion technology efficiency, and policy incentives—within a computationally tractable model. This technical guide focuses on the critical step of Scenario Generation & Reduction, which transforms continuous or high-dimensional probability distributions into a finite, representative set of discrete scenarios (the uncertainty set). The quality of this set directly impacts the relevance and computational feasibility of the resulting stochastic programming solution for biofuel supply chain design and operation.

Foundational Methods for Scenario Generation

Scenario generation creates a finite set of potential future outcomes (scenarios), each with an assigned probability, to approximate the underlying stochastic processes.

Statistical Sampling Techniques

- Monte Carlo Sampling: Direct random sampling from known or estimated multivariate distributions of uncertain parameters.

- Latin Hypercube Sampling (LHS): A stratified sampling technique ensuring full coverage of each parameter's distribution, leading to better representation with fewer samples.

- Quasi-Monte Carlo: Uses low-discrepancy sequences (e.g., Sobol, Halton) to fill the probability space more uniformly than random sampling.
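
The stratification idea behind Latin Hypercube Sampling can be shown with a short standard-library sketch. Each dimension is cut into as many equal-probability strata as there are samples, and every stratum is used exactly once per dimension. The marginal distributions applied afterwards (a normal yield and a uniform price) are hypothetical.

```python
import random
from statistics import NormalDist

def latin_hypercube(n_samples, n_dims, rng):
    """Return n_samples points in the unit hypercube, one per stratum in every dimension."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_samples))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        # Draw one uniform point inside each stratum [k/n, (k+1)/n).
        columns.append([(k + rng.random()) / n_samples for k in strata])
    return [tuple(col[i] for col in columns) for i in range(n_samples)]

rng = random.Random(1)
points = latin_hypercube(8, 2, rng)

# Map the uniforms to the uncertain parameters through inverse CDFs (assumed marginals).
yield_dist = NormalDist(mu=4.0, sigma=0.6)   # biomass yield, t/acre
scenarios = [(yield_dist.inv_cdf(u), 2.0 + 3.0 * v) for u, v in points]  # price uniform on [2, 5] $/gal
for y, price in scenarios:
    print(f"yield={y:.2f}  price={price:.2f}")
```

This basic version treats the dimensions as independent. Inducing correlation between parameters requires an additional step, such as reordering the columns or passing the uniforms through a copula.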

Moment Matching & Property Fitting

This approach generates scenarios whose sample moments (mean, variance, covariance, skewness) match prespecified target values, often derived from historical data. It solves an optimization problem to minimize the difference between the scenarios' statistical properties and the targets.

Path-Based Generation for Time Series

For multi-period problems (e.g., sequential planting, harvesting, and processing decisions), scenarios must represent plausible paths of uncertainty.

- Vector Autoregressive (VAR) Models: Capture inter-temporal and cross-parameter dependencies (e.g., between feedstock cost and fuel price).

- Geometric Brownian Motion (GBM): Often used for modeling long-term price uncertainty.
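
As an illustration of path-based generation, the sketch below simulates Geometric Brownian Motion price paths with the exact log-normal update ( S_{t+\Delta t} = S_t \exp((\mu - \sigma^2/2)\Delta t + \sigma \sqrt{\Delta t}\, Z) ), where ( Z ) is standard normal. The starting price, drift, and volatility are assumed values, not calibrated estimates.

```python
import math
import random

def simulate_gbm_path(s0, mu, sigma, n_steps, dt, rng):
    """Simulate one GBM path; returns n_steps + 1 prices including the start value."""
    path = [s0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)
        growth = math.exp((mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
        path.append(path[-1] * growth)
    return path

S0, MU, SIGMA = 3.0, 0.02, 0.20   # hypothetical fuel price ($/gal), drift, volatility
N_YEARS = 10

rng = random.Random(11)
paths = [simulate_gbm_path(S0, MU, SIGMA, N_YEARS, 1.0, rng) for _ in range(10_000)]
mean_terminal = sum(p[-1] for p in paths) / len(paths)
expected_terminal = S0 * math.exp(MU * N_YEARS)   # E[S_T] = S0 * exp(mu * T)
print(f"simulated mean terminal price: {mean_terminal:.2f} (theory: {expected_terminal:.2f})")
```

Each simulated path is one candidate scenario; a reduction step is normally applied afterwards because thousands of paths are too many for the optimization model.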

Data-Driven Generation

Utilizes historical data or simulation output directly.

- Bootstrapping: Resamples from historical data to create new, equally probable scenario sets.

- Clustering of Historical Paths: Groups similar historical trajectories, using the cluster centroids as representative scenarios.

Table 1: Comparison of Primary Scenario Generation Methods

| Method | Key Principle | Advantages | Disadvantages | Best Suited For |

|---|---|---|---|---|

| Monte Carlo | Random sampling from distributions. | Simple, unbiased, asymptotically correct. | Requires many samples for accuracy; slow convergence. | General-purpose, well-defined distributions. |

| Latin Hypercube | Stratified random sampling. | Better coverage than MC with same sample size. | More complex implementation; correlation handling needed. | Expensive simulation models. |

| Moment Matching | Optimize to match statistical properties. | Ensures key statistical fidelity. | Computationally intensive; may produce extreme scenarios. | When moments are known with more certainty than full distribution. |

| Vector Autoregressive | Linear dependence on own lags & other variables. | Captures dynamic interdependencies. | Assumes linearity; parameter estimation sensitive. | Multi-period uncertainties with cross-correlations. |

| Bootstrapping | Resampling from empirical data. | Makes no parametric assumptions. | Limited to historical range; may not represent future shocks. | Rich historical data is available. |

Core Algorithms for Scenario Reduction

A large set of generated scenarios leads to intractable stochastic programs. Reduction algorithms produce a significantly smaller subset that approximates the original distribution with minimal loss of information, measured by a probability metric.

Fast Forward Selection (FFS)

A greedy algorithm that, at each iteration, adds the scenario whose inclusion most reduces the probability-weighted distance between the original set and the selected subset, until the desired number K of scenarios is selected.

Experimental Protocol: Fast Forward Selection

- Input: Original scenario set S with N scenarios and probabilities p_i; target number of scenarios K.

- Initialize: Set of selected scenarios J = {}, set of remaining scenarios I = {1,...,N}.

- Iterate for k = 1 to K:

  a. For each candidate scenario j in I, temporarily add it to J.

  b. For each scenario i in I \ {j}, compute its distance to the closest scenario in the temporary J. A common distance is the Euclidean norm of the difference in parameter vectors.

  c. Calculate the total contribution for candidate j: C(j) = Σ_{i in I \ {j}} p_i * (min_{s in J∪{j}} distance(i, s)).

  d. Select the candidate j* that minimizes C(j).

  e. Permanently add j* to J and remove it from I.

- Output: Reduced scenario set J containing K scenarios.

- Probability Redistribution: Assign new probabilities to the selected scenarios. The probability of a selected scenario s becomes its original probability plus the sum of probabilities of all non-selected scenarios for which s is the closest selected scenario.
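
The steps above can be sketched directly in Python. The implementation below follows the protocol with Euclidean distance and caches each scenario's distance to its nearest selected scenario so that step (b) is not recomputed from scratch. The five one-dimensional scenarios and their probabilities are hypothetical test data.

```python
import math

def fast_forward_selection(scenarios, probs, k):
    """Greedy forward selection; returns (selected indices, redistributed probabilities)."""
    n = len(scenarios)
    dist = [[math.dist(a, b) for b in scenarios] for a in scenarios]
    selected = []
    remaining = set(range(n))
    nearest = [math.inf] * n  # distance from each scenario to its closest selected scenario
    for _ in range(k):
        best_j, best_cost = None, math.inf
        for j in sorted(remaining):
            # Weighted distance of the other unselected scenarios to the set J plus candidate j.
            cost = sum(probs[i] * min(nearest[i], dist[i][j]) for i in remaining if i != j)
            if cost < best_cost:
                best_j, best_cost = j, cost
        selected.append(best_j)
        remaining.discard(best_j)
        nearest = [min(nearest[i], dist[i][best_j]) for i in range(n)]
    # Probability redistribution: each dropped scenario gives its mass to the closest kept one.
    new_probs = {j: probs[j] for j in selected}
    for i in remaining:
        closest = min(selected, key=lambda j: dist[i][j])
        new_probs[closest] += probs[i]
    return selected, new_probs

# Hypothetical biomass supply scenarios (kt) with probabilities.
scenarios = [(0.0,), (1.0,), (10.0,), (11.0,), (30.0,)]
probs = [0.1, 0.3, 0.3, 0.2, 0.1]
selected, new_probs = fast_forward_selection(scenarios, probs, k=3)
for j in selected:
    print(f"scenario {scenarios[j]} kept with probability {new_probs[j]:.2f}")
```

For this data the procedure keeps the scenarios at 10, 1, and 30 (in that order of selection) with probabilities 0.5, 0.4, and 0.1. For multi-parameter scenarios, the components should be normalized before computing distances so that no single unit dominates.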

Backward Reduction

The reverse process: iteratively deletes the scenario whose removal causes the smallest increase in a quality metric (e.g., the Kantorovich distance). More computationally intensive than FFS but can yield slightly better results.

Simultaneous Reduction via Clustering

Treats scenario reduction as a clustering problem, where the K cluster centers become the reduced set.

- k-Means Clustering: Partitions scenarios into K clusters to minimize within-cluster variance. The cluster centroids become the new scenarios. Probabilities are summed from all scenarios in the cluster.

- k-Medoids Clustering: Similar to k-means, but selects an actual scenario from the cluster (the medoid) as the representative, which can be advantageous for preserving realistic, feasible values.
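
A compact k-medoids sketch is given below. It uses the simple alternating heuristic (assign each scenario to its nearest medoid, then move each medoid to the member with the lowest probability-weighted distance to the rest of its cluster), which is easier to read than the full PAM swap search but can stop in a poorer local optimum. The naive initialization with the first K scenarios and the sample data are assumptions made for illustration.

```python
import math

def k_medoids(scenarios, probs, k, max_iter=100):
    """Alternating k-medoids; returns (medoid indices, probability of each medoid)."""
    n = len(scenarios)
    dist = [[math.dist(a, b) for b in scenarios] for a in scenarios]

    def assign(medoids):
        clusters = {m: [] for m in medoids}
        for i in range(n):
            # A medoid always stays in its own cluster; others go to the nearest medoid.
            owner = i if i in clusters else min(medoids, key=lambda m: dist[i][m])
            clusters[owner].append(i)
        return clusters

    medoids = list(range(k))  # naive initialization (assumed); random restarts are common
    for _ in range(max_iter):
        clusters = assign(medoids)
        new_medoids = [
            min(members, key=lambda c: sum(probs[i] * dist[i][c] for i in members))
            for members in clusters.values()
        ]
        if set(new_medoids) == set(medoids):
            break
        medoids = new_medoids
    clusters = assign(medoids)
    weights = {m: sum(probs[i] for i in clusters[m]) for m in medoids}
    return medoids, weights

# Hypothetical one-dimensional scenarios forming two obvious groups, equally likely.
scenarios = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,), (12.0,)]
probs = [1.0 / 6.0] * 6
medoids, weights = k_medoids(scenarios, probs, k=2)
for m in sorted(medoids):
    print(f"medoid {scenarios[m]} with probability {weights[m]:.2f}")
```

On this data the heuristic settles on the scenarios at 1 and 11, each carrying probability 0.5. Unlike k-means centroids, both representatives are scenarios that actually occurred in the input set.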

Table 2: Comparison of Primary Scenario Reduction Algorithms

| Algorithm | Type | Key Metric | Complexity | Key Output |

|---|---|---|---|---|

| Fast Forward Selection | Greedy, forward | Minimal increase in total distance. | O(K * N²) | Selected scenario subset with redistributed probabilities. |

| Backward Reduction | Greedy, backward | Minimal increase in Kantorovich distance. | O(N⁴) without optimization | Selected scenario subset with redistributed probabilities. |

| k-Means Clustering | Partitional clustering | Within-cluster sum of squares (variance). | O(I * K * N) where I=iterations | Cluster centroids (may not be actual scenarios). |

| k-Medoids (PAM) | Partitional clustering | Sum of distances to medoid. | O(K * (N-K)²) | Actual scenarios (medoids) as representatives. |

Title: Scenario Generation & Reduction Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational & Data Tools for Scenario Analysis

| Item/Reagent | Function in Scenario Generation & Reduction | Example/Note |

|---|---|---|

| Statistical Software (R/Python) | Core platform for implementing sampling, fitting, and reduction algorithms. | R: scenario package, tidyverse. Python: SciPy, NumPy, scikit-learn for clustering. |

| Optimization Solver | Required for moment-matching generation and solving the final stochastic program. | Gurobi, CPLEX, or open-source (CBC) integrated via Pyomo or JuMP. |

| Probabilistic Forecast Library | Provides models for time-series and path generation. | R: forecast, vars. Python: statsmodels, Prophet. |

| Specialized Scenario Tools | Dedicated libraries for stochastic programming preprocessing. | R: SDDP (for multi-stage problems). Python: ScenRed (reduction utilities). |

| High-Performance Computing (HPC) Cluster | Enables parallel generation of large scenario trees and solving large-scale stochastic programs. | Cloud platforms (AWS, GCP) or institutional clusters for computationally intensive sampling. |

| Biofuel-Specific Datasets | Provide empirical distributions for key uncertain parameters. | USDA biomass yield data, EIA fuel price forecasts, DOE technology cost benchmarks. |

Application Protocol: Biofuel Supply Chain Case Study

Experimental Protocol: Constructing an Uncertainty Set for a Multi-Feedstock Biorefinery

- Objective: Generate a reduced scenario set for a two-stage stochastic program optimizing biorefinery location and capacity, considering uncertain biomass supply and product price.

- Uncertain Parameters: 1) Corn stover yield (regional, tons/acre), 2) Switchgrass yield (regional, tons/acre), 3) Bio-jet fuel market price ($/gallon).

- Time Horizon: 10 annual periods.

Data Collection & Model Fitting:

- Gather 20 years of historical yield data (USDA) and fuel price analogs (EIA).

- Fit a tri-variate VAR(1) model to capture cross-correlations and temporal dynamics between the three parameters.

- Validate model fit using out-of-sample backtesting.

Path-Based Scenario Generation:

- Use the fitted VAR model to simulate 10,000 independent price/yield paths over 10 years via Monte Carlo simulation. This forms the initial scenario tree S_0.

Scenario Reduction via k-Medoids:

- Apply the Partitioning Around Medoids (PAM) algorithm to the 10,000 simulated 10-year paths.

- Set target clusters K=50 to achieve a balance between model fidelity and computational tractability.

- Use Euclidean distance on normalized data points.

- Output: 50 representative 10-year paths (the medoids). The probability of each medoid scenario is (number of paths in its cluster) / 10,000.

Integration & Validation:

- Input the 50 scenarios with their probabilities into a two-stage stochastic Mixed-Integer Programming (MIP) model for biorefinery investment.

- Validate the reduced set by comparing the Expected Value of Perfect Information (EVPI) and the Value of the Stochastic Solution (VSS) calculated using the full (10,000) and reduced (50) scenario sets. A minimal difference indicates a high-quality reduction.

Title: Reduced Scenario Tree for Two-Stage Model

Effective Scenario Generation & Reduction is the cornerstone of implementing stochastic programming for biofuel supply chain research. It bridges the gap between complex, high-dimensional uncertainty and the practical need for computationally solvable models. The choice of generation method must reflect the nature of the underlying data (parametric vs. non-parametric, independent vs. path-dependent), while the reduction technique must preserve the stochastic information crucial for high-quality decisions. By employing the systematic methodologies and tools outlined in this guide, researchers can create robust, representative uncertainty sets that lead to biofuel supply chain strategies capable of withstanding real-world volatility.

This technical guide examines strategic decision-making under uncertainty, framed within a broader research thesis on stochastic programming applications for biofuel supply chain optimization. For drug development professionals and researchers, these methodologies are directly analogous to planning biomanufacturing networks, where long-term, capital-intensive facility investments must be made amidst fluctuating demand, regulatory shifts, and technological innovations. Stochastic programming provides a rigorous mathematical framework to incorporate these uncertainties into the strategic planning process, moving beyond deterministic models to build resilient and cost-effective supply chains.

Core Mathematical Framework

The problem is formalized as a two-stage stochastic program. The first-stage decisions, made before the realization of uncertain parameters, involve strategic choices: facility locations (binary decisions) and base capacity levels (continuous decisions). The second-stage, or recourse, decisions adapt to the revealed scenario ξ, encompassing operational decisions like production allocation, transportation, and potential capacity expansion.

General Model Formulation:

Minimize: ( \text{Cost}_{\text{Fixed}}(x) + \mathbb{E}_{\xi}[Q(x, \xi)] )

Subject to: ( x \in X )

Where:

- ( x ): First-stage strategic decisions (location, base capacity).

- ( \xi ): Random vector of uncertain parameters (e.g., demand, feedstock cost, conversion yield).

- ( Q(x, \xi) ): Optimal value of the second-stage problem given (x) and (\xi).

- ( \mathbb{E}_{\xi} ): Expectation over the probability distribution of (\xi).
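When the uncertainty is represented by a finite scenario set, the expectation in the objective becomes a probability-weighted sum. The block below is a sketch of that discrete form under the additional assumption that the second stage is a linear program. The symbols ( p_s ), ( y_s ), ( q_s ), ( W ), ( T_s ), and ( h_s ) are illustrative notation introduced here, not taken from the model above.

```latex
\begin{aligned}
Q(x, \xi_s) &= \min_{y_s \ge 0} \left\{ q_s^{\top} y_s \;:\; W y_s \ge h_s - T_s x \right\}, \\
\mathbb{E}_{\xi}[Q(x, \xi)] &= \sum_{s=1}^{K} p_s \, Q(x, \xi_s), \qquad \sum_{s=1}^{K} p_s = 1 .
\end{aligned}
```

Here ( y_s ) collects the recourse decisions for scenario ( s ), and ( T_s x ) carries the first-stage location and capacity choices into the second-stage constraints.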

Experimental Protocols & Methodologies

A standard methodological workflow for applying this framework is detailed below.

Protocol 1: Scenario Generation & Reduction

- Objective: To create a discrete and computationally manageable set of scenarios that accurately represents the underlying continuous probability distributions of uncertain parameters.

- Procedure:

- Parameter Identification: Define key uncertain parameters (e.g., biomass feedstock price, biofuel demand, policy incentive levels, technological success rate for a new catalyst).

- Distribution Fitting: Use historical data or expert elicitation to fit probability distributions (normal, log-normal, uniform) to each parameter.

- Sampling: Employ Monte Carlo simulation or Latin Hypercube Sampling to generate a large set of (N) (e.g., 10,000) correlated scenarios.

- Reduction: Apply a forward/backward reduction algorithm or k-means clustering to reduce the scenario set to a manageable size (K) (e.g., 10-50) while preserving the statistical properties (moments, shape) of the original distribution.
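The sampling and reduction steps of Protocol 1 can be sketched as follows. This is a minimal illustration, not a reference implementation: it assumes two lognormal parameters (feedstock cost with mean 85 and CV 20%, demand with mean 100 and CV 25%), a hypothetical log-space correlation of 0.3, plain Monte Carlo sampling, and a hand-written k-means loop in NumPy. The function names are invented for this example.

```python
import numpy as np

def lognormal_params(mean, cv):
    """Convert a mean and coefficient of variation into log-space (mu, sigma)."""
    sigma2 = np.log(1.0 + cv**2)
    return np.log(mean) - 0.5 * sigma2, np.sqrt(sigma2)

def sample_correlated_lognormal(means, cvs, log_corr, n, rng):
    """Draw n joint lognormal samples; correlation is specified in log-space."""
    mu, sigma = zip(*(lognormal_params(m, cv) for m, cv in zip(means, cvs)))
    cov = np.outer(sigma, sigma) * log_corr
    return np.exp(rng.multivariate_normal(mu, cov, size=n))

def kmeans_reduce(samples, k, rng, n_iter=50):
    """Reduce samples to k centroids; probability = share of samples in cluster."""
    centers = samples[rng.choice(len(samples), size=k, replace=False)]
    for _ in range(n_iter):
        d2 = ((samples[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = samples[labels == j]
            if len(members):              # keep the old centre if a cluster empties
                centers[j] = members.mean(axis=0)
    counts = np.bincount(labels, minlength=k)
    return centers, counts / len(samples)

rng = np.random.default_rng(42)
samples = sample_correlated_lognormal(
    means=[85.0, 100.0],                  # feedstock cost, demand
    cvs=[0.20, 0.25],
    log_corr=np.array([[1.0, 0.3], [0.3, 1.0]]),   # hypothetical correlation
    n=10_000,
    rng=rng,
)
scenarios, probs = kmeans_reduce(samples, k=20, rng=rng)
```

Because each centroid is the mean of its cluster, the probability-weighted mean of the reduced set matches the sample mean, but higher moments are only approximated. When parameters have very different scales, standardizing them before clustering is usually advisable.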

Protocol 2: Stochastic Mixed-Integer Linear Programming (SMILP) Solution

- Objective: To solve the resulting large-scale deterministic equivalent problem.

- Procedure:

- Formulation: Construct the extensive form (EF) of the problem, which explicitly writes out the model for each of the (K) scenarios, linked by the non-anticipative first-stage decisions.

- Algorithm Selection: Apply decomposition algorithms suited for SMILP:

- Benders Decomposition (L-Shaped Method): Separates the master problem (first-stage) from subproblems (second-stage for each scenario). Optimality cuts are iteratively added to the master problem.

- Progressive Hedging: Operates on scenario subproblems independently and uses penalty terms to force their first-stage solutions to converge to a common non-anticipative solution.

- Implementation: Use high-performance computing (HPC) resources and solvers (e.g., Gurobi, CPLEX) together with a decomposition framework (e.g., PySP for Pyomo, or its successor mpi-sppy).
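To make the Benders (L-shaped) iteration concrete, the sketch below applies a single-cut version to a deliberately tiny capacity problem. Everything in it is a simplifying assumption: one continuous first-stage variable, a recourse cost equal to a shortfall penalty (so the subproblem value and subgradient are available in closed form), hypothetical data, and a master problem solved by enumerating cut intersections rather than by an LP or MILP solver.

```python
# Hypothetical toy data (M$ per unit of capacity or shortfall).
c, q, x_max = 1.0, 5.0, 200.0
demand = [50.0, 100.0, 150.0]
prob = [0.3, 0.4, 0.3]

def recourse(x):
    """Expected second-stage cost Q(x) and one subgradient of Q at x."""
    value = sum(p * q * max(d - x, 0.0) for p, d in zip(prob, demand))
    slope = -q * sum(p for p, d in zip(prob, demand) if d > x)
    return value, slope

def solve_master(cuts):
    """Minimise c*x + theta s.t. theta >= a + b*x for all cuts, 0 <= x <= x_max.

    The objective is convex piecewise linear in x, so the minimum lies at a
    bound or at an intersection of two cuts.
    """
    candidates = [0.0, x_max]
    for i, (a1, b1) in enumerate(cuts):
        for a2, b2 in cuts[i + 1:]:
            if b1 != b2:
                x = (a2 - a1) / (b1 - b2)
                if 0.0 <= x <= x_max:
                    candidates.append(x)

    def master_obj(x):
        return c * x + max(a + b * x for a, b in cuts)

    x_star = min(candidates, key=master_obj)
    return x_star, master_obj(x_star)

cuts = [(0.0, 0.0)]            # theta >= 0 is valid: recourse cost is nonnegative
upper, x_best = float("inf"), None
for iteration in range(1, 51):
    x, lower = solve_master(cuts)          # lower bound from the relaxed master
    q_val, slope = recourse(x)             # evaluate all scenario subproblems
    if c * x + q_val < upper:              # best feasible solution so far
        upper, x_best = c * x + q_val, x
    if upper - lower <= 1e-6:
        break
    cuts.append((q_val - slope * x, slope))  # optimality cut: theta >= Q(x) + g*(x' - x)

print(f"capacity={x_best:.1f}, expected cost={upper:.1f}, iterations={iteration}")
```

In a real SMILP the master problem would contain the binary location variables and be passed to a solver, and the subgradient would come from the dual solution of each scenario LP. The loop structure (solve master, evaluate subproblems, add a cut, compare bounds) stays the same.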

Protocol 3: Solution Validation & Value of Stochastic Solution (VSS)

- Objective: Quantify the economic benefit of using a stochastic model over a deterministic one.

- Procedure:

- Solve Stochastic Model (SP): Obtain optimal first-stage decisions (x^*_{SP}) and expected cost (EC_{SP}).

- Solve Deterministic Model (EEV): Solve the mean-value problem, in which every uncertain parameter is replaced by its expected value, to obtain the first-stage decisions (x^*_{EV}). Fix the first-stage decisions to (x^*_{EV}), solve the second-stage model for each scenario individually, and compute the expected result of using the EV solution: (EEV = \sum_{s=1}^{K} p_s \, \text{Cost}(x^*_{EV}, \xi_s)).

- Calculate VSS: Compute (VSS = EEV - EC_{SP}). A positive VSS indicates the cost savings gained by accounting for uncertainty.
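The VSS calculation can be illustrated on a small capacity problem. In this sketch the data are hypothetical, the first-stage decision is restricted to a grid of capacity levels, and grid enumeration stands in for the MILP solves. The deterministic model is the mean-value problem, whose first-stage solution is then evaluated against every scenario to obtain EEV.

```python
# Hypothetical toy data: costs in M$ per unit of capacity or shortfall.
capacity_cost = 1.0
shortfall_penalty = 5.0
demand = [50.0, 100.0, 150.0]       # scenario demands
prob = [0.3, 0.4, 0.3]              # scenario probabilities
candidates = range(0, 201, 10)      # admissible first-stage capacities

def total_cost(x, d):
    """First-stage cost plus recourse cost for capacity x and demand d."""
    return capacity_cost * x + shortfall_penalty * max(d - x, 0.0)

def expected_cost(x):
    """Expected total cost of capacity x over all scenarios."""
    return sum(p * total_cost(x, d) for p, d in zip(prob, demand))

# Stochastic model: minimise the expected cost directly.
x_sp = min(candidates, key=expected_cost)
ec_sp = expected_cost(x_sp)

# Mean-value model: optimise against the expected demand only.
mean_demand = sum(p * d for p, d in zip(prob, demand))
x_ev = min(candidates, key=lambda x: total_cost(x, mean_demand))

# EEV: expected cost of committing to the mean-value solution.
eev = expected_cost(x_ev)
vss = eev - ec_sp

print(f"x_SP={x_sp}, EC_SP={ec_sp:.1f}, x_EV={x_ev}, EEV={eev:.1f}, VSS={vss:.1f}")
```

With these numbers the mean-value model builds 100 units and pays heavily in the high-demand scenario, while the stochastic model builds 150 units, so the VSS is 25.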

Data Presentation

Table 1: Representative Stochastic Parameters in Biofuel Supply Chain Modeling

| Parameter | Distribution Type (Example) | Base Value ± CV | Source / Justification |

|---|---|---|---|

| Biomass Feedstock Cost ($/dry ton) | Lognormal | 85 ± 20% | Historical commodity market volatility |

| Conversion Yield (gal/dry ton) | Triangular (Min: 70, Mode: 85, Max: 100) | 85 ± 12% | Laboratory-scale experimental variability |

| Government Subsidy Level ($/gal) | Discrete (High: 1.50, Med: 1.00, Low: 0.50) | 1.00 | Policy scenario analysis |

| Regional Biofuel Demand (M gal/year) | Autoregressive Time Series | 100 ± 25% | Economic forecasting models |

Table 2: Performance Metrics from a Comparative Study (Hypothetical Data)

| Model Type | Expected Total Cost (M$) | Cost Std. Dev. (M$) | Value of Stochastic Solution (VSS, M$) | Computational Time (CPU hours) |

|---|---|---|---|---|

| Deterministic (Mean-Value) | 1250 | 185 | - | 0.5 |

| Two-Stage Stochastic (50 Scenarios) | 1150 | 95 | 100 | 12.8 |

| Two-Stage Stochastic (200 Scenarios) | 1135 | 88 | 115 | 47.5 |

Figure: Stochastic Programming Two-Stage Decision Structure

Figure: Biofuel Conversion Process with Key Uncertainties

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Stochastic Supply Chain Modeling Experiments

| Item / Solution | Function in the "Experiment" | Example / Specification |

|---|---|---|

| Optimization Solver | Core computational engine for solving large-scale MILP and SMILP problems. | Gurobi Optimizer, IBM ILOG CPLEX, FICO Xpress. |

| Algebraic Modeling Language (AML) | High-level platform for formulating mathematical models in a readable, maintainable way. | Pyomo (Python), GAMS, AMPL. |

| Scenario Generation Library | Generates and reduces probabilistic scenarios from defined distributions. | scipy.stats in Python, randtoolbox in R, dedicated in-house code. |

| Decomposition Framework | Implements advanced algorithms (Benders, Progressive Hedging) to solve stochastic programs. | Pyomo's PySP package, SAS/OR SP, custom implementations. |

| High-Performance Computing (HPC) Cluster | Provides the parallel processing power required to solve multiple scenario subproblems simultaneously. | Linux-based cluster with MPI (Message Passing Interface) support. |

| Sensitivity Analysis Package | Systematically evaluates how changes in input distributions affect optimal decisions and costs. | SALib (Sensitivity Analysis Library in Python), custom Monte Carlo routines. |

This technical guide details the tactical-level applications of stochastic programming within biofuel supply chains, a critical research domain intersecting operations research and bio-economy development. The inherent uncertainties in biomass feedstock yield, conversion rates, market prices, and logistics demand a move from deterministic optimization. Stochastic programming provides a mathematical framework to make optimal tactical decisions—scheduling production runs, setting inventory targets, and routing logistics—under uncertainty, thereby enhancing the economic viability and resilience of the supply chain. The core thesis is that robust, multi-stage stochastic models are essential for managing the variable nature of biological feedstocks and volatile energy markets, ultimately contributing to sustainable biofuel commercialization.

Core Stochastic Optimization Models for Tactical Planning

At the tactical level, decisions are medium-term (e.g., monthly, quarterly) and must accommodate forecasted uncertainties. Key stochastic programming paradigms include:

- Two-Stage Stochastic Programming with Recourse: First-stage decisions (e.g., biomass procurement contracts, scheduled maintenance) are made before uncertainty is realized. Second-stage recourse actions (e.g., spot market purchases, emergency logistics) respond to the observed scenario.

- Multi-Stage Stochastic Programming: Extends the two-stage model to a sequential decision process over a planning horizon, allowing for adaptive policies as information is progressively revealed.

- Chance-Constrained Programming: Optimizes system performance while requiring that constraints (e.g., meeting demand, maintaining inventory levels) be satisfied with a specified minimum probability.

The objective is typically to minimize the expected total cost or maximize the expected profit across all possible uncertainty scenarios.
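For the chance-constrained paradigm, a single-variable case shows the idea: the smallest inventory target that covers demand with probability at least 1 − α is the (1 − α)-quantile of the demand distribution. The sketch below assumes a discrete scenario set with hypothetical values; the function name is invented for this example, and multi-constraint models would need a solver-based reformulation instead.

```python
def min_target_for_service_level(demand, prob, alpha):
    """Smallest target x with P(demand <= x) >= 1 - alpha over discrete scenarios."""
    cumulative = 0.0
    for d, p in sorted(zip(demand, prob)):
        cumulative += p
        # Small tolerance guards against floating-point round-off in the sum.
        if cumulative >= 1.0 - alpha - 1e-12:
            return d
    raise ValueError("probabilities sum to less than 1 - alpha")

# Hypothetical demand scenarios (M gal) and probabilities.
demand = [50.0, 100.0, 150.0]
prob = [0.3, 0.4, 0.3]

strict_target = min_target_for_service_level(demand, prob, alpha=0.05)
relaxed_target = min_target_for_service_level(demand, prob, alpha=0.35)
print(strict_target, relaxed_target)
```

Tightening the service level from 65% to 95% raises the target from 100 to 150 here, which is the cost-versus-reliability trade-off that the probability threshold controls.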

The performance of stochastic models hinges on accurately characterizing input uncertainties. The following table summarizes the primary stochastic parameters in biofuel supply chains, their typical distributions, and data sources.

Table 1: Key Stochastic Parameters in Biofuel Supply Chain Optimization

| Parameter Category | Specific Parameter | Typical Distribution/Range | Common Data Source |

|---|---|---|---|

| Feedstock Supply | Biomass yield (tons/acre) | Normal (μ, σ) or Lognormal | Historical agricultural data, crop growth models. |

| Feedstock Supply | Moisture content at harvest | Beta or Triangular | Field sensor data, historical weather correlation. |

| Conversion Process | Biofuel conversion yield (gal/ton) | Uniform [min, max] | Pilot-scale experimental data, techno-economic analyses. |

| Conversion Process | Biochemical conversion efficiency | Normal (μ, σ) | Laboratory reactor data under varied conditions. |

| Market & Demand | Biofuel selling price ($/gallon) | Geometric Brownian Motion | Historical energy market data, futures prices. |

| Market & Demand | Biomass feedstock cost ($/ton) | Scenario-based | Regional auction data, contract price histories. |

| Logistics | Transportation cost variance | ± % from baseline | Fuel price indices, carrier rate sheets. |

| Logistics | Equipment downtime | Exponential (MTBF) | Maintenance logs from biorefinery operations. |
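As an illustration of the Geometric Brownian Motion entry in the table, the following sketch simulates monthly selling-price paths with the exact log-normal update. The starting price, drift, and volatility are hypothetical placeholders, not calibrated values, and the function name is invented for this example.

```python
import numpy as np

def simulate_gbm(s0, mu, sigma, n_steps, n_paths, dt, rng):
    """Simulate GBM paths: S_{t+dt} = S_t * exp((mu - sigma^2/2) dt + sigma sqrt(dt) Z)."""
    shocks = rng.standard_normal((n_paths, n_steps))
    log_increments = (mu - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * shocks
    log_paths = np.cumsum(log_increments, axis=1)
    # Prepend a zero column so every path starts exactly at s0.
    log_paths = np.hstack((np.zeros((n_paths, 1)), log_paths))
    return s0 * np.exp(log_paths)

rng = np.random.default_rng(7)
paths = simulate_gbm(
    s0=2.50,        # hypothetical starting price, $/gallon
    mu=0.05,        # hypothetical annual drift
    sigma=0.30,     # hypothetical annual volatility
    n_steps=12,     # monthly steps over one year
    n_paths=20_000,
    dt=1.0 / 12.0,
    rng=rng,
)
```

Each row of `paths` is one price trajectory. Such trajectories can serve as the raw scenario fan before reduction, with drift and volatility estimated from historical price data.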

Experimental & Computational Protocols

Protocol for Scenario Generation and Reduction

Objective: To generate a discrete, manageable set of scenarios representing the possible realizations of uncertain parameters.

- Data Collection: Gather historical time-series data for each stochastic parameter in Table 1.

- Distribution Fitting: Use statistical software (e.g., R, @RISK) to fit probability distributions to each parameter.

- Monte Carlo Simulation: Generate a large fan of individual scenarios (e.g., 10,000) by random sampling from the joint distribution of all parameters, considering correlations (e.g., high yield correlates with low moisture).

- Scenario Reduction: Apply algorithms (e.g., forward selection, backward reduction, k-means clustering) to reduce the scenario set to a representative subset (e.g., 10-50 scenarios) that preserves the stochastic properties of the original fan. The probability of each selected scenario is adjusted accordingly.
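The forward-selection variant of the reduction step, including the probability adjustment, can be sketched in plain Python. This is a greedy illustration under stated assumptions: Euclidean distance between scenarios, a tiny hypothetical one-dimensional scenario set, and a function name invented for this example. It is not the implementation of any particular package.

```python
import math

def forward_select(scenarios, probs, n_keep):
    """Greedily keep n_keep scenarios, then move each dropped scenario's
    probability to its nearest kept scenario."""
    n = len(scenarios)
    dist = [[math.dist(a, b) for b in scenarios] for a in scenarios]
    nearest = [math.inf] * n      # distance from each scenario to the kept set
    kept = []
    for _ in range(n_keep):
        def remaining_error(u):
            # Probability-weighted distance to the kept set if u were added.
            return sum(p * min(nearest[k], dist[k][u]) for k, p in enumerate(probs))
        best = min((u for u in range(n) if u not in kept), key=remaining_error)
        kept.append(best)
        nearest = [min(nearest[k], dist[k][best]) for k in range(n)]
    new_probs = {u: 0.0 for u in kept}
    for k, p in enumerate(probs):
        closest = min(kept, key=lambda u: dist[k][u])
        new_probs[closest] += p
    return [(scenarios[u], new_probs[u]) for u in sorted(kept)]

# Hypothetical biomass supply scenarios (thousand tons) and probabilities.
scenarios = [(10.0,), (12.0,), (50.0,), (52.0,), (90.0,)]
probs = [0.1, 0.3, 0.2, 0.2, 0.2]
reduced = forward_select(scenarios, probs, n_keep=3)
for values, p in reduced:
    print(values, round(p, 3))
```

Here the scenarios at 12, 50, and 90 are kept, and the probabilities of the dropped scenarios at 10 and 52 are added to their nearest neighbours, giving weights 0.4, 0.4, and 0.2.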

Protocol for Solving a Two-Stage Stochastic MIP Model

Objective: To obtain an optimal first-stage tactical plan and evaluate its expected performance.

- Model Formulation: Develop a Mixed-Integer Programming (MIP) model in a modeling language (e.g., Pyomo, GAMS).

- First-Stage Variables: Integer/binary variables for facility activation, contract selection; continuous variables for baseline procurement.

- Second-Stage Variables: Continuous variables for recourse actions (inventory, spot market, routing).

- Constraints: Include mass balance, capacity, and logical constraints.

- Objective: Minimize: (First-Stage Cost) + E[Second-Stage Recourse Cost].

- Implementation: Input the reduced scenario set and their probabilities. Use the Extensive Form (Deterministic Equivalent) to formulate the problem.

- Solution: Employ a commercial solver (e.g., Gurobi, CPLEX) to find the optimal solution. Compute key outputs: expected total cost, Value of the Stochastic Solution (VSS), and Expected Value of Perfect Information (EVPI).
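The extensive form and the EVPI calculation can be sketched with SciPy's `linprog` in place of a commercial solver. The example is a continuous toy problem with hypothetical data: a first-stage biomass contract quantity, spot purchases as recourse, and three demand scenarios. Integer variables such as facility activation are omitted, so this is an LP rather than the MIP described in the protocol.

```python
import numpy as np
from scipy.optimize import linprog

# Hypothetical data: costs in $/ton, biomass requirements in tons.
contract_cost = 60.0
spot_cost = 100.0
demand = np.array([800.0, 1000.0, 1300.0])
prob = np.array([0.3, 0.5, 0.2])

def solve_extensive_form(demand, prob):
    """Solve min c*x + sum_s p_s*q*y_s  s.t.  x + y_s >= d_s,  x, y_s >= 0.

    Variables are ordered [x, y_1, ..., y_K]; x is shared by all scenarios,
    which is how the extensive form enforces non-anticipativity.
    """
    k = len(demand)
    cost = np.concatenate(([contract_cost], prob * spot_cost))
    # linprog expects A_ub @ z <= b_ub, so x + y_s >= d_s becomes -x - y_s <= -d_s.
    a_ub = np.hstack((-np.ones((k, 1)), -np.eye(k)))
    result = linprog(cost, A_ub=a_ub, b_ub=-demand,
                     bounds=[(0, None)] * (k + 1), method="highs")
    return result.x[0], result.fun

# Recourse problem (RP): one contract quantity for all scenarios.
x_rp, rp = solve_extensive_form(demand, prob)

# Wait-and-see (WS): solve each scenario as if it were known in advance.
ws = sum(p * solve_extensive_form(np.array([d]), np.array([1.0]))[1]
         for d, p in zip(demand, prob))

evpi = rp - ws
print(f"contract={x_rp:.0f} t, RP={rp:.0f}, WS={ws:.0f}, EVPI={evpi:.0f}")
```

EVPI is the gap between the stochastic solution and the average cost achievable with perfect foresight. It bounds what better forecasting could be worth, whereas VSS measures what the stochastic model is worth relative to the mean-value plan.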

Figure: Stochastic Optimization Workflow

The Scientist's Toolkit: Essential Research Reagents & Software

Table 2: Essential Toolkit for Stochastic Biofuel Supply Chain Research

| Item Name | Category | Function & Explanation |

|---|---|---|

| GAMS (General Algebraic Modeling System) | Software | High-level modeling language for mathematical optimization; facilitates concise formulation of complex stochastic programs. |

| Gurobi/CPLEX Optimizer | Software | Commercial solvers for linear, mixed-integer, and quadratic programming; essential for solving large-scale stochastic MIP models efficiently. |

| Pyomo (Python Optimization Modeling Objects) | Software/Library | Open-source Python library for defining optimization models; ideal for integrating scenario generation and analysis pipelines. |

| @RISK / Palisade DecisionTools | Software | Excel add-in for performing Monte Carlo simulation, distribution fitting, and scenario analysis on input parameter data. |

| R with sde, mc2d packages | Software/Library | Statistical computing environment for time-series analysis, stochastic differential equation modeling, and advanced scenario generation. |

| Historical Commodity Price Data (e.g., USDA, EIA) | Data | Provides the empirical basis for fitting price and demand distributions; critical for model realism. |

| Techno-Economic Analysis (TEA) Model Outputs | Data | Supplies parameter ranges and correlations for conversion yields, costs, and energy use under uncertainty. |

Figure: Tactical Decisions in a Stochastic Biofuel Chain

Solving the Puzzle: Overcoming Computational Hurdles in Stochastic Programs

Stochastic programming provides a mathematical framework for optimizing decisions under uncertainty, a cornerstone for designing resilient and efficient biofuel supply chains. Key uncertainties include biomass feedstock yield (affected by climate variability), conversion technology efficiency, market price volatility, and policy shifts. Multi-stage stochastic programs model this by constructing a scenario tree representing possible futures. However, the number of scenarios grows exponentially with stages and branching factors—the Curse of Dimensionality. This whitepaper details strategies to manage this intractability.

Quantifying the Curse: Scenario Tree Growth