Building Unbreakable Biofuel Pipelines: Advanced Strategies for Supply Chain Resilience Against Critical Node Failures

Abstract

This article provides a comprehensive analysis for researchers and industry professionals on fortifying biofuel supply chains against node disruptions. We explore the foundational vulnerabilities inherent in biomass-to-fuel pathways, including feedstock production, conversion facilities, and logistics hubs. The article details methodological frameworks for risk assessment, digital twin simulation, and AI-driven contingency planning. We examine common failure modes and present optimization techniques for redundancy, diversification, and agile response. Finally, we review validation case studies and comparative analyses of resilience strategies, culminating in actionable insights for creating robust, adaptable biofuel networks that ensure consistent production and distribution amidst growing global uncertainties.

Understanding the Weak Links: A Deep Dive into Biofuel Supply Chain Vulnerability Hotspots

Technical Support Center: Troubleshooting & FAQs

FAQ 1: What constitutes a "Node Disruption" in a lignocellulosic biofuel supply chain, and how is it quantified for research modeling?

Answer: A node disruption is any event that interrupts the planned flow, quality, or quantity of biomass feedstock between a production farm and the intake gate of a biorefinery. For research modeling, it is quantified through key performance indicators (KPIs), each paired with a severity threshold (Table 1). Common disruptions include drought (affecting yield), machinery breakdown (affecting harvest rate and timing), and logistics failures (affecting delivery timing).

Table 1: Quantitative Metrics for Defining Node Disruption Severity

| Disruption Type | Primary Metric | Measurement Method | Severity Threshold (Example) |
|---|---|---|---|
| Agronomic (e.g., Drought) | Biomass Yield Reduction | Dry ton/acre vs. 5-yr average | >25% loss = High Severity |
| Harvest Operational | Daily Harvest Rate Delay | GPS & telematics from equipment | >48 hr delay = Critical |
| Transport Logistics | Delivery Window Adherence | Weighbridge & timestamp data | >15% missed windows = High Severity |
| Feedstock Quality | Moisture Content / Contaminants | NIR spectroscopy at gate | MC >20% or >5% foreign matter |
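As a rough illustration, the example thresholds in Table 1 can be encoded as a simple rule set. The `NodeObservation` fields and the `classify` function below are hypothetical names. The thresholds are the table's examples, not recommended limits.

```python
# Sketch: label disruption severity using the example thresholds from Table 1.
# Field names are hypothetical; thresholds are the table's examples, not universal limits.
from dataclasses import dataclass

@dataclass
class NodeObservation:
    yield_dry_ton_per_acre: float   # current-season biomass yield
    yield_5yr_avg: float            # 5-year average for the same fields
    harvest_delay_hr: float         # delay against the planned daily harvest rate
    missed_window_frac: float       # share of deliveries outside their window (0-1)
    moisture_pct: float             # lot-average moisture content at the gate
    foreign_matter_pct: float       # lot-average foreign matter at the gate

def classify(obs: NodeObservation) -> dict[str, str]:
    """Return one severity label per disruption type."""
    yield_loss = 1.0 - obs.yield_dry_ton_per_acre / obs.yield_5yr_avg
    ok = "Below threshold"
    return {
        "agronomic": "High" if yield_loss > 0.25 else ok,
        "harvest": "Critical" if obs.harvest_delay_hr > 48 else ok,
        "transport": "High" if obs.missed_window_frac > 0.15 else ok,
        "quality": "Disruption" if obs.moisture_pct > 20 or obs.foreign_matter_pct > 5 else ok,
    }

print(classify(NodeObservation(2.7, 4.0, 12, 0.18, 17.5, 2.0)))
```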

Experimental Protocol for Quantifying Moisture-Based Disruption:

- Sampling: Collect 10 random core samples from 5 distinct bales or piles per delivery lot.

- Preparation: Immediately place samples in sealed, pre-weighed containers. Mill a sub-sample to pass a 2mm screen.

- Drying: Weigh 10 g of milled material (W_wet) into a pre-dried aluminum dish. Dry in a forced-air oven at 105°C for 24 hours.

- Calculation: Re-weigh the dried sample (W_dry). Moisture Content (%) = [(W_wet - W_dry) / W_wet] × 100.

- Analysis: Compare the lot average to the biorefinery's contract specification (e.g., 15%). A lot average exceeding 20% is logged as a quality disruption event.
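Below is a minimal Python sketch of the moisture calculation and lot-level decision described above. It assumes wet-basis moisture, the example 15% contract specification, and the 20% disruption threshold. The function names and sample weights are hypothetical.

```python
# Sketch of the oven-dry moisture calculation and the lot-level disruption flag.
# Thresholds are the protocol's examples; weights in the usage line are invented.
from statistics import mean

def moisture_content(w_wet: float, w_dry: float) -> float:
    """Wet-basis moisture content (%) = (W_wet - W_dry) / W_wet * 100."""
    if w_wet <= 0 or w_dry < 0 or w_dry > w_wet:
        raise ValueError("expect W_wet > 0 and 0 <= W_dry <= W_wet")
    return (w_wet - w_dry) / w_wet * 100.0

def assess_lot(samples: list[tuple[float, float]], spec_pct: float = 15.0,
               disruption_pct: float = 20.0) -> dict:
    """Average per-sample MC, then compare the lot to the spec and the disruption threshold."""
    lot_avg = mean(moisture_content(w, d) for w, d in samples)
    return {
        "lot_mc_pct": round(lot_avg, 2),
        "meets_spec": lot_avg <= spec_pct,
        "quality_disruption": lot_avg > disruption_pct,
    }

# Hypothetical (W_wet, W_dry) pairs in grams for 10 g sub-samples
print(assess_lot([(10.0, 7.8), (10.0, 7.6), (10.0, 7.9)]))
```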

FAQ 2: During a simulated supply disruption, our laboratory-scale pretreatment reactor shows inconsistent sugar yields. What are the key troubleshooting steps?

Answer: Inconsistent yields with simulated disrupted feedstock (e.g., variable moisture or composition) often stem from unrecorded changes in the effective biomass loading or from enzyme inhibition.

Troubleshooting Guide:

- Verify Feedstock Characterization: Re-measure the moisture content of the actual biomass used in each run. Re-calculate the true dry mass loading into the reactor.

- Check for Inhibitors: Analyze the hydrolysate for fermentation inhibitors (e.g., furfural, HMF, acetic acid) via HPLC. Elevated levels may indicate that pretreatment severity was raised to compensate for poor-quality feedstock.

- Standardize Enzymatic Hydrolysis: Run a standardized enzymatic saccharification assay on the pretreated solid separately to decouple pretreatment performance from hydrolysis variables.

- Calibrate Sensors: Verify calibration of in-line pH, temperature, and pressure sensors in the pretreatment reactor.
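Loadings are normally specified on a dry basis, so a shift in feedstock moisture silently changes the real loading. For example, a 10 g charge at 25% moisture contains only 7.5 g of dry biomass. The hypothetical helpers below sketch the correction. They assume wet-basis moisture measured on the same material used in the run.

```python
# Sketch: reconcile as-received (wet) mass with true dry-mass loading.
# Assumes wet-basis moisture content measured on the exact biomass used in the run.
def true_dry_loading(wet_mass_g: float, moisture_pct: float) -> float:
    """Dry mass actually loaded when wet_mass_g of biomass is added."""
    return wet_mass_g * (1.0 - moisture_pct / 100.0)

def as_received_mass(target_dry_g: float, moisture_pct: float) -> float:
    """Wet mass to weigh so that the dry mass loaded equals target_dry_g."""
    if not 0.0 <= moisture_pct < 100.0:
        raise ValueError("moisture_pct must be in [0, 100)")
    return target_dry_g / (1.0 - moisture_pct / 100.0)

# Same 10 g wet charge, two feedstock lots with different moisture
for mc in (10.0, 25.0):
    print(mc, round(true_dry_loading(10.0, mc), 2), round(as_received_mass(9.0, mc), 2))
```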

Experimental Protocol for Standardized Saccharification Assay (adapted from NREL LAP):

- Material: Take 1.0 g (dry weight equivalent) of washed, pretreated biomass.

- Buffer: Add 10 mL of 0.1M sodium citrate buffer (pH 4.8) to a 50 mL Erlenmeyer flask.

- Enzyme Loading: Add cellulase enzyme cocktail at a standard loading of 15 FPU/g glucan. Add β-glucosidase at 30 CBU/g glucan.

- Hydrolysis: Incubate at 50°C with orbital shaking (150 rpm) for 72 hours.

- Analysis: Sample at 0, 24, 48, 72 hrs. Filter (0.2 µm) and analyze supernatant for glucose via HPLC (Aminex HPX-87P column, 80°C, water mobile phase at 0.6 mL/min). Compare glucose yield curves across different disruption simulation batches.
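To compare yield curves across batches, glucose concentrations are commonly converted into percent glucan conversion using the anhydro correction factor (162/180 ≈ 0.9). The sketch below makes two simplifying assumptions. First, the glucan fraction of the pretreated solid is known from a separate compositional analysis. Second, the liquid volume stays constant at 10 mL. The time-course values are invented for illustration.

```python
# Sketch: HPLC glucose (g/L) -> percent glucan conversion.
# Assumptions: glucan fraction known from compositional analysis; liquid volume constant.
ANHYDRO_FACTOR = 162.14 / 180.16   # anhydroglucose / glucose molar mass, ~0.9

def glucan_conversion_pct(glucose_g_per_l: float, volume_ml: float,
                          dry_biomass_g: float, glucan_fraction: float) -> float:
    glucose_g = glucose_g_per_l * volume_ml / 1000.0
    glucan_released_g = glucose_g * ANHYDRO_FACTOR
    glucan_loaded_g = dry_biomass_g * glucan_fraction
    return 100.0 * glucan_released_g / glucan_loaded_g

# Hypothetical time course for one disruption-simulation batch: hours -> glucose (g/L)
batch = {0: 0.4, 24: 28.0, 48: 38.5, 72: 42.0}
for t, g in batch.items():
    print(t, round(glucan_conversion_pct(g, 10.0, 1.0, 0.55), 1))
```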

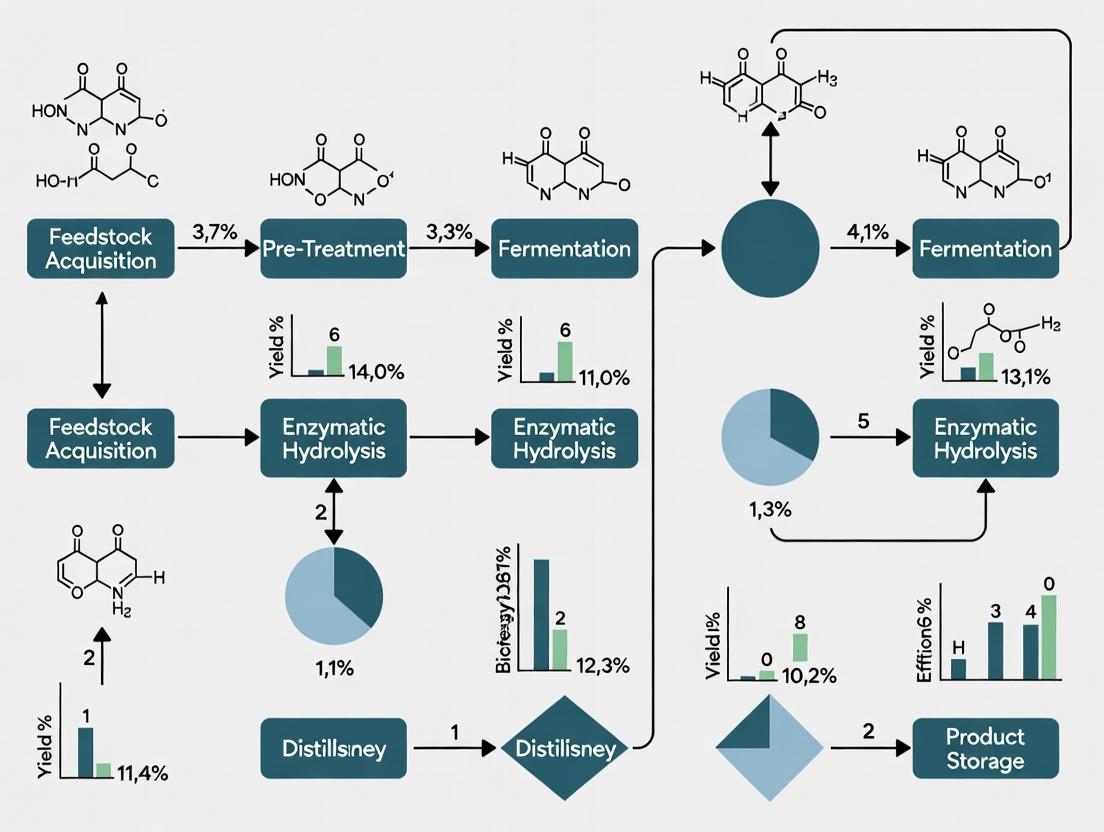

Diagram Title: Node Disruption Impact on Biomass Supply Chain Flow

Diagram Title: Workflow for Isolating Disruption Impact on Sugar Yield

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Node Disruption Simulation Experiments

| Item / Reagent | Function in Experiment | Key Consideration |
|---|---|---|
| Custom Biomass Blends | Simulate compositional variability (e.g., high lignin, high ash) from poor-growing seasons. | Source from characterized feedstocks; maintain a compositional database. |
| Cellulase Enzyme Cocktail (e.g., CTec3) | Standardized hydrolysis of pretreated biomass to measure convertible glucan. | Keep activity constant (FPU/mL) across batches to isolate feedstock variable. |
| Aminex HPX-87P HPLC Column | Separation and quantification of monomeric sugars (glucose, xylose) in hydrolysate. | Requires dedicated system with de-ashing guard column; water mobile phase only. |
| In-Line pH/Temperature Sensors | Monitor and ensure consistency of pretreatment severity factor across runs. | Critical for autohydrolysis or dilute acid pretreatment simulations. |
| Moisture Analyzer (NIR or Oven) | Accurately determine true dry mass of "as-received" disrupted feedstock. | Essential for normalizing all loadings (biomass, enzyme, catalysts). |
| Process Modeling Software (e.g., ASPEN Plus) | Integrate experimental data to model system-wide impacts of a node failure. | Requires robust mass/energy balance parameters from lab-scale data. |

Bio-Network Technical Support Center

Welcome, Researcher. This support center is part of broader thesis research on improving biofuel supply chain resilience to node disruptions. The following guides address common experimental failures that mirror network cascades in biofuel systems, from microbial fermentation to enzymatic catalysis.

Troubleshooting Guides & FAQs

Q1: My consolidated bioprocessing (CBP) fermentation yield has dropped by >60% after a minor contamination event was supposedly cleared. What's happening? A: This mirrors a cascade from a contaminant node. The contaminant itself may have been suppressed, but it likely secreted antimicrobial compounds or organic acids that persist in the broth and continue to inhibit your engineered feedstock-degrading microbes. This is a metabolic pathway disruption.

- Protocol: Diagnosing Persistent Inhibition

- Sample: Aseptically take enough low-yield fermentation broth to supply the test culture below (e.g., at least 10 mL).

- Centrifuge: Spin at 10,000 × g for 5 min. Filter-sterilize (0.22 µm) the supernatant.

- Assay: Use this sterile supernatant as the liquid base for a fresh 10 mL mini-culture of your production strain. Inoculate at standard OD600.

- Control: A parallel mini-culture in fresh, standard media.

- Monitor: Track growth (OD600) and product formation (e.g., via HPLC) over 24-48h. Significant lag or reduced product in the test culture confirms persistent inhibitory compounds.

Q2: Enzymatic hydrolysis efficiency of pretreated biomass collapses when switching to a new batch of a common reagent (e.g., buffer salt). How can I verify the reagent as the single point of failure? A: This is a direct input node failure. Impurities in the reagent, such as trace metal ions, can inhibit or deactivate the enzymes.

- Protocol: Reagent Failure Isolation Test

- Matrix Design: Set up a 2x2 experiment in microplate or tube format.

- Variables: Test the new batch of the suspect reagent vs. the old, functional batch. Test each in the full hydrolysis cocktail and in a buffer-only + enzyme control.

- Run: Perform the hydrolysis reaction on a standardized aliquot of your pretreated biomass (e.g., 1% w/v glucan) under standard conditions (pH, temp, time).

- Analyze: Measure reducing sugar yield. A significant drop only in conditions containing the new batch pinpoints it as the failure node.
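One way to read out the 2x2 matrix is sketched below. It computes the mean yield for each batch within each matrix and implicates the new batch only if it underperforms the old batch in both. The 10% drop threshold, the condition labels, and the replicate values are hypothetical. With real replicates, a two-way ANOVA or t-tests would be a more rigorous basis for a "significant drop".

```python
# Sketch: interpret the 2x2 reagent-isolation matrix (batch x matrix).
# Labels, threshold, and replicate yields are hypothetical.
from statistics import mean

MATRICES = ("full_cocktail", "buffer_enzyme")

def reagent_verdict(yields: dict, drop_threshold: float = 0.10) -> dict:
    """yields maps (batch, matrix) -> replicate reducing-sugar yields, batch in {'old', 'new'}."""
    drops = {}
    for matrix in MATRICES:
        old = mean(yields[("old", matrix)])
        new = mean(yields[("new", matrix)])
        drops[matrix] = (old - new) / old          # relative loss with the new batch
    return {
        "relative_drop": {m: round(d, 3) for m, d in drops.items()},
        # the new batch is implicated only if it underperforms in every matrix containing it
        "new_batch_implicated": all(d > drop_threshold for d in drops.values()),
    }

data = {
    ("old", "full_cocktail"): [8.1, 8.3, 8.0],
    ("new", "full_cocktail"): [5.2, 5.0, 5.4],
    ("old", "buffer_enzyme"): [7.9, 8.0, 8.2],
    ("new", "buffer_enzyme"): [5.1, 5.3, 5.0],
}
print(reagent_verdict(data))
```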

Q3: My co-culture system for direct microbial conversion of biomass becomes unstable, with one strain consistently dying off. How do I debug this inter-species network failure? A: This simulates a node collapse in a mutualistic network. The failure likely stems from metabolite imbalance or resource competition.

- Protocol: Co-Culture Stability Assay

- Separate vs. Together: Grow each strain axenically in the co-culture medium, and grow both strains together in co-culture, all under the same standard conditions.

- Time-Point Sampling: At T=0, 6, 12, 24h, sample the co-culture and the axenic controls.

- Quantify Populations: Use strain-specific selective plates, qPCR with unique primers, or flow cytometry with fluorescent tags to count each population.

- Metabolite Tracking: In parallel, use HPLC/GC to measure key metabolites (e.g., sugars, acids, alcohols, inhibitors).

- Correlate: The data will show whether one strain is over-consuming a critical resource or producing a toxic byproduct at an inhibitory threshold.

Table 1: Impact of Reagent-Grade Purity on Cellulase Hydrolysis Yield

| Reagent Grade (Buffer Salt) | Purity (%) | Mean Sugar Yield (g/L) | Yield Reduction vs. Highest Grade |
|---|---|---|---|
| Molecular Biology Grade | ≥99.5% | 48.7 ± 1.2 | 0% (Baseline) |
| ACS Grade | ≥98.0% | 47.9 ± 1.5 | 1.6% |
| Technical Grade | ≥95.0% | 35.2 ± 3.1 | 27.7% |

Table 2: Cascade Effect of Trace Contaminant in Fermentation

| Contaminant Introduced | Concentration | Final Biofuel Titer (g/L) | Latent Inhibition Confirmed? |
|---|---|---|---|
| None (Control) | 0 | 72.5 ± 2.1 | No |
| Acetic Acid | 50 ppm | 70.1 ± 1.8 | No |
| Lactobacillus sp. | 10³ cells/mL | 25.4 ± 4.3 | Yes |
| Furfural | 10 ppm | 65.3 ± 2.5 | No |

Pathway & Workflow Visualizations

Title: Cascade from Microbial Contamination to Yield Loss

Title: Reagent Failure Isolation Workflow

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Relevance to Network Resilience |
|---|---|
| Defined Hydrolysis Cocktail | Standardized enzyme mix (cellulase, β-glucosidase) to create a baseline for detecting performance cascades from input failures. |
| Sterile-Filtered Supernatant | Used in inhibition assays to identify soluble, persistent inhibitors left behind after a node (contaminant) is removed. |
| Strain-Specific Selective Media | Enables precise quantification of individual populations in a co-culture network to diagnose collapse points. |
| High-Purity Buffer Salts (Molecular Bio Grade) | Minimizes introduction of trace metal ions (e.g., Cu²⁺) that can deactivate enzymatic catalysts, a common hidden failure. |
| Internal Standard (e.g., 2-Furoic Acid) | Added to all HPLC/GC samples for quantitative metabolite tracking; ensures analytical consistency when debugging. |
| Fluorescent Cell Stain (Viability Kit) | Rapidly assesses cell viability in co-cultures or post-stress, visualizing node health in near real-time. |

Technical Support Center: Troubleshooting & FAQs

FAQ 1: Yield Volatility in Lignocellulosic Feedstock Pre-treatment Under Variable Climatic Conditions

- Issue: Inconsistent sugar yields from biomass pre-treatment during experiments simulating drought-stressed crops.

- Root Cause: Altered lignin-to-cellulose ratio and secondary metabolite accumulation in stress-induced feedstock.

- Solution: Implement dynamic pre-treatment. Run a rapid detergent fiber analysis (starting with the Neutral Detergent Fiber, NDF, assay) on each incoming batch. Use the result to adjust acid/alkali concentration and residence time before each run. See Protocol 1.

- Thesis Context: This directly addresses node disruption at the feedstock origin, enhancing resilience by developing adaptive pre-processing protocols.

FAQ 2: Fermentation Inhibition During Simulated Supply Chain Disruption

- Issue: Microbial fermentation halts when experimental batches use alternative substrate blends (e.g., switching from corn to sorghum syrup) due to trace contaminants.

- Root Cause: Feedstock switching forced by geopolitical disruption can introduce heavy metals or pesticides that are absent from the primary substrates, inhibiting enzymatic and microbial activity.

- Solution: Pre-batch testing with a microbial biosensor array (see Toolkit). If inhibition is detected, employ a cationic polymer flocculation step (Protocol 2) to remove contaminants before inoculation.

FAQ 3: Catalyst Deactivation in Catalytic Upgrading Under Intermittent Operation

- Issue: Rapid deactivation of hydrotreating catalysts (e.g., NiMo/Al₂O₃) in experiments simulating pandemic-related staggered biorefinery operation.

- Root Cause: Coke formation and sulfide oxidation during idle periods (“shock-and-stall” cycles) when H₂ flow is interrupted.

- Solution: Implement a controlled passivation protocol during idle phases: purge with 2% O₂ in N₂ at 150°C to form a protective oxide layer. Reactivate with a standard sulfidation procedure (Protocol 3) before restart.

FAQ 4: Data Pipeline Failure from Remote Monitoring Sensors

- Issue: Loss of sensor data (pH, dissolved O₂, temperature) from bench-scale bioreactors during simulated cyber-physical disruption.

- Root Cause: Inconsistent time-stamping and packet loss from edge devices using default configurations.

- Solution: Configure all sensors to use the Network Time Protocol (NTP) with a local server. Implement a store-and-forward algorithm on the edge gateway device to buffer data during network outages.
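A store-and-forward gateway can be sketched as a bounded, ordered buffer. Each reading is timestamped at acquisition and flushed only after the uplink confirms delivery. The `StoreAndForward` class and the `send` callback below are hypothetical. The sketch assumes the gateway clock is already NTP-synchronized. A production design would persist the buffer to disk so that a gateway reboot does not lose queued data.

```python
# Sketch of a store-and-forward buffer for an edge gateway (hypothetical class and transport).
# Readings are timestamped at acquisition (UTC; host clock assumed NTP-disciplined),
# held in a bounded FIFO, and sent in order once the uplink accepts them.
from collections import deque
from datetime import datetime, timezone
from typing import Callable

class StoreAndForward:
    def __init__(self, send: Callable[[dict], bool], max_items: int = 10_000):
        self.send = send                                # returns True on confirmed delivery
        self.buffer: deque = deque(maxlen=max_items)    # oldest readings dropped if full

    def record(self, sensor: str, value: float) -> None:
        self.buffer.append({"sensor": sensor, "value": value,
                            "ts": datetime.now(timezone.utc).isoformat()})
        self.flush()

    def flush(self) -> int:
        """Send buffered readings oldest-first; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break
            self.buffer.popleft()
            sent += 1
        return sent

# Usage with a simulated link that is down for the first two readings
link_up = False
delivered = []

def fake_send(msg: dict) -> bool:
    if link_up:
        delivered.append(msg)
    return link_up

gw = StoreAndForward(fake_send)
gw.record("pH", 5.1)
gw.record("DO", 32.0)
link_up = True
gw.record("temp", 30.2)
print(len(delivered), len(gw.buffer))
```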

Table 1: Impact of Climatic Stressors on Feedstock Composition

| Feedstock | Condition | Lignin Increase (%) | Cellulose Decrease (%) | Reference Sugar Yield (g/g) |
|---|---|---|---|---|
| Switchgrass | Drought Stress | +22.5 | -8.7 | 0.32 |
| Corn Stover | Heat Wave | +15.1 | -10.3 | 0.41 |
| Miscanthus | Flooding | +18.3 | -12.9 | 0.38 |
| Control Baseline | Optimal | 0.0 | 0.0 | 0.51 |

Table 2: Catalyst Performance Degradation in Intermittent Operation

| Catalyst | Continuous Runtime (hrs) | Simulated Staggered Runtime (hrs) | Activity Loss (%) | Regeneration Success (%) |
|---|---|---|---|---|
| NiMo/Al₂O₃ | 500 | 500 (5 cycles) | 45 | 92 |
| CoMo/P-Al₂O₃ | 500 | 500 (5 cycles) | 38 | 95 |
| Pt/ZSM-5 | 500 | 500 (5 cycles) | 60 | 85 |

Experimental Protocols

Protocol 1: Dynamic Acid Pre-treatment for Stress-Adjusted Biomass

- Sample: Mill feedstock to 2mm particles.

- Assay: Perform micro-scale detergent fiber analysis (sequential NDF, ADF, and ADL steps) on a 1 g sub-sample to determine acid detergent lignin (ADL).

- Calculate: Adjust the 1% H₂SO₄ volume using V_adj = V_std × [1 + (L_actual - L_std)/100], where L_actual is the assayed lignin (%), L_std is the standard lignin (%), and V_std is the standard acid volume.

- Treat: Load 10 g biomass in a pressure tube and add the adjusted acid volume. V_std = 100 mL corresponds to the standard 10:1 liquid-to-solid ratio.

- React: Heat at 160°C in an autoclave for a residence time (minutes) calculated as: t = 60 - (L_actual - L_std).

- Neutralize & Recover: Filter, neutralize hydrolysate with CaCO₃, and quantify monomeric sugars (e.g., glucose, xylose) via HPLC.
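Scripting the two Protocol 1 adjustments avoids arithmetic slips during a run. The sketch below implements the formulas exactly as written. The reference lignin value (L_std = 18%) and the assayed value are illustrative. V_std = 100 mL assumes the 10:1 liquid-to-solid ratio on 10 g of biomass with an acid density of about 1 g/mL. The adjusted volume shifts the ratio slightly, to 10.4:1 in this example.

```python
# Sketch of the Protocol 1 adjustments, implemented as the formulas are written.
# L_std and V_std are lab-specific references; the numbers below are illustrative.
def adjusted_acid_volume(v_std_ml: float, l_actual_pct: float, l_std_pct: float) -> float:
    """V_adj = V_std * [1 + (L_actual - L_std) / 100]"""
    return v_std_ml * (1.0 + (l_actual_pct - l_std_pct) / 100.0)

def residence_time_min(l_actual_pct: float, l_std_pct: float) -> float:
    """t = 60 - (L_actual - L_std), in minutes"""
    return 60.0 - (l_actual_pct - l_std_pct)

# 10 g biomass at 10:1 liquid-to-solid -> V_std = 100 mL (assumes ~1 g/mL acid)
v = adjusted_acid_volume(100.0, l_actual_pct=22.0, l_std_pct=18.0)
t = residence_time_min(22.0, 18.0)
print(round(v, 1), t)  # 104.0 56.0
```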

Protocol 2: Cationic Polymer Flocculation for Contaminant Removal

- Sample: Prepare 1L of alternative substrate hydrolysate.

- Test: Use microbial biosensor (see Toolkit) to confirm inhibition.

- Flocculate: Under gentle stirring (100 rpm), add 10 ppm of polyDADMAC (high charge density cationic polymer).

- Settle: Stop stirring, allow to settle for 30 minutes.

- Separate: Carefully decant or filter (0.45μm) the clarified liquid.

- Verify: Re-test clarified substrate with biosensor; proceed to fermentation if inhibition <5%.

Protocol 3: Passivation & Sulfidation of Deactivated Hydrotreating Catalysts

- Passivate (Idle Phase): After stopping H₂ flow, purge the reactor with N₂ for 15 min. Introduce a 2% O₂ in N₂ stream at 2 L/min. Ramp temperature to 150°C at 5°C/min and hold for 2 hours. Cool under N₂ to room temperature.

- Sulfidate (Restart Phase): Load the passivated catalyst. Introduce a sulfiding agent (e.g., 2% DMDS in hexadecane) at 2 mL/min under 5 bar H₂. Ramp temperature from 150°C to 320°C at 3°C/min and hold for 4 hours. Cool to operating temperature under H₂ flow.

Visualizations

Diagram 1: Adaptive pre-treatment for climate-stressed feedstock.

Diagram 2: Mitigation pathway for contamination-induced fermentation failure.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Biofuel Resilience Research |
|---|---|
| Microbial Biosensor Array (e.g., B. subtilis-based) | Rapid, pre-fermentation detection of inhibitory contaminants in alternative feedstocks. |
| Neutral & Acid Detergent Fiber Kits (ANKOM Technology) | Quantifies lignin, cellulose, hemicellulose for dynamic pre-treatment calibration. |
| PolyDADMAC (Poly-diallyldimethylammonium chloride) | High-charge cationic polymer for flocculating anionic contaminants from hydrolysates. |
| Dimethyl Disulfide (DMDS) | Liquid sulfiding agent, easier and safer to handle than H₂S gas, for regenerating hydrotreating catalysts during restart protocols. |
| NTP Server Hardware (Raspberry Pi based) | Local time-server for synchronizing sensor data in offline/remote bioreactor setups. |

Critical Review of Recent High-Profile Biofuel Supply Chain Failures (2023-2024)

This review synthesizes recent, high-profile disruptions in the biofuel supply chain, framing them as critical case studies for research into node resilience. The analysis is presented through the lens of a technical support center, providing structured diagnostics and methodologies applicable to experimental research in supply chain vulnerability and mitigation.

Technical Support Center: Troubleshooting Biofuel Supply Chain Node Failures

FAQ 1: What were the primary causes of feedstock node failure in the U.S. renewable diesel sector in 2023?

- Answer: The primary cause was a Feedstock Availability-Specification Mismatch. Rapid expansion of renewable diesel capacity increased demand for waste oils (UCO, animal fats). This led to supply shortages, price volatility, and documented incidents of feedstock fraud, where virgin oils were mislabeled as "waste," disrupting refinery input specifications and economic models.

FAQ 2: How did geopolitical node disruption in 2023-2024 impact biofuel policy compliance in the EU?

- Answer: The conflict in Ukraine triggered a Policy-Feedstock Cascade Failure. EU biofuel policies heavily relied on Ukrainian rapeseed oil. The disruption forced a rapid re-evaluation of policy targets (like RED III) and triggered emergency measures, exposing critical over-dependence on a single geographical node for policy stability.

FAQ 3: What is a common methodological flaw in assessing "second-generation" biorefinery node resilience?

- Answer: A key flaw is Monoculture Feedstock Modeling. Many techno-economic analyses (TEAs) and life cycle assessments (LCAs) assume a single, consistent lignocellulosic feedstock (e.g., corn stover). Experiments that fail to model seasonal, regional, and compositional variability in feedstock blends will overestimate node reliability and underpredict pretreatment failures.

Experimental Protocol 1: Stress-Testing Feedstock Formulation Blends

Objective: To simulate and quantify the impact of feedstock inconsistency on pretreatment efficiency.

- Prepare Feedstock Variants: Source a primary feedstock (e.g., wheat straw) and create variants by contaminating it with defined percentages (e.g., 5%, 15%, 25% w/w) of a common contaminant (e.g., soil, alternative crop residue, non-target biomass).

- Standardized Pretreatment: Apply a consistent dilute-acid or steam explosion protocol to all variants. Key parameters: temperature (e.g., 160°C, 180°C), residence time (10 min), catalyst concentration.

- Analyze Outputs: Measure and compare:

  - Inhibitor Formation: Quantify furfural and HMF via HPLC.

  - Sugar Yield: Perform enzymatic hydrolysis and measure sugar release, either as total reducing sugars (DNS assay) or as glucose and xylose individually (HPLC).

  - Slurry Rheology: Record viscosity changes post-pretreatment.

- Data Correlation: Correlate contaminant percentage with inhibitor levels and sugar yield reduction to establish failure thresholds.

Experimental Protocol 2: Simulating Logistics Node Disruption via Agent-Based Modeling (ABM)

Objective: To model the cascade effects of a single hub (e.g., port, rail terminal) failure.

- Define Network Nodes: Map a regional supply chain as agents: Farms, Collection Hubs, Primary Processing, Biorefinery.

- Set Parameters: Assign capacity, inventory levels, processing rates, and transportation routes/lags between nodes.

- Introduce Disruption: Select a key hub agent and simulate its failure (capacity reduction to 0%) for a defined period (e.g., 30 model days).

- Run Simulation & Monitor: Track key performance indicators (KPIs): biorefinery idle time, feedstock inventory depletion at all nodes, system-wide cost inflation.

- Test Mitigation: Re-run simulation with alternative routing rules or strategic inventory buffers at specific nodes and compare KPI recovery rates.
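Before building a full agent-based model in a platform such as AnyLogic or NetLogo, the steps above can be prototyped as a simple discrete-time flow model. The sketch below is a simplified stand-in for an ABM, not an agent-based implementation. Farms, one hub, and one biorefinery exchange material daily, and the hub's capacity drops to 0% for 30 model days. The output compares refinery idle days with and without a strategic inventory buffer. All capacities and rates are hypothetical.

```python
# Discrete-time sketch of a single-hub outage (simplified stand-in for a full ABM).
# All capacities, rates, and inventory levels are hypothetical, in tons/day or tons.
def simulate(days: int = 120, outage: range = range(30, 60), refinery_buffer: float = 0.0,
             supply: float = 100.0, hub_cap: float = 120.0, demand: float = 100.0) -> dict:
    farm_stock, hub_stock, ref_stock = 0.0, 0.0, refinery_buffer
    idle_days, farm_peak = 0, 0.0
    for day in range(days):
        farm_stock += supply                        # farms produce daily
        cap = 0.0 if day in outage else hub_cap     # hub capacity -> 0% during the outage
        moved_in = min(farm_stock, cap)             # farms -> hub
        farm_stock -= moved_in
        hub_stock += moved_in
        shipped = min(hub_stock, cap)               # hub -> refinery
        hub_stock -= shipped
        ref_stock += shipped
        used = min(ref_stock, demand)               # refinery consumes what it can
        ref_stock -= used
        if used < demand:
            idle_days += 1                          # KPI: refinery idle (under-supplied) day
        farm_peak = max(farm_peak, farm_stock)      # KPI: upstream inventory backlog
    return {"idle_days": idle_days, "peak_farm_backlog": farm_peak}

# Mitigation test: strategic buffer held at the refinery
for buf in (0.0, 1500.0, 3000.0):
    print(buf, simulate(refinery_buffer=buf))
```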

Table 1: Documented Feedstock Node Failures & Metrics

| Failure Case | Region | Key Metric Pre-Disruption | Key Metric Post-Disruption | Data Source (Example) |
|---|---|---|---|---|
| UCO Supply Shortage | North America | UCO Price: ~$0.40/lb | UCO Price: Peaked at ~$0.70/lb | USDA GAIN Reports, 2023 |
| Animal Fat Divergence | Global | Fat-to-Diesel Spread: ~$0.10/gal | Spread: Widened to >$0.50/gal | Argus Media, BBI Biofuels |
| Brazilian Soybean Flow Shift | South America | EU Import Share: ~65% | EU Import Share: Fell to ~40% | Eurostat, 2024 |

Table 2: Experimental Reagent Solutions for Resilience Research

| Research Reagent / Solution | Function in Experiment |
|---|---|
| Lignocellulosic Feedstock Blends | Provides realistic, heterogeneous material for pretreatment and hydrolysis resilience testing. |
| Standard Inhibitor Mix (e.g., Furfural, HMF, Acetic Acid) | Spikes hydrolysate to simulate stress conditions on fermentative organisms. |
| Agent-Based Modeling Software (e.g., AnyLogic, NetLogo) | Platforms to build digital twins of supply chains for disruption simulation. |
| Tracer Elements (e.g., Sr isotopes in soil) | Used in feedstock authentication studies to detect geographic fraud. |
| Robust Fermentation Strains (e.g., S. cerevisiae PE-2, engineered Z. mobilis) | Tolerant microbial chassis for testing inhibitor-laden hydrolysates. |

Visualizations: Analytical Pathways & Workflows

Title: Feedstock Failure Cascade & Research Intervention

Title: Agent-Based Modeling Workflow for Node Failure

From Theory to Resilience: Methodologies for Modeling and Mitigating Disruption Risks

Quantitative Risk Assessment (QRA) Frameworks for Biofuel Supply Nodes

Technical Support Center: Troubleshooting QRA Model Implementation

FAQs & Troubleshooting Guides

Q1: My QRA model for a biomass preprocessing node yields an improbably high Mean Risk Score (>90). What are the primary failure points to check? A: An inflated Mean Risk Score typically indicates an issue with risk parameter quantification or aggregation logic. Follow this diagnostic protocol:

- Check Input Data Scales: Ensure all parameter scores (e.g., likelihood, consequence severity) are normalized to a consistent scale (e.g., 1-5 or 1-10). Mismatched scales (e.g., mixing 1-5 likelihood with 1-100 consequence) cause calculation errors.

- Verify Consequence Weights: Validate the weight assignments for different consequence categories (Operational, Financial, Safety, Environmental). The sum of all weights must equal 1.0. Incorrect weighting disproportionately skews results.

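- Inspect Risk Aggregation Formula: A common formula is Risk = Likelihood × Σ_i (Weight_i × Consequence_i). Confirm the formula is correctly implemented in your software (e.g., Python, MATLAB, Excel). With weights summing to 1.0, this product cannot exceed 25 on 1-5 scales (100 on 1-10 scales). A mean score above 90 therefore means that nearly every input sits at the top of a 1-10 scale, or that the scales are mismatched.

- Re-calibrate Consequence Scores: Use the reference table below to ensure consequence estimates are realistic and evidence-based.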
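The aggregation formula and the input checks described in this answer (consistent scales, weights summing to 1.0) can be combined into one guarded function. This is a sketch with hypothetical names. It assumes a 1-5 scale and the four consequence categories listed above, and the example scores and weights are illustrative.

```python
# Sketch: Risk = Likelihood x sum(w_i x C_i), with scale and weight checks.
# Category names, the 1-5 scale, and the example inputs are illustrative assumptions.
import math

CATEGORIES = ("operational", "financial", "safety", "environmental")

def node_risk(likelihood: float, consequences: dict[str, float], weights: dict[str, float],
              scale: tuple[float, float] = (1.0, 5.0)) -> float:
    lo, hi = scale
    total_w = sum(weights[c] for c in CATEGORIES)
    if not math.isclose(total_w, 1.0, abs_tol=1e-9):
        raise ValueError(f"weights sum to {total_w:.3f}, expected 1.0")
    checks = [("likelihood", likelihood)] + [(c, consequences[c]) for c in CATEGORIES]
    for name, value in checks:
        if not lo <= value <= hi:                 # catches mixed 1-5 / 1-100 inputs
            raise ValueError(f"{name}={value} is outside the {lo:g}-{hi:g} scale")
    return likelihood * sum(weights[c] * consequences[c] for c in CATEGORIES)

w = {"operational": 0.4, "financial": 0.3, "safety": 0.2, "environmental": 0.1}
c = {"operational": 4, "financial": 3, "safety": 2, "environmental": 1}
print(node_risk(3, c, w))
```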

Table 1: Standardized Consequence Severity Scales for Biofuel Preprocessing Nodes

| Severity Level | Operational (Production Delay) | Financial (Loss USD) | Safety (TRIR*) | Environmental (CO2-eq kg) |
|---|---|---|---|---|
| 1 (Negligible) | < 24 hours | < 10,000 | 0 | < 100 |
| 2 (Low) | 1-7 days | 10,000 - 50,000 | 0 - 0.5 | 100 - 1,000 |
| 3 (Moderate) | 1-2 weeks | 50,001 - 200,000 | 0.6 - 2.0 | 1,001 - 10,000 |
| 4 (High) | 2-4 weeks | 200,001 - 500,000 | 2.1 - 5.0 | 10,001 - 50,000 |
| 5 (Critical) | > 1 month | > 500,000 | > 5.0 | > 50,000 |

*TRIR: Total Recordable Incident Rate per 200,000 work hours.

Q2: During disruption simulation, how do I validate the "Time-to-Recovery" (TTR) probability distribution for a feedstock storage node? A: Validating TTR distributions requires historical data or expert elicitation. Use this experimental protocol:

Protocol: Empirical TTR Distribution Calibration

- Data Collection: Gather historical maintenance logs, incident reports, or supplier reliability data for the specific node (e.g., silo failure, conveyor belt breakdown).

- Categorize Disruptions: Classify events by root cause (Mechanical, Logistic, Biological (e.g., feedstock spoilage), Regulatory).

- Calculate TTR: For each event, record the downtime in hours from onset to full operational recovery.

- Statistical Fitting: Input the TTR data into statistical software (e.g., R, @Risk). Fit the data to common distributions (Weibull, Lognormal, Exponential) using Maximum Likelihood Estimation (MLE).

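- Goodness-of-Fit Test: Apply the Kolmogorov-Smirnov or Anderson-Darling test to each candidate distribution. Discard candidates rejected at p < 0.05. Among the rest, select the best fit for your Monte Carlo simulation: the highest p-value or, equivalently for the same test and sample, the lowest test statistic. Because the parameters were estimated from the same data, standard K-S p-values are overstated; a parametric bootstrap gives more reliable p-values.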
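The fitting and goodness-of-fit steps can be sketched with the Python standard library alone. The sketch uses closed-form maximum likelihood estimates for the exponential and lognormal distributions and computes the Kolmogorov-Smirnov D statistic for each. The downtime values are invented. A Weibull fit needs a numerical MLE (for example `scipy.stats.weibull_min.fit`). P-values should come from a dedicated library or a parametric bootstrap, not from D alone.

```python
# Stdlib sketch: MLE fits for exponential and lognormal TTR models + K-S D statistic.
# TTR values are hypothetical; Weibull is omitted because its MLE needs a numerical solver.
import math
from statistics import NormalDist

def fit_exponential(x):
    rate = 1.0 / (sum(x) / len(x))                        # MLE: 1 / sample mean
    return lambda t: 1.0 - math.exp(-rate * t)

def fit_lognormal(x):
    logs = [math.log(v) for v in x]
    mu = sum(logs) / len(logs)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in logs) / len(logs))  # MLE uses 1/n
    nd = NormalDist(mu, sigma)
    return lambda t: nd.cdf(math.log(t))

def ks_statistic(x, cdf):
    """Max distance between the empirical CDF and a fitted CDF (distinct values assumed)."""
    xs = sorted(x)
    n = len(xs)
    return max(max((i + 1) / n - cdf(v), cdf(v) - i / n) for i, v in enumerate(xs))

ttr_hours = [3.5, 5.0, 6.2, 8.0, 9.5, 12.0, 14.0, 18.5, 26.0, 41.0, 55.0, 90.0]
fits = {"exponential": fit_exponential(ttr_hours), "lognormal": fit_lognormal(ttr_hours)}
d_stats = {name: ks_statistic(ttr_hours, cdf) for name, cdf in fits.items()}
best = min(d_stats, key=d_stats.get)                      # smallest D = closest fit
print({k: round(v, 3) for k, v in d_stats.items()}, "->", best)
```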

Q3: The risk interdependence matrix between nodes is causing cyclical calculation errors. How do I resolve this? A: Cyclical errors occur when Node A's risk depends on Node B, and Node B's risk simultaneously depends on Node A. Implement the following solution:

- Map Dependencies: Clearly diagram all node dependencies (see Diagram 1).

- Apply a Layering Algorithm: Use an iterative process. In the first simulation run, calculate risks for source nodes (those with no upstream dependencies) using base values. In the next iteration, use these calculated values to assess risks for dependent downstream nodes.

- Use a Solver Tool: If the interdependencies are linear, solve them simultaneously as a system of linear equations (e.g., numpy.linalg.solve in Python, or the backslash operator in MATLAB). Otherwise, use fixed-point iteration and stop once the change between successive iterations falls below a threshold (e.g., ΔRisk < 0.01).
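The iterative approach can be sketched as a fixed-point iteration on a hypothetical linear dependency model. In this model, each node's risk equals its base risk plus weighted contributions from the nodes it depends on. The model form, node names, and weights are assumptions for illustration. The iteration converges when the update is a contraction, for example when each node's non-negative dependency weights sum to less than 1. For a linear model like this one, solving (I - W)r = base directly with `numpy.linalg.solve` gives the same answer.

```python
# Sketch: resolve cyclic node-risk dependencies by fixed-point iteration.
# Hypothetical model: risk[n] = base[n] + sum(w * risk[m] for each dependency m of n).
def solve_interdependent_risk(base: dict[str, float], deps: dict[str, dict[str, float]],
                              tol: float = 0.01, max_iter: int = 1000) -> dict[str, float]:
    risk = dict(base)                                        # iteration 0: base values
    for _ in range(max_iter):
        new = {n: base[n] + sum(w * risk[m] for m, w in deps.get(n, {}).items())
               for n in base}
        if max(abs(new[n] - risk[n]) for n in base) < tol:   # delta-risk below threshold
            return new
        risk = new
    raise RuntimeError("did not converge; check that dependency weights sum to < 1")

base = {"farm": 2.0, "hub": 3.0, "refinery": 4.0}
deps = {"hub": {"farm": 0.5, "refinery": 0.2},    # hub <-> refinery form a cycle
        "refinery": {"hub": 0.4}}
print({k: round(v, 2) for k, v in solve_interdependent_risk(base, deps).items()})
```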

Key Experiment Protocol: Monte Carlo Simulation for Node Resilience

Objective: Quantify the probabilistic risk exposure of a biofuel conversion (e.g., transesterification) node to multi-hazard disruptions. Methodology:

- Define Model Variables: Identify key input variables with uncertainty: Feedstock Quality (acid value), Catalyst Concentration, Process Temperature, Mechanical Availability.

- Assign Probability Distributions:

- Feedstock Quality: Normal distribution (Mean=2.0 mg KOH/g, SD=0.5).

- Catalyst Load: Triangular distribution (Min=0.5%, Mode=1.0%, Max=1.5% wt).

- Mechanical Availability: Bernoulli distribution (Availability=0.95).

- Establish Risk Function: Create a computational function that outputs a risk score based on the input variables. For example: IF (Feedstock_Quality > 3.0 OR Availability == 0) THEN Consequence = 4 ELSE Consequence = 1.

- Run Simulation: Execute ≥10,000 iterations using software such as Palisade @Risk or Python (NumPy/SciPy).

- Analyze Output: Generate a histogram and cumulative distribution function (CDF) of output risk scores. Calculate the Value at Risk (VaR) at 95% confidence.
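The Monte Carlo steps above can be sketched with Python's standard `random` module, using the distributions listed in the protocol and the example IF/THEN rule. Two simplifications are assumed: each sampled consequence score is treated directly as the risk score, and VaR95 is read as the 95th percentile of that score. Catalyst load is sampled to mirror the protocol, even though the example rule does not use it.

```python
# Stdlib Monte Carlo sketch of the transesterification-node example.
# Scoring risk as the sampled consequence and VaR95 as its 95th percentile are assumptions.
import random
from statistics import mean

def sample_consequence(rng: random.Random) -> int:
    acid_value = rng.gauss(2.0, 0.5)                  # feedstock quality, mg KOH/g
    catalyst_wt_pct = rng.triangular(0.5, 1.5, 1.0)   # (low, high, mode); unused by this rule
    available = rng.random() < 0.95                   # mechanical availability ~ Bernoulli(0.95)
    return 4 if acid_value > 3.0 or not available else 1

def run(n: int = 10_000, seed: int = 42) -> dict:
    rng = random.Random(seed)
    scores = sorted(sample_consequence(rng) for _ in range(n))
    var95 = scores[int(0.95 * n) - 1]                 # 95th-percentile risk score
    return {"mean": mean(scores), "p_high": scores.count(4) / n, "VaR95": var95}

print(run())
```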

Table 2: Research Reagent Solutions for Biofuel Node QRA

| Item/Category | Function in QRA Experiment |
|---|---|
| Process Historian Data (e.g., OSIsoft PI) | Provides time-series operational data for parameter distribution fitting. |
| Expert Elicitation Protocol (Cooke's Method) | Structurally captures subjective probability estimates from domain experts. |
| Risk Aggregation Software (e.g., @Risk, ModelRisk) | Enables advanced probabilistic modeling and Monte Carlo simulation. |
| Supply Chain Mapping Tool (e.g., anyLogistix, R/igraph) | Visualizes node linkages and dependencies for interdependence analysis. |
| Failure Mode Database (e.g., FMEA, historical incident logs) | Serves as a baseline for identifying likelihood and consequence of events. |

Title: Biofuel Supply Node Disruption Cascades

Title: QRA Framework Workflow for Supply Nodes

Technical Support Center: Troubleshooting Digital Twin Experiments

This support center provides assistance for researchers conducting simulation experiments for biofuel supply chain resilience under node disruptions. The FAQs and guides below address common technical and methodological issues.

FAQs & Troubleshooting Guides

Q1: My agent-based simulation (ABS) model shows extreme volatility in biofuel yield output when a pre-processing facility node fails. How can I determine if this is a realistic scenario or a model artifact?

A: This is a common calibration issue. First, validate your input parameter distributions.

- Check Input Data: Ensure the feedstock variability (e.g., moisture content, carbohydrate yield) data fed into the digital twin's "pre-processing" module uses empirically derived distributions, not uniform or assumed normal distributions. Resample from your real-world data set.

- Sensitivity Analysis Protocol: Perform a global sensitivity analysis using the Sobol method to rank influential parameters.

  - Method: Define a bounded range for each key input parameter (e.g., downtime distribution, buffer inventory levels, alternative routing capacity).

  - Run the simulation >1000 times using a quasi-random sequence to explore the parameter space.

  - Calculate the first-order (main effect) and total-order (including interactions) indices for the variance of total system yield.

- Interpretation: If the failure node's downtime parameter has a high total-order index (e.g., > 0.7), the volatility is likely realistic and indicates a critical vulnerability. If the index is lower, check the interaction between routing logic and inventory policy.
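A Sobol analysis can be prototyped before it is wired to the full simulation. The sketch below estimates first-order indices with the Saltelli (2010) estimator and total-order indices with the Jansen estimator. For brevity it uses pseudo-random rather than quasi-random sampling. `toy_model` is a hypothetical additive stand-in for one simulation run, chosen because its indices are known (0.8, 0.2, 0.0). In practice, a maintained package such as SALib handles quasi-random Saltelli sampling and confidence intervals.

```python
# Sketch: Monte Carlo Sobol indices (Saltelli 2010 first-order, Jansen total-order).
# Pseudo-random sampling; `toy_model` is a hypothetical stand-in for one simulation run.
import random
from statistics import fmean, pvariance

def sobol_indices(model, bounds, n=20_000, seed=1):
    rng = random.Random(seed)
    k = len(bounds)

    def draw():
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    A = [draw() for _ in range(n)]
    B = [draw() for _ in range(n)]
    fA = [model(x) for x in A]
    fB = [model(x) for x in B]
    var = pvariance(fA + fB)
    first, total = [], []
    for i in range(k):
        # AB_i: rows of A with column i taken from B
        fABi = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        first.append(fmean(fb * (fab - fa) for fa, fb, fab in zip(fA, fB, fABi)) / var)
        total.append(0.5 * fmean((fa - fab) ** 2 for fa, fab in zip(fA, fABi)) / var)
    return first, total

def toy_model(x):
    downtime, buffer_inv, reroute_cap = x     # hypothetical scaled inputs in [0, 1]
    return 4.0 * downtime + 2.0 * buffer_inv + 0.0 * reroute_cap

S1, ST = sobol_indices(toy_model, [(0, 1), (0, 1), (0, 1)])
print([round(s, 2) for s in S1], [round(s, 2) for s in ST])
```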

Q2: When integrating real-time IoT sensor data (e.g., from bioreactor vats) into the simulation for live updating, the digital twin becomes unresponsive. What are the potential bottlenecks?

A: This indicates a data pipeline or model architecture issue.

- Troubleshooting Steps:

- Throttle Data Ingestion: Implement a data queue (e.g., Apache Kafka) and an "update threshold" to batch changes. The twin should not recalculate on every sensor tick.

- Check Fidelity vs. Speed: Your "live" twin may be using an overly high-fidelity model. Implement a multi-fidelity approach: use a simple regression model for live updates and trigger the high-fidelity, physics-based simulation only when key thresholds are breached.

- Resource Monitoring: Monitor CPU (compute for agent logic) and RAM (for holding state of all entities). Profile your code to identify if the bottleneck is in the data assimilation step or the simulation logic itself.

Q3: How do I quantitatively validate that my disruption scenarios are producing statistically significant insights for resilience planning?

A: Validation requires moving beyond single-scenario reporting to rigorous scenario ensemble analysis.

- Experimental Protocol:

- Define a Resilience Metric: For example, System Throughput Recovery, STR(T) = (Actual Post-Disruption Throughput at T) / (Planned Throughput at T).

- Create Scenario Matrix: Systematically define scenarios across two axes: Disruption Severity (e.g., 10%, 50%, 100% capacity loss) and Disruption Duration (e.g., 24h, 1 week, 1 month).

- Run Ensemble Simulations: Execute a minimum of 30 replications per scenario cell with different random seeds to account for stochasticity.

- Statistical Test: Perform an Analysis of Variance (ANOVA) on the STR results to test whether the differences observed between scenarios are greater than the differences within the replications of a single scenario.

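The STR metric and the ANOVA step described above reduce to a few lines of standard-library Python. The sketch computes only the one-way ANOVA F statistic and its degrees of freedom. For the p-value, `scipy.stats.f_oneway(*groups)` returns the same F together with a p-value.

```python
from statistics import mean


def str_metric(actual_throughput, planned_throughput):
    """System Throughput Recovery at time T (1.0 = fully recovered)."""
    return actual_throughput / planned_throughput


def one_way_anova_f(groups):
    """Return (F, (df_between, df_within)) for a one-way ANOVA.

    Each element of `groups` is the list of STR values from the replications
    of one scenario cell (e.g. 30 seeds for "50% loss, 1 week").
    """
    group_means = [mean(g) for g in groups]
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, (df_between, df_within)
```

A large F relative to the critical value for the given degrees of freedom means the scenario cells differ more than replication noise alone would explain.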

Quantitative Data Summary: Example Scenario Output

Table 1: Simulated Impact of Pre-processing Plant Disruption on System-Wide Biofuel Yield (Annual Basis)

| Disruption Duration | Capacity Loss | Mean Yield Loss (%) | Std. Deviation (%) | 95% Confidence Interval |
|---|---|---|---|---|
| 1 Week | 50% | 12.5 | 1.8 | [11.8, 13.2] |
| 1 Week | 100% | 48.3 | 3.5 | [47.9, 50.1] |
| 1 Month | 50% | 51.2 | 4.1 | [50.5, 52.7] |
| 1 Month | 100% | 92.7 | 2.9 | [91.6, 93.5] |

Table 2: Key Model Parameters and Data Sources for Validation

| Parameter Category | Example Parameter | Source for Validation Data |
|---|---|---|
| Feedstock Supply | Seasonal yield variability | Historical agronomic data (USDA reports) |
| Node Performance | Mean Time Between Failures (MTBF) | Maintenance logs from partner facilities |
| Transportation | Route-specific delay distributions | GPS logistics data, weather history |
| Market | Biofuel price volatility | EIA (Energy Information Administration) published data |

Experimental Protocol: Building and Validating a Supply Chain Digital Twin

Title: Protocol for a Multi-Method Digital Twin of a Lignocellulosic Biofuel Supply Chain.

Objective: To create a validated digital twin capable of simulating disruption impacts from feedstock source to biorefinery.

Methodology:

System Boundary & Entity Definition:

- Define the physical scope: Feedstock Farms → Collection Hubs → Pre-processing Facilities → Biorefineries → Distribution.

- Model key entities: Trucks (agents), Facility Nodes (state machines), Feedstock Batches (discrete items).

Model Integration (Hybrid Approach):

- Agent-Based Simulation (ABS): Code in AnyLogic or Python/Mesa to simulate autonomous decision-making (e.g., truck rerouting, facility scheduling).

- Discrete-Event Simulation (DES): Model bulk processes within facilities (e.g., queue for grinding, hydrolysis batch time).

- System Dynamics (SD): Embed a stock-and-flow model for system-wide inventory buffers and price feedback loops.

Data Integration & Calibration:

- Historical Calibration: Use 2 years of operational data (throughput, delays) to tune model parameters via maximum likelihood estimation.

- Real-time Link: Establish an OPC-UA or REST API connection to live IoT data streams (e.g., silo levels, reactor temperatures) for selected nodes.
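As one concrete case of the maximum likelihood calibration step, route delays can be modeled as log-normal. That distributional choice is an assumption here, not a prescription from the protocol. For a log-normal, the MLE has a closed form: the mean and the population (1/n) standard deviation of the log-delays.

```python
import math
import random


def fit_lognormal_mle(samples):
    """Closed-form MLE of log-normal (mu, sigma) from strictly positive samples."""
    logs = [math.log(x) for x in samples]
    mu = sum(logs) / len(logs)
    # The MLE divides by n, not n - 1
    sigma = math.sqrt(sum((v - mu) ** 2 for v in logs) / len(logs))
    return mu, sigma


def sample_delay(mu, sigma, rng):
    """Draw one calibrated delay (same units as the historical data) for the simulation."""
    return rng.lognormvariate(mu, sigma)
```

The fitted `(mu, sigma)` pair would then parameterize delay draws inside the twin, for example `sample_delay(mu, sigma, random.Random(seed))`.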

Disruption Scenario Injection:

- Programmatically disable or degrade nodes (e.g., set throughput to zero) according to the scenario matrix (see Table 1).

- Implement proposed resilience strategies (e.g., dynamic rerouting, buffer activation) as alternative experimental arms.
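A minimal daily-step sketch of scenario injection follows, assuming a single pre-processing node that feeds one biorefinery. During the disruption window, the node's capacity drops to a fraction of nominal. An optional strategic buffer (the "buffer activation" arm) covers shortfalls. Buffer replenishment is not modeled. The function name, parameters and numbers are hypothetical; a full twin would implement this inside AnyLogic or Mesa.

```python
def simulate_service_level(days, demand, capacity, disruption_start,
                           disruption_days, capacity_loss, buffer_stock=0.0):
    """Return the share of daily demand met on time (System Service Level)."""
    met_total = 0.0
    buffer = buffer_stock
    for day in range(days):
        in_window = disruption_start <= day < disruption_start + disruption_days
        # Scenario injection: degrade (capacity_loss < 1) or zero out (== 1) throughput
        available = capacity * (1.0 - capacity_loss) if in_window else capacity
        shipped = min(available, demand)
        # Resilience arm: draw down the strategic buffer to cover any shortfall
        from_buffer = min(buffer, demand - shipped)
        buffer -= from_buffer
        met_total += shipped + from_buffer
    return met_total / (demand * days)
```

Running the function once with `buffer_stock=0` and once with a positive buffer gives the two experimental arms. Each arm is then replicated across seeds once stochastic inputs are added.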

Output Analysis & Validation:

- Primary Output: System Service Level (% of demand met on time).

- Compare simulation outputs against known historical disruption events for face validity.

- Use statistical comparison (t-tests) of key outputs between different resilience strategies to determine significance.


Visualizations

Digital Twin Development and Scenario Testing Workflow

Digital Twin Information Flow Upon a Disruption Event

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools & Platforms for Biofuel SC Digital Twin Research

| Tool/Reagent Name | Category | Function in Experiments |
|---|---|---|
| AnyLogic University | Simulation Software | Primary platform for building hybrid (ABS+DES+SD) models with GIS integration. |
| Python (Mesa, SimPy, Pandas) | Programming / Libraries | Custom agent-based modeling, data analysis, and automation of scenario batches. |
| SQL / Time-Series Database (e.g., InfluxDB) | Data Management | Storage and querying of historical operational data and real-time IoT sensor streams. |
| Sobol Sequence Generators | Statistical Library | Creates quasi-random input sequences for efficient global sensitivity analysis. |
| G*Power or Similar | Statistical Software | Used a priori to calculate the required number of simulation replications for adequate statistical power. |
| OPC-UA / REST API | Integration Protocol | Standardized communication layer to connect the simulation model with live data sources. |
| High-Performance Computing (HPC) Cluster | Compute Infrastructure | Enables running large-scale scenario ensembles (1000s of runs) in parallel for robust results. |

AI and Machine Learning in Predictive Analytics for Precursor Failure Detection

Troubleshooting Guides & FAQs

Q1: Our ML model for predicting bioreactor sensor drift is experiencing rapid overfitting on a small, imbalanced dataset. What are the most effective strategies to mitigate this specific to time-series sensor data? A1: For time-series sensor data in bioreactor monitoring, employ the following:

- Temporal Data Augmentation: Use techniques like window warping, jittering (adding small noise), and scaling on the training set only to artificially increase diversity without breaking temporal dependencies.

- Algorithm Choice: Prefer models that can be strongly regularized on sequential data, such as Long Short-Term Memory (LSTM) networks with dropout layers between LSTM cells and fully connected layers. Gradient Boosting models (XGBoost, LightGBM) with strong regularization (high min_child_weight, low max_depth) are also effective.

- Validation Strategy: Implement Blocked Time Series Cross-Validation instead of random K-Fold. This prevents data leakage by ensuring the validation set is always chronologically after the training set.

- Class Imbalance: For failure prediction, use Synthetic Minority Over-sampling Technique for Regression (SMOTER) if forecasting a continuous drift, or assign differential weights to failure/non-failure sequences in the loss function.
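Two of the strategies above, jittering/scaling augmentation and chronological validation, are sketched below in standard-library Python. Window warping is not shown. The split generator uses expanding training windows, similar in spirit to scikit-learn's `TimeSeriesSplit`, so every validation block lies strictly after its training data. Noise levels and split counts are placeholders.

```python
import random


def jitter(series, sigma, rng):
    """Add small Gaussian noise to every reading (apply to the training set only)."""
    return [x + rng.gauss(0.0, sigma) for x in series]


def scale(series, sigma, rng):
    """Multiply a whole window by one random factor near 1.0."""
    factor = rng.gauss(1.0, sigma)
    return [x * factor for x in series]


def blocked_time_series_splits(n_obs, n_splits, gap=0):
    """Yield (train_idx, val_idx) with each validation block after its training data.

    `gap` drops observations between train and validation to limit leakage
    from overlapping sensor windows.
    """
    block = n_obs // (n_splits + 1)
    for k in range(1, n_splits + 1):
        train_end = k * block
        val_start = train_end + gap
        val_end = min(val_start + block, n_obs)
        yield list(range(train_end)), list(range(val_start, val_end))
```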

Q2: When integrating heterogeneous data (e.g., spectroscopic, metabolic rates, feedstock quality) for precursor failure prediction, how do we handle missing data and scale features without losing biological interpretability? A2: A robust pipeline is required:

- Missing Data: For sensor streams, forward-fill short gaps (<3 readings) and use linear interpolation for longer gaps. For missing batch data (e.g., feedstock lot analysis), encode the value as a separate "missing" category so the model can learn whether missingness correlates with failure.

- Scaling & Fusion: Use different scaling per data type. Z-score normalization for continuous sensor data. Min-Max scaling for bounded readings (e.g., 0-100% saturation). Keep categorical data one-hot encoded. Use early fusion (concatenating scaled features) for simpler models or late fusion (training separate sub-models on each data type and combining predictions) for complex ensembles to preserve traceability.
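A standard-library sketch of the missing-data and scaling rules follows. It reads the gap rule as "forward-fill gaps of up to two readings, interpolate longer interior gaps", which is an interpretation of the text. `None` marks a missing reading. Min-max scaling here uses the sensor's physical limits, for example 0 to 100% saturation, rather than the observed min and max; that choice is also an assumption.

```python
from statistics import mean, pstdev


def fill_sensor_gaps(values, max_ffill=2):
    """Forward-fill gaps of up to `max_ffill` readings; linearly interpolate longer interior gaps.

    Leading gaps and long trailing gaps are left as None.
    """
    out = list(values)
    i, n = 0, len(out)
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i
        while i < n and out[i] is None:
            i += 1
        gap_len = i - start                     # the gap occupies indices [start, i)
        left = out[start - 1] if start > 0 else None
        right = out[i] if i < n else None
        if left is None:
            continue                            # nothing to carry forward
        if gap_len <= max_ffill:
            out[start:i] = [left] * gap_len
        elif right is not None:
            step = (right - left) / (gap_len + 1)
            out[start:i] = [left + step * (k + 1) for k in range(gap_len)]
    return out


def z_score(values):
    """Z-score normalization for unbounded continuous sensor data."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def min_max(values, lo, hi):
    """Scale a bounded reading using its physical limits (lo, hi)."""
    return [(v - lo) / (hi - lo) for v in values]
```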

Q3: The SHAP values for our ensemble tree model are highly volatile between training runs, making feature importance for root-cause analysis unreliable. How can we stabilize them? A3: SHAP instability often stems from model instability or high feature correlation.

- Stabilize the Model: Significantly increase n_estimators in your Random Forest or XGBoost model and use a fixed random seed. Ensure you are using a sufficient sample size for the SHAP calculation (an approximate or tree explainer for large datasets).

- Address Correlation: Use the partition explainer from the shap library, which groups correlated features together, providing a more stable and accurate attribution of importance to groups of related sensors/features.

- Aggregate Results: Calculate SHAP values across multiple model runs (with different seeds) and report the mean importance, with confidence intervals.
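The aggregation step can be sketched independently of the SHAP library. The sketch assumes each training run has already been reduced to a dict mapping each feature to its mean absolute SHAP value. The interval uses a normal approximation; with only a handful of seeds, a t critical value would be more appropriate.

```python
from statistics import mean, stdev


def aggregate_importance(runs, z=1.96):
    """Mean and approximate 95% CI of per-feature importance across seeded runs.

    `runs`: list of dicts {feature: mean |SHAP value|}, one dict per random seed.
    Returns {feature: (mean, lower, upper)} sorted by mean importance, descending.
    """
    summary = {}
    for feature in runs[0]:
        vals = [r[feature] for r in runs]
        m = mean(vals)
        half = z * stdev(vals) / len(vals) ** 0.5 if len(vals) > 1 else 0.0
        summary[feature] = (m, m - half, m + half)
    return dict(sorted(summary.items(), key=lambda kv: kv[1][0], reverse=True))
```

Features whose intervals overlap heavily should not be ranked against each other in a root-cause report.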

Q4: In deploying a real-time failure prediction model to a pilot-scale biorefinery, what is the optimal method to retrain the model with new streaming data without catastrophic forgetting? A4: Implement an Online Learning or Continuous Training Pipeline:

- Schedule: Retrain the model nightly or weekly on a sliding window of the most recent 3-6 months of data, not the entire history.

- Architecture: Consider an online learning algorithm like Stochastic Gradient Descent (SGD) or leverage cloud ML services (e.g., Vertex AI, SageMaker) that support automated retraining pipelines.

- Validation Gate: Before deploying the retrained model, validate its performance against a held-out recent period and an "old" period to ensure it hasn't forgotten significant past failure modes. Only promote the model if it passes both tests.
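The sliding window and the two-period validation gate can be expressed as two small functions. The 30-day month, the metric (e.g., recall on the failure class) and the tolerance are assumptions chosen for illustration.

```python
from datetime import timedelta


def sliding_window(records, end, months=6):
    """Keep (timestamp, ...) records from roughly the last `months` months (30-day months assumed)."""
    start = end - timedelta(days=30 * months)
    return [r for r in records if start <= r[0] <= end]


def passes_gate(candidate, incumbent, tolerance=0.02):
    """Promote the retrained model only if it is no worse on BOTH evaluation periods.

    `candidate` and `incumbent` map 'recent' and 'old' to a higher-is-better score.
    """
    return all(candidate[p] >= incumbent[p] - tolerance for p in ("recent", "old"))
```

The "old" period check is what guards against catastrophic forgetting. A candidate that gains on recent data but drops on rare historical failure modes is rejected.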

Key Experimental Protocols

Protocol 1: Developing a Hybrid CNN-LSTM Model for Spectral Time-Series Data Objective: Predict catalyst deactivation in a transesterification reactor using near-infrared (NIR) spectral streams and temperature/pressure data.

- Data Collection: Collect NIR spectra (every 30 seconds) and process sensor data from at least 50 batch runs, including runs that led to failure.

- Preprocessing: Apply Standard Normal Variate (SNV) correction to NIR spectra. Align all time-series to a common batch timeline using Dynamic Time Warping.

- Model Architecture:

- Input 1 (Spectral): 1D convolutional layers (filters=64, kernel=3) to extract local spectral features, followed by a MaxPooling layer.

- Input 2 (Sensor): Raw time-series vector.

- Fusion & Sequence Learning: At each time step, concatenate the flattened CNN output with the corresponding sensor readings, then feed the resulting sequence into a two-layer LSTM network (units=50) to learn temporal dependencies.

- Output: A dense layer with a sigmoid activation for binary failure prediction (e.g., "deactivation within next 2 hours").

- Training: Use a balanced binary cross-entropy loss. Optimize with Adam. Validate using chronological block cross-validation.
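The two preprocessing steps of this protocol, SNV correction and Dynamic Time Warping, are small enough to sketch in standard-library Python. The DTW function returns only the alignment distance. Aligning batches to a common timeline would additionally require backtracking the warping path, which is omitted here.

```python
from statistics import mean, stdev


def snv(spectrum):
    """Standard Normal Variate: center and scale each spectrum by its own mean and SD."""
    mu, sd = mean(spectrum), stdev(spectrum)
    return [(x - mu) / sd for x in spectrum]


def dtw_distance(a, b):
    """Classic O(n*m) dynamic time warping distance with absolute-difference cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Best of: step in a only, step in b only, or step in both
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]
```

A batch trajectory that is only stretched in time, e.g. `[0, 1, 1, 2]` vs. `[0, 1, 2]`, has a DTW distance of zero. Such a batch would be aligned rather than flagged as different.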

Protocol 2: Benchmarking Ensemble Methods for Feedstock Impurity-Induced Failure Objective: Compare model performance in predicting yield drop from rapid compositional analysis of incoming biomass.

- Dataset: Compile data from 300+ feedstock lots. Features: lignin content, cellulose crystallinity, moisture, particle size distribution (PSD), and origin. Label: Binary (yield drop >15%).

- Models to Train: Logistic Regression (baseline), Random Forest, XGBoost, and a Feedforward Neural Network.

- Methodology: Split data 70/15/15 (train/validation/test). Use 5-fold CV on the training set for hyperparameter tuning (GridSearchCV). For tree-based models, tune max_depth, n_estimators, and class weights.

- Evaluation: Report Precision, Recall, F1-Score, and AUC-ROC on the held-out test set. Perform a DeLong test to check for statistically significant differences between the AUC-ROC values.
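The evaluation metrics can be computed without external libraries. The sketch below uses the rank interpretation of AUC-ROC: the probability that a randomly chosen failure lot scores higher than a randomly chosen non-failure lot, with ties counted as one half. `sklearn.metrics.roc_auc_score` gives the same value. The DeLong test is not implemented here.

```python
def classification_metrics(labels, scores, threshold=0.5):
    """Precision, recall and F1 for the failure class (label 1) at a score threshold."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auc_roc(labels, scores):
    """AUC as P(random positive outranks random negative); ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise loop is O(positives × negatives). That is negligible for a dataset of about 300 lots.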

Data Presentation

Table 1: Performance Benchmark of ML Models for Precursor Failure Detection

| Model | Avg. Precision | Recall (Failure Class) | F1-Score | AUC-ROC | Inference Latency (ms) |
|---|---|---|---|---|---|
| Logistic Regression | 0.72 | 0.65 | 0.68 | 0.81 | < 1 |
| Random Forest | 0.88 | 0.82 | 0.85 | 0.93 | 10 |
| XGBoost | 0.91 | 0.85 | 0.88 | 0.95 | 7 |
| Hybrid CNN-LSTM | 0.89 | 0.83 | 0.86 | 0.94 | 25 |

Table 2: Impact of Data Augmentation on Model Generalization (LSTM Model)

| Augmentation Technique | Training Accuracy | Validation Accuracy | Improvement in Recall (vs. Baseline) |
|---|---|---|---|
| Baseline (None) | 0.99 | 0.78 | -- |
| + Jittering | 0.97 | 0.82 | +0.08 |
| + Window Warping | 0.95 | 0.85 | +0.12 |
| + Jittering & Warping | 0.94 | 0.87 | +0.15 |

Diagrams

Title: Predictive Analytics Workflow for Biofuel Supply Chain

Title: Hybrid CNN-LSTM Model Architecture

The Scientist's Toolkit: Research Reagent Solutions

| Item / Reagent | Function in Precursor Failure Research |
|---|---|
| Simulated Failure Datasets (e.g., Tennessee Eastman Process) | Benchmarks and validates ML models on known fault patterns before using proprietary biorefinery data. |
| SHAP (SHapley Additive exPlanations) Python Library | Explains model predictions, identifying key spectral bands or sensor readings leading to a failure call. |
| TensorFlow Extended (TFX) / MLflow | Platforms for building reproducible, automated ML pipelines from data ingestion to model deployment. |
| Online NIR Spectrometer Probe | Provides real-time, inline spectral data for immediate feature extraction and model input. |
| Synthetic Biofuel Precursor Blends | Allows controlled introduction of impurities (e.g., high lignin, acids) to generate failure data for model training in a lab setting. |
| Cloud GPU Compute Instance (e.g., NVIDIA T4) | Enables rapid training and hyperparameter tuning of complex deep learning models (CNNs, LSTMs). |

Designing Agile and Adaptive Logistics Networks for Biofuels

Technical Support Center

This support center was established as part of a research thesis on improving biofuel supply chain resilience to node disruptions. It provides troubleshooting resources for researchers and scientists developing agile logistics networks for biofuel feedstocks and products.

Troubleshooting Guides & FAQs

Q1: Our model for rerouting feedstock shipments after a biorefinery node failure consistently underestimates recovery time by 30-40%. What key parameters might we be missing? A: This is a common issue in dynamic network modeling. The discrepancy often stems from an oversimplified representation of node "switch-over" time. Beyond transport distance, your model must explicitly incorporate:

- Pre-processing Delay (t_p): Time required for a receiving facility to reconfigure equipment for a different feedstock type (e.g., switchgrass vs. corn stover). Typical lag: 24-72 hours.

- Regulatory & Documentation Check (t_r): Time for environmental compliance verification and bill-of-lading updates for the new route. Can add 12-48 hours.

- Queue Delay (t_q): If using shared 3PL (Third-Party Logistics) assets, factor in their existing commitment density. Model asset arrivals as a Poisson process (exponentially distributed waiting times between available assets).

Experimental Protocol for Parameter Calibration:

- Simulation Setup: Use a supply chain simulation platform (e.g., anyLogistix, or MATLAB SimEvents for discrete-event models).

- Define Baseline Network: Create a digital twin of your test network with 5 feedstock sources, 2 biorefineries (Node A, B), and 4 demand points.

- Induce Disruption: At simulation time T=100hrs, disable Biorefinery A.

- Run Experimental Groups:

- Group 1 (Basic): Reroute logic based on distance/speed only.

- Group 2 (Advanced): Integrate parameters t_p, t_r, and t_q with values sourced from your partner logistics providers.

- Measure: Record Time-to-Full-Throughput-Recovery for each group. Compare to historical disruption data if available.
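The two experimental groups can be prototyped before building the full network model. The sketch below rests on several assumptions, all labelled. The switch-over terms are added sequentially, so no overlap between pre-processing and documentation is modeled. t_p and t_r are drawn uniformly from the ranges quoted above, though the uniform shape itself is an assumption. t_q is the exponential waiting time implied by Poisson asset arrivals at a hypothetical rate of one asset every 12 hours.

```python
import random


def recovery_time(transport_h, rng, advanced=True, asset_rate_per_h=1 / 12):
    """One sampled time-to-recovery (hours) after Biorefinery A goes offline.

    Basic (Group 1) logic uses transport time only; advanced (Group 2) logic
    adds pre-processing (t_p), regulatory (t_r) and queue (t_q) delays.
    """
    if not advanced:
        return transport_h
    t_p = rng.uniform(24, 72)               # equipment reconfiguration, hours
    t_r = rng.uniform(12, 48)               # compliance and documentation, hours
    t_q = rng.expovariate(asset_rate_per_h)  # wait for next 3PL asset (Poisson arrivals)
    return transport_h + t_p + t_r + t_q
```

Averaging many advanced samples and subtracting the basic value estimates how many hours the distance-only model omits. The estimate can then be compared with the observed 30-40% underestimate.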

Q2: When testing a multi-modal (truck-to-rail) contingency plan for biodiesel distribution, we encounter unexpected viscosity-induced clogging in cold-weather scenarios. How do we troubleshoot this in the lab? A: This is a material compatibility issue between the fuel blend and the temperature profile of the alternate logistics path.

Experimental Protocol for Clogging Analysis:

- Sample Preparation: Prepare samples of your biodiesel blend (e.g., B100 meeting ASTM D6751, or a B20 blend made from D6751-compliant B100).

- Cold Flow Test: Use a Cold Filter Plugging Point (CFPP) analyzer (e.g., PAC LPS ISL CFPP-5CCS). Protocol: a. Cool the sample at a controlled rate (e.g., 1°C/min). b. At each 1°C interval, apply vacuum to draw the sample through a 45-micron filter. c. Record the highest temperature at which the sample fails to pass through the filter within 60 seconds. This is the CFPP.

- Correlate with Logistics Data: Compare the CFPP to the minimum ambient temperature (T_min) of your planned rail route. If CFPP > T_min, clogging is probable.

- Solution Testing: Repeat the CFPP test with additives (e.g., cold flow improvers such as ethylene-vinyl acetate copolymers) at 0.1%, 0.2%, and 0.3% by volume. Incorporate additive cost and handling into your network agility cost model.
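The CFPP > T_min rule and the dose-screening step can be combined to pick the lowest additive dose that clears every segment of a route. Segment names, temperatures and CFPP values in the example are hypothetical; real inputs would be measured CFPPs and route climate data. The optional safety margin is an assumption, not part of the original rule.

```python
def at_risk_segments(cfpp_c, route_min_temps_c, margin_c=0.0):
    """Route segments where clogging is probable: CFPP > T_min - margin."""
    return [seg for seg, t_min in route_min_temps_c.items() if cfpp_c > t_min - margin_c]


def lowest_sufficient_dose(cfpp_by_dose, route_min_temps_c, margin_c=0.0):
    """Smallest additive dose (% v/v) whose measured CFPP clears every segment, else None."""
    for dose in sorted(cfpp_by_dose):
        if not at_risk_segments(cfpp_by_dose[dose], route_min_temps_c, margin_c):
            return dose
    return None


route = {"plains": -6.0, "mountain_pass": -12.0}           # hypothetical T_min per segment, °C
measured = {0.0: 2.0, 0.1: -5.0, 0.2: -8.0, 0.3: -13.0}    # hypothetical CFPP per dose, °C
print(lowest_sufficient_dose(measured, route))
```

If no tested dose qualifies, the function returns `None`. That outcome points to a different blend or route rather than a higher dose.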

Q3: Our digital twin for supply chain resilience shows high volatility in performance metrics (e.g., Service Level) when we run stochastic simulations of port disruptions. How can we validate if the model is accurate or overly sensitive? A: Volatility indicates high sensitivity to input distributions. You must perform a robustness validation.

Experimental Protocol for Model Validation:

- Define Key Output Variable (KOV): Service Level (% of demand fulfilled).

- Design of Experiments (DOE): Vary three key stochastic input parameters using a Latin Hypercube Sampling design:

Disruption Duration (D): Truncated Log-Normal distribution (Mean: 7 days, Min: 1 day, Max: 30 days).

Alternative Supplier Lead Time (L): Uniform distribution (Min: 2 days, Max: 10 days).

Demand Spike Post-Disruption (S): Normal distribution (Mean: 120% of baseline demand, SD: 10%).

- Run Simulations: Execute 1000 replications for each set of (D, L, S) parameters from the DOE.

- Statistical Analysis: Perform a multiple linear regression of the form KOV = β0 + β1*D + β2*L + β3*S + ε. A high R² value (>0.8) suggests the model's volatility is explainable by its inputs and likely accurate. A low R² indicates unmodeled noise, nonlinear or interaction effects that the linear model cannot capture, or an error in the simulation logic.
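The Latin Hypercube design for (D, L, S) can be generated with the standard library. Each dimension gets exactly one point per 1/n stratum, and inverse CDFs map the unit cube onto the three distributions. The text does not specify the log-normal shape parameter, so `sigma_d = 0.6` is an assumption. The mean of 7 days is likewise applied to the untruncated distribution. The regression step itself (e.g., ordinary least squares via `numpy.linalg.lstsq`) is not shown.

```python
import math
import random
from statistics import NormalDist


def latin_hypercube(n, d, rng):
    """n points in [0, 1)^d with exactly one point per 1/n stratum in every dimension."""
    columns = []
    for _ in range(d):
        strata = [(k + rng.random()) / n for k in range(n)]
        rng.shuffle(strata)
        columns.append(strata)
    return [list(row) for row in zip(*columns)]


def to_inputs(u, sigma_d=0.6):
    """Map one unit-cube point to (D, L, S) via inverse CDFs."""
    eps = 1e-12
    # D: log-normal with untruncated mean 7 days, truncated to [1, 30] days
    mu_d = math.log(7.0) - sigma_d ** 2 / 2     # ensures exp(mu + sigma^2 / 2) = 7
    log_d = NormalDist(mu_d, sigma_d)
    lo, hi = log_d.cdf(math.log(1.0)), log_d.cdf(math.log(30.0))
    duration = math.exp(log_d.inv_cdf(lo + u[0] * (hi - lo)))
    # L: Uniform(2, 10) days
    lead_time = 2.0 + 8.0 * u[1]
    # S: Normal(1.20, 0.10) demand multiplier; clamp u away from 0 and 1 for inv_cdf
    spike = NormalDist(1.20, 0.10).inv_cdf(min(max(u[2], eps), 1 - eps))
    return duration, lead_time, spike


design = [to_inputs(u) for u in latin_hypercube(10, 3, random.Random(0))]
```

Each row of `design` is one (D, L, S) setting to be replicated in the simulation. The truncation is applied by inverting the CDF only between F(1) and F(30), so no samples are rejected.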

Table 1: Comparison of Biofuel Feedstock Rerouting Strategies Post-Node Failure

| Strategy | Avg. Recovery Time (hrs) | Cost Premium (%) | Data Source (Simulated/Real) | Key Limiting Factor |
|---|---|---|---|---|
| Pre-Contractual 3PL Backup | 48 - 72 | 15 - 25 | Real (Industry Case Study) | Contractual Volume Commitments |
| Dynamic Spot Market Procurement | 24 - 48 | 30 - 50 | Simulated (Agent-Based Model) | Price Volatility & Asset Availability |
| Inter-Network Resource Sharing (Co-op) | 12 - 24 | 5 - 15 | Simulated (Optimization Model) | IT System Compatibility & Trust |
| Pre-Positioned Strategic Buffer Stock | < 12 | 8 - 12 | Real (Military Logistics Model) | High Inventory Holding Cost |

Table 2: Cold Flow Properties of Biofuel Blends with Additives

| Fuel Blend | Baseline CFPP (°C) | CFPP with 0.2% Additive A (°C) | CFPP with 0.2% Additive B (°C) | Viscosity at 10°C (mm²/s) |
|---|---|---|---|---|
| Soy-based B100 | +2 | -5 | -8 | 4.12 |
| Waste-Oil B100 | -1 | -7 | -11 | 4.35 |
| B20 (Petro-Diesel Blend) | -15 | -22 | -24 | 2.98 |

Visualization: Network Response Logic

Title: Decision Logic for Agile Network Response to Disruption

Title: Workflow for Testing Logistics Network Resilience

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Biofuel Logistics Network Experiments

| Item | Function in Research | Example Product/Specification |
|---|---|---|
| Agent-Based Simulation Platform | To create a digital twin of the supply network for stress-testing under disruptions. | anyLogistix, AnyLogic, MATLAB SimEvents. |
| Geospatial Analysis Software | To model and optimize transport routes, accounting for terrain, infrastructure, and real-time conditions. | ArcGIS Network Analyst, QGIS with ORS tools. |
| Cold Filter Plugging Point (CFPP) Analyzer | To determine the low-temperature operability limits of biofuel blends in contingency routes. | PAC LPS ISL CFPP-5CCS, per ASTM D6371. |
| Dynamic Viscosity Meter | To measure fuel viscosity under varying temperature regimes predicted in alternate logistics paths. | Anton Paar SVM 3001 Stabinger Viscometer. |
| Supply Chain Stress-Test Dataset | Historical or synthetic data on port closures, weather events, and demand spikes for model calibration. | RESILIENCE database, FRED economic data. |
| Cloud Computing Credits | For running high-volume stochastic simulations (Monte Carlo) and machine learning optimization models. | AWS EC2, Google Cloud Compute Engine. |

Diagnosing Failures and Fortifying Networks: Proactive Solutions for Chain Robustness

Common Failure Modes in Feedstock Sourcing & Preprocessing Hubs

Troubleshooting Guides & FAQs

FAQ 1: What are the primary indicators of feedstock quality degradation upon arrival at a preprocessing hub, and how can they be rapidly assessed?

Answer: Key indicators include moisture content >15% (wet basis), visible microbial contamination, foreign material (e.g., rocks, metal) >5% by weight, and abnormal color/odor. For rapid assessment, implement a three-step protocol:

- NIR Screening: Use a portable near-infrared (NIR) spectrometer for immediate moisture and compositional analysis on 10 random samples from the delivery lot.

- Bulk Density Test: Use a standard bushel tester; a deviation >10% from the standard suggests improper handling or contamination.

- Ethanol Soak Test (lignocellulosic feedstocks): Delayed or reduced solubilization of extractives can indicate pre-decomposition.
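The numeric thresholds in this answer translate directly into a lot-screening check. The sketch assumes the moisture input is the mean of the 10 NIR readings. Visual, color and odor checks remain manual and are not modeled.

```python
from statistics import mean


def assess_lot(nir_moisture_readings, foreign_pct, bulk_density, reference_density):
    """Return the quantitative quality indicators a delivery lot breaches.

    Thresholds: moisture > 15 % (wet basis, mean of NIR readings),
    foreign material > 5 % by weight, bulk density deviating > 10 % from reference.
    """
    flags = []
    if mean(nir_moisture_readings) > 15.0:
        flags.append("moisture")
    if foreign_pct > 5.0:
        flags.append("foreign_material")
    if abs(bulk_density - reference_density) / reference_density > 0.10:
        flags.append("bulk_density")
    return flags
```

An empty list means the lot passes the quantitative screen. Any flag would route it to the manual inspection or rejection path.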

FAQ 2: Our preprocessing hub is experiencing inconsistent particle size reduction, leading to downstream enzymatic hydrolysis yield variability. What are the likely causes and corrective actions?

Answer: Inconsistent particle size typically stems from (A) wear and tear of mill/grinder blades/screens, (B) fluctuating feedstock moisture content causing clogging or uneven grinding, or (C) improper calibration of feed-rate controllers.

Corrective Protocol:

- Mechanical Inspection: Measure blade clearance and screen integrity every 40 operational hours. Replace components if wear exceeds manufacturer's tolerance (typically >2mm variance).

- Moisture Pre-Conditioning: Install a real-time moisture sensor at the grinder inlet. Implement a feedback loop to a pre-conditioning chamber that uses steam or dry air to normalize feedstock moisture to a target of 12% (±1%) before comminution.

- Calibration: Weekly, perform a feed-rate calibration using a standardized material (e.g., clean, dry corn stover) and adjust the variable frequency drive (VFD) to achieve the specified throughput (kg/hr).
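The moisture feedback loop described under Moisture Pre-Conditioning can be illustrated with a proportional controller. Positive action means drying with dry air, and negative action means adding steam. The plant model is a deliberate simplification in which each step removes or adds exactly the commanded moisture points. The gain `kp` is a placeholder, not a tuned value.

```python
def condition_moisture(inlet_moisture, target=12.0, kp=0.6, steps=10):
    """Simulate proportional control toward the 12 % (±1 %) grinder-inlet target.

    Returns the moisture trajectory, starting with the inlet value.
    """
    m = inlet_moisture
    history = [m]
    for _ in range(steps):
        action = kp * (m - target)   # points of moisture to remove (negative: add via steam)
        m -= action
        history.append(m)
    return history
```

With `kp = 0.6`, the error shrinks by 60% per step, so an 18% inlet reaches the ±1% band after two steps. A real chamber would need a gain tuned against measured dynamics.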

FAQ 3: We suspect microbial spoilage during feedstock intermediate storage, which compromises fermentable sugar recovery. How can this be diagnosed and mitigated?

Answer: Spoilage is diagnosed by measuring a temperature rise (>10°C above ambient) within a storage silo/pile, a pH drop (>1 unit), and the production of organic acids (e.g., acetic, lactic) above 3% w/w of dry matter.

Experimental Protocol for Diagnosis:

- Insert temperature probes at the core and periphery of the storage unit.

- Extract core samples using a sterile auger at depths of 0.5m, 1.5m, and 2.5m.

- Homogenize 10g of each sample in 90ml sterile deionized water, filter, and measure pH.

- Analyze the filtrate using High-Performance Liquid Chromatography (HPLC) with a refractive index (RI) detector and an Aminex HPX-87H column to quantify organic acid profiles.
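To compare HPLC results with the 3% w/w dry-matter threshold, the extract concentration must be converted back to the sample's dry matter. The sketch approximates extract volume as the 90 ml of added water plus the sample's own water, at an assumed density of 1 g/ml. Volume changes from dissolved solids are ignored. The indicator function reports each diagnostic threshold from the answer separately rather than combining them.

```python
def acid_pct_dry_matter(conc_g_per_l, sample_g=10.0, water_ml=90.0, moisture_frac=0.0):
    """Convert an organic-acid concentration in the extract (g/L) to % w/w of dry matter."""
    extract_l = (water_ml + sample_g * moisture_frac) / 1000.0
    dry_matter_g = sample_g * (1.0 - moisture_frac)
    return 100.0 * conc_g_per_l * extract_l / dry_matter_g


def spoilage_indicators(temp_rise_c, ph_drop, total_acids_pct_dm):
    """Which diagnostic thresholds are exceeded (>10 °C rise, >1 pH unit, >3 % w/w DM acids)."""
    return {
        "temperature": temp_rise_c > 10.0,
        "ph": ph_drop > 1.0,
        "organic_acids": total_acids_pct_dm > 3.0,
    }
```

For example, 5 g/L of total acids from a sample with 30% moisture corresponds to about 6.6% of dry matter, well above the 3% threshold.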

Mitigation Strategy: Apply a proven organic acid-based preservative (e.g., propionic acid at 1.5% w/w) during the densification (pelletizing/briquetting) step. Ensure storage atmosphere is maintained with <5% O2 using inert gas (N2) flushing.

FAQ 4: What are the most common points of failure in the feedstock receiving and sorting automation system?

Answer: Failures commonly occur at: (1) The tramp metal detector/separation system, leading to downstream equipment damage. (2) The optical sorter, due to dust accumulation on lenses or misconfigured rejection thresholds. (3) The belt weighing system, due to misalignment or build-up of material on load cells.

Troubleshooting Steps:

- Metal Detector: Test daily with a standard ferrous and non-ferrous test piece. If the detector can no longer detect a 3.0mm diameter test piece, inspect and clean the aperture.

- Optical Sorter: Perform a bi-weekly calibration using "good" and "bad" feedstock samples. Clean air knives and camera lenses every shift.

- Load Cells: Zero the scale before each batch. Check for physical obstructions and ensure the belt is centered.

Table 1: Impact of Feedstock Moisture Content on Preprocessing Efficiency & Downtime

| Moisture Content (% wet basis) | Grinder Throughput (% of Rated Capacity) | Screen Clogging Frequency (events/8hr shift) | Average Particle Size CV* (%) | Estimated Sugar Yield Loss (%) |
|---|---|---|---|---|
| <10% | 95% | 1 | 15% | 0% |
| 10-15% (Target) | 100% | 0 | 10% | 0% |
| 15-20% | 85% | 3 | 22% | 5% |
| >20% | 60% | 7 | 35% | 15% |

*CV: Coefficient of Variation

Table 2: Comparative Analysis of Feedstock Preservation Methods for Long-Term (>90 days) Storage

| Preservation Method | Capital Cost Index | Operational Cost ($/ton) | Dry Matter Loss (%) | Fermentable Sugar Retention (%) |
|---|---|---|---|---|
| Untreated Pile | 1.0 | 0.0 | 25% | 68% |
| Ensiling (Anaerobic) | 2.5 | 8.5 | 12% | 85% |
| Chemical (Propionate) Additive | 1.8 | 12.0 | 5% | 94% |
| Drying & Pelletizing | 8.0 | 25.0 | 2% | 98% |

Experimental Protocols

Protocol 1: Standardized Test for Feedstock Contaminant Load

Objective: Quantify inorganic (ash, soil) and non-processible organic contaminant levels in a received feedstock lot. Materials: Drying oven, muffle furnace, desiccator, analytical balance (0.1mg), sieves (4mm and 2mm mesh). Methodology:

- Obtain a 5kg representative sample from the lot using a quartering method.

- Dry at 105°C to constant weight to determine moisture content.

- Sieve the dried sample through a 4mm sieve. Weigh the fraction retained (foreign matter >4mm, e.g., cobs, stones).

- Gently wash the sub-4mm fraction over a 2mm sieve with a known volume of water. Collect the washed solids, dry, and weigh. The mass loss represents soluble contaminants and fine soil.

- Ash the remaining washed, dried solids in a muffle furnace at 575°C for 3 hours. Cool in a desiccator and weigh. The residue is the inorganic ash content. Calculation: Report contaminants as percentage of dry matter for each fraction.
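The calculation step of Protocol 1 can be written as one function that reports each fraction relative to dry matter. The sketch assumes the entire washed fraction is ashed. If only a subsample goes into the furnace, scale the ash mass accordingly. Material passing the 2mm sieve during washing is counted in the wash-loss fraction, as the protocol describes.

```python
def contaminant_profile(wet_g, dry_g, retained_4mm_g, washed_dry_g, ash_g):
    """Moisture (% wet basis) and contaminant fractions (% of dry matter) for Protocol 1.

    washed_dry_g: dry mass of solids left on the 2 mm sieve after washing.
    ash_g: muffle-furnace residue (575 °C) from those washed solids.
    """
    sub_4mm_g = dry_g - retained_4mm_g
    return {
        "moisture_pct_wb": 100.0 * (wet_g - dry_g) / wet_g,
        "coarse_foreign_pct_dm": 100.0 * retained_4mm_g / dry_g,
        "wash_loss_pct_dm": 100.0 * (sub_4mm_g - washed_dry_g) / dry_g,
        "ash_pct_dm": 100.0 * ash_g / dry_g,
    }
```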

Protocol 2: Enzymatic Hydrolysis Saccharification Assay for Preprocessed Feedstock

Objective: Evaluate the effectiveness of preprocessing on sugar yield potential. Materials: 50mM Sodium citrate buffer (pH 4.8), commercial cellulase cocktail (e.g., CTec3), 50ml conical tubes, shaking incubator, HPLC system. Methodology:

- Mill preprocessed feedstock to pass a 1mm screen.

- Load 1.0g (dry weight equivalent) into a 50ml tube. Add 20ml citrate buffer.

- Add enzyme cocktail at a loading of 20mg protein/g glucan.

- Incubate at 50°C with agitation at 150 rpm for 72 hours.

- At 0, 24, 48, and 72 hours, take 1ml samples, centrifuge, filter (0.2µm), and analyze supernatant via HPLC for glucose, xylose, and inhibitor (furfural, HMF) concentrations. Calculation: Calculate total sugar yield as a percentage of the theoretical maximum based on initial feedstock composition.
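The theoretical-maximum calculation uses the standard hydration factors for converting polysaccharide to monomer mass: about 1.111 for glucan to glucose and 1.136 for xylan to xylose. The sketch assumes a constant liquid volume (e.g., the 20 ml of buffer) and ignores enzyme volume and sample withdrawals. A more careful mass balance would correct for both.

```python
GLUCAN_TO_GLUCOSE = 180.16 / 162.14   # ≈ 1.111: water added on hydrolysis
XYLAN_TO_XYLOSE = 150.13 / 132.12     # ≈ 1.136


def sugar_yield_pct(glucose_g_l, xylose_g_l, volume_ml, biomass_dry_g,
                    glucan_frac, xylan_frac):
    """Total (glucose + xylose) yield as % of the theoretical maximum."""
    released_g = (glucose_g_l + xylose_g_l) * volume_ml / 1000.0
    theoretical_g = biomass_dry_g * (glucan_frac * GLUCAN_TO_GLUCOSE
                                     + xylan_frac * XYLAN_TO_XYLOSE)
    return 100.0 * released_g / theoretical_g
```

For example, 1.0 g of feedstock with 40% glucan releasing 20 g/L glucose into 20 ml gives roughly a 90% glucose yield.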

Visualizations

Title: Feedstock Preprocessing Hub Flow & Failure Points

Title: Feedstock Quality Control & Decision Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Feedstock and Preprocessing Research

| Item & Example Product | Primary Function in Research Context |
|---|---|
| Portable NIR/MIR Spectrometer (e.g., Thermo Scientific microPHAZIR) | Rapid, non-destructive field analysis of feedstock moisture, carbohydrate, lignin, and ash content. Enables real-time quality decision-making at the receiving point. |
| Commercial Cellulase/Hemicellulase Cocktail (e.g., Novozymes Cellic CTec3, HTec3) | Standardized enzyme mixture for conducting reproducible enzymatic hydrolysis saccharification assays to evaluate the digestibility of preprocessed feedstocks. |
| Anaerobic Chamber or Bag System (e.g., Coy Lab Products Vinyl Anaerobic Chamber, Mitsubishi AnaeroPouch) | Creates an oxygen-free environment for studying microbial spoilage pathways during storage or for conducting fermentation assays with strict anaerobes on feedstock hydrolysates. |
| Organic Acid Standards & HPLC Column (e.g., Sigma-Aldrich Organic Acid Mix, Bio-Rad Aminex HPX-87H) | Quantification of fermentation inhibitors (acetic, formic, levulinic acid) and spoilage markers (lactic, propionic acid) in feedstock and process samples. |
| Moisture Analyzer (e.g., Mettler Toledo HE53 or equivalent halogen radiator-based) | Provides precise and fast (<5 min) determination of moisture content in solid feedstock samples, critical for process control and yield calculations. |
| Stem/Seed Grinder (e.g., Retsch SM 300 or Wiley Mini-Mill) | Produces a homogeneous, fine powder from diverse, fibrous feedstocks for accurate and representative downstream compositional and enzymatic analysis. |
| Lignin & Structural Carbohydrate Analysis Kits (e.g., NREL LAP procedures or commercial assay kits from Megazyme) | Accurate determination of glucan, xylan, arabinan, and acid-insoluble lignin content, the foundational data for mass balance and conversion efficiency calculations. |
| Trace Element & ICP-MS Standards (e.g., Inorganic Ventures custom multi-element standards) | Analysis of ash composition and trace element content (e.g., K, Na, Ca, Mg, S), which can act as catalyst poisons in downstream thermochemical conversion processes or impact fermentation. |

Optimizing Multi-Sourcing and Feedstock Diversification Strategies

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During a multi-sourced lignocellulosic hydrolysis, we observe inconsistent sugar yield. What are the primary troubleshooting steps? A: Inconsistent yields typically stem from feedstock compositional variability. Follow this protocol:

- Immediate Analysis: Perform a compositional analysis (NREL/TP-510-42618) on the current batch. Compare to your baseline feedstock profile in Table 1.

- Pre-treatment Check: Verify pre-treatment severity (e.g., the severity factor log R0). Re-calibrate temperature and pH sensors.

- Enzyme Cocktail Adjustment: If inhibitor levels (furfurals, phenolics) are high, consider adjusting enzyme cocktail ratios or introducing a detoxification step.

- Protocol: Standardize with a Feedstock Variability Buffer Test. Run parallel hydrolysis on a 50/50 blend of the problematic batch and a known standard. If yield normalizes, the issue is inherent variability, necessitating blending or pre-processing.
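The severity check can use the commonly cited Overend-Chornet severity factor, R0 = t · exp((T − 100) / 14.75), with t in minutes and T in °C. The text names "log R0" but gives no formula, so this specific definition is an assumption. Comparing the logged severity of each batch against the target helps separate process drift from feedstock variability.

```python
import math


def log_severity(time_min, temp_c):
    """Overend-Chornet severity factor: log10(R0), R0 = t * exp((T - 100) / 14.75)."""
    return math.log10(time_min * math.exp((temp_c - 100.0) / 14.75))
```

For the standard pre-treatment used in the buffer test (160°C for 20 min), log R0 is about 3.07. A batch that ran 3°C cooler would fall to about 2.98.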

Q2: Our catalyst performance degrades rapidly when switching between different waste oil feedstocks in biodiesel production. How can we diagnose this? A: Rapid catalyst deactivation points to feedstock contaminants.

- Test Free Fatty Acid (FFA) & Water Content: Use titration (AOCS Ca 5a-40) and Karl Fischer titration immediately upon receipt of feedstock. Values must be within catalyst tolerance (see Table 2).

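- Check for Inorganic Contaminants: Perform ash content analysis (ASTM D482) to measure the total inorganic load; because ash content alone does not identify individual elements, follow up with elemental analysis (e.g., ICP-MS) when ash is elevated. High levels of phosphorus, calcium, or magnesium can poison acid catalysts.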

- Solution: Implement a pre-treatment step. For high FFA (>2%), use an acid esterification pre-treatment. For water, employ a vacuum drying protocol. Establish strict feedstock specification sheets for all suppliers.
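The titration result and the Table 2 limits can be combined into an incoming-lot check. FFA is expressed as oleic acid using the conventional factor of 28.2 (mL × N × 28.2 / sample g). The recommended pre-treatments come from the answer above. For peroxide and insoluble impurities, the answer prescribes no action, so the sketch only reports them as out of specification.

```python
SPEC_LIMITS = {            # acceptable limits from Table 2 (alkali-catalysed transesterification)
    "ffa_pct": 2.0,
    "water_pct": 0.5,
    "peroxide_meq_kg": 5.0,
    "insolubles_pct": 0.1,
}


def ffa_pct_as_oleic(titrant_ml, normality, sample_g):
    """Percent free fatty acids expressed as oleic acid."""
    return titrant_ml * normality * 28.2 / sample_g


def pretreatment_plan(lot):
    """List recommended pre-treatments and any other specification breaches for a lot."""
    plan = []
    if lot["ffa_pct"] >= SPEC_LIMITS["ffa_pct"]:
        plan.append("acid esterification")
    if lot["water_pct"] >= SPEC_LIMITS["water_pct"]:
        plan.append("vacuum drying")
    for key in ("peroxide_meq_kg", "insolubles_pct"):
        if lot[key] >= SPEC_LIMITS[key]:
            plan.append(f"out of spec: {key}")
    return plan
```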

Q3: When modeling multi-sourcing supply chain resilience, how do we quantitatively account for regional disruption risks? A: Integrate a Regional Risk Index (RRI) into your model.

- Data Collection: For each sourcing node, gather current data on: political stability index, frequency of extreme weather events, and transportation infrastructure quality.

- Calculation: Assign a normalized weight (e.g., 0-1) to each factor based on your thesis context. Calculate a weighted RRI for each node.

- Model Integration: Use the RRI to modulate the probability of disruption (p_disruption) in your network optimization or simulation model (e.g., a stochastic programming model). Nodes with RRI > 0.7 should be considered for redundancy.
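The weighted RRI and its use as a disruption probability can be sketched as follows. Two assumptions are labelled here. First, every factor must already be normalized so that 1 means highest risk; a political-stability score where high means stable would need inverting. Second, the linear mapping from RRI to `p_disruption`, with hypothetical bounds `p_min` and `p_max`, is one simple choice rather than a prescribed model.

```python
def regional_risk_index(factors, weights):
    """Weighted RRI in [0, 1]; each factor normalized so that 1 = highest risk."""
    total_w = sum(weights.values())
    return sum(weights[k] * factors[k] for k in weights) / total_w


def disruption_probability(rri, p_min=0.01, p_max=0.20):
    """Hypothetical linear mapping of RRI onto a per-period disruption probability."""
    return p_min + (p_max - p_min) * rri


nodes = {
    "node_A": {"political": 0.9, "weather": 0.8, "infrastructure": 0.7},
    "node_B": {"political": 0.2, "weather": 0.5, "infrastructure": 0.3},
}
weights = {"political": 1.0, "weather": 1.0, "infrastructure": 1.0}
rri = {name: regional_risk_index(f, weights) for name, f in nodes.items()}
needs_redundancy = [name for name, r in rri.items() if r > 0.7]
```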

Q4: Cell culture viability drops when testing extracts from a new alternative biomass source. How do we determine if it's a general toxin or a specific pathway inhibition? A: Execute a Dose-Response & Pathway Screening.

- Protocol: Treat cells with a dilution series of the extract. Perform an MTT assay at 24h and 48h to generate IC50 curves.

- Pathway Analysis: If IC50 is low, use a luciferase-based reporter array (e.g., NF-κB, AP-1, p53 pathways). Transfect cells with reporter constructs and treat with a sub-lethal dose (IC20) of the extract. A >2-fold change in luminescence indicates specific pathway activation/inhibition.

- Metabolomic Profiling: For uncharacterized extracts, run LC-MS on treated vs. untreated cell lysates to identify accumulated or depleted metabolites, pointing to the inhibited pathway.
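IC50 and IC20 can be read off the MTT dose-response data without curve-fitting software by interpolating on a log-dose scale between the two tested concentrations that bracket the target viability. The sketch below assumes viability is already expressed as a percentage of the untreated control. It is a simpler stand-in for the four-parameter logistic fit commonly used for dose-response curves, and the function names and example doses are hypothetical.

```python
import math


def interpolate_icx(doses, viability_pct, x=50.0):
    """Dose giving x% inhibition (viability = 100 - x), by linear interpolation
    on log10(dose) between the two tested doses that bracket the target.
    Returns None if the target viability is not bracketed by the data."""
    target = 100.0 - x
    points = sorted(zip(doses, viability_pct))
    for (d1, v1), (d2, v2) in zip(points, points[1:]):
        if v1 != v2 and (v1 - target) * (v2 - target) <= 0:
            frac = (v1 - target) / (v1 - v2)
            log_dose = math.log10(d1) + frac * (math.log10(d2) - math.log10(d1))
            return 10 ** log_dose
    return None


def is_pathway_hit(treated_lum, control_lum, threshold=2.0):
    """True if luminescence changes more than `threshold`-fold in either direction."""
    fold = treated_lum / control_lum
    return fold > threshold or fold < 1.0 / threshold


if __name__ == "__main__":
    doses = [1, 10, 100, 1000]        # hypothetical extract doses, ug/mL
    viability = [95, 80, 40, 10]      # % of untreated control (MTT)
    print(interpolate_icx(doses, viability, 50))  # IC50, about 56 ug/mL
    print(interpolate_icx(doses, viability, 20))  # IC20, 10 ug/mL
```

The IC20 value returned here is the sub-lethal dose that would be carried into the reporter assay, and `is_pathway_hit` applies the >2-fold luminescence criterion.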

Data Summaries

Table 1: Compositional Variability of Common Lignocellulosic Feedstocks (% Dry Weight)

| Feedstock Source | Cellulose | Hemicellulose | Lignin | Ash | Extractives |
|---|---|---|---|---|---|
| Corn Stover (Iowa) | 36.2 ± 2.1 | 22.8 ± 1.5 | 17.9 ± 1.2 | 5.1 ± 0.8 | 11.5 |
| Switchgrass (Oklahoma) | 34.7 ± 3.0 | 24.1 ± 2.2 | 20.1 ± 1.8 | 4.5 ± 0.6 | 9.2 |
| Miscanthus (Illinois) | 40.5 ± 1.8 | 26.3 ± 1.4 | 18.4 ± 1.0 | 3.2 ± 0.5 | 8.3 |
| Wheat Straw (Kansas) | 35.9 ± 2.5 | 24.8 ± 1.9 | 19.2 ± 1.5 | 7.5 ± 1.1 | 10.1 |

Table 2: Waste Oil Feedstock Specification Limits for Alkali-Catalyzed Transesterification

| Parameter | Acceptable Limit | Analysis Method | Impact of Breach |
|---|---|---|---|
| Free Fatty Acid (FFA) | < 2.0 % | Titration (AOCS Ca 5a-40) | Soap formation, reduced yield, emulsion |
| Water Content | < 0.5 % w/w | Karl Fischer Titration | Hydrolysis, catalyst decomposition |
| Peroxide Value | < 5.0 meq/kg | AOCS Cd 8b-90 | Catalyst oxidation, side reactions |
| Insoluble Impurities | < 0.1 % w/w | Filtration & Gravimetry | Reactor fouling, filter clogging |

Experimental Protocols

Protocol: Feedstock Variability Buffer Test for Enzymatic Hydrolysis

Objective: To determine whether a new feedstock batch's performance deviation is due to inherent composition or process error.

Materials: See The Scientist's Toolkit.

Method:

- Prepare biomass samples: a) Control Batch (CB), b) New Problematic Batch (NPB), c) 50:50 Blend (CB+NPB).

- Apply identical standard pre-treatment (e.g., 1% H2SO4, 160°C, 20 min).

- Neutralize and wash solids to pH 5.0.

- Load reactors at 10% solids loading and add cellulase cocktail at 15 FPU/g glucan.

- Hydrolyze at 50°C, 150 RPM for 72h. Sample at 0, 6, 24, 48, 72h.

- Analyze samples via HPLC for glucose and xylose concentrations.

Analysis: Plot sugar yield over time. If the blend's yield is midway between CB and NPB, the issue is compositional. If the blend's yield matches CB, the NPB may have an acute contaminant.
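The interpretation rules of this protocol can be encoded so that each batch comparison is classified the same way. This sketch assumes a single summary yield per sample (for example, 72 h glucose yield as % of theoretical) and a hypothetical tolerance `tol`, expressed as a fraction of the CB-NPB gap, for deciding what counts as "midway" or "matches". Results that fit neither rule are flagged for follow-up.

```python
def classify_blend(cb: float, npb: float, blend: float, tol: float = 0.10) -> str:
    """Apply the protocol's interpretation rules to one summary yield per sample.
    `tol` (hypothetical) is the allowed distance, as a fraction of |CB - NPB|,
    for a result to count as 'midway' or as 'matching CB'."""
    gap = abs(cb - npb)
    if gap == 0:
        return "no deviation between batches"
    midpoint = (cb + npb) / 2.0
    if abs(blend - midpoint) <= tol * gap:
        return "compositional"
    if abs(blend - cb) <= tol * gap:
        return "possible acute contaminant in NPB"
    return "inconclusive: check for process error and repeat"


if __name__ == "__main__":
    # Hypothetical 72 h glucose yields, % of theoretical.
    print(classify_blend(cb=80.0, npb=60.0, blend=70.5))
    print(classify_blend(cb=80.0, npb=60.0, blend=79.0))
```

Keeping `tol` below 0.25 ensures the "midway" and "matches CB" bands never overlap.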

Protocol: Regional Risk Index (RRI) Calculation for Supply Nodes

Objective: To generate a quantitative risk score for each potential feedstock sourcing node.

Method:

- Select Indicators: For each geographic node $i$, collect the most recent annual data for:
  - $P_i$: Political Stability Index (World Bank, -2.5 to 2.5 scale). Normalize to 0-1.
  - $C_i$: Climate Event Frequency (NOAA, count of severe events). Normalize to 0-1.
  - $T_i$: Logistics Performance Index: Infrastructure score (World Bank, 1-5 scale). Normalize to 0-1.

- Assign Weights: Based on your model's sensitivity, assign weights that sum to 1, e.g., $w_p = 0.4$, $w_c = 0.4$, $w_t = 0.2$.

- Calculate RRI:

$$RRI_i = w_p (1 - P_i) + w_c C_i + w_t (1 - T_i)$$

Note: Higher Political Stability and LPI Infrastructure scores indicate lower risk, so $(1 - P_i)$ and $(1 - T_i)$ invert them; instability and poor infrastructure then increase risk.

- Classify: RRI < 0.3 (Low), 0.3-0.6 (Medium), 0.6-0.8 (High), > 0.8 (Very High).
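A minimal Python sketch of the RRI protocol follows. Assumptions: $P_i$ and $T_i$ are min-max normalized on their published scales (-2.5 to 2.5 and 1 to 5), $C_i$ is scaled by the highest event count among the nodes being compared, and the ambiguous class boundaries at exactly 0.6 and 0.8 are assigned to the lower class. Because a higher World Bank Political Stability score means a more stable country, the sketch inverts $P_i$ in the same way the protocol inverts $T_i$, so that instability raises risk. Class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NodeIndicators:
    """Hypothetical container for one sourcing node's raw indicators."""
    name: str
    political_stability: float  # World Bank index, about -2.5 (weak) to 2.5 (strong)
    severe_events: int          # NOAA count of severe climate events
    lpi_infrastructure: float   # World Bank LPI infrastructure score, 1 to 5


def scale(value, lo, hi):
    """Min-max normalize a value onto [0, 1] using a fixed published scale."""
    return (value - lo) / (hi - lo)


def compute_rri(nodes, w_p=0.4, w_c=0.4, w_t=0.2):
    """Return {node name: RRI}; weights must sum to 1."""
    if abs(w_p + w_c + w_t - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    worst = max(n.severe_events for n in nodes) or 1  # avoid division by zero
    rri = {}
    for n in nodes:
        p = scale(n.political_stability, -2.5, 2.5)
        c = n.severe_events / worst  # assumption: relative to the worst node
        t = scale(n.lpi_infrastructure, 1.0, 5.0)
        # Invert P and T so low stability and poor infrastructure raise risk.
        rri[n.name] = w_p * (1 - p) + w_c * c + w_t * (1 - t)
    return rri


def classify(rri):
    """Risk class. 0.3 is Medium per the text; 0.6 and 0.8 go to the lower class."""
    if rri < 0.3:
        return "Low"
    if rri <= 0.6:
        return "Medium"
    if rri <= 0.8:
        return "High"
    return "Very High"


if __name__ == "__main__":
    nodes = [NodeIndicators("Node A", 1.0, 2, 4.0),
             NodeIndicators("Node B", -1.5, 8, 2.0)]
    for name, score in compute_rri(nodes).items():
        print(name, round(score, 2), classify(score))
```

Scaling $C_i$ by the worst node makes scores relative to the candidate set; a fixed reference count would keep scores comparable across studies.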

Diagrams

Feedstock Yield Issue Diagnostic Tree

Supply Chain Resilience Strategy Framework

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Biofuel Feedstock Research |
|---|---|
| Cellulase Enzyme Cocktail (e.g., CTec2) | Hydrolyzes cellulose to glucose. Critical for evaluating saccharification potential of lignocellulosic biomass. |
| Free Fatty Acid (FFA) Standard Kits | For accurate titration calibration to assess waste oil feedstock quality and pre-treatment needs. |
| Solid Phase Extraction (SPE) Columns (C18) | To remove inhibitors (phenolics, furans) from biomass hydrolysates prior to fermentation toxicity assays. |
| Internal Standards (e.g., Deuterated Succinic Acid) | For accurate quantification of fermentation products in complex broths via GC-MS or LC-MS. |
| Luciferase Reporter Plasmid Kits (NF-κB, Antioxidant Response) | To screen for specific cellular pathway activation/inhibition by novel feedstock extracts. |
| Logistics Performance Index (LPI) Datasets | Quantitative data for modeling transportation reliability and infrastructure quality of sourcing nodes. |
| Process Modeling Software (e.g., GAMS, AnyLogic) | For building stochastic or agent-based models of the multi-source supply chain under disruption. |

Implementing Strategic Buffer Stocks and Dynamic Rerouting Protocols

Technical Support Center: Troubleshooting Guides & FAQs

This support center addresses common technical issues encountered during experiments related to biofuel supply chain resilience research, specifically those investigating buffer stocks and dynamic rerouting to mitigate node disruptions (e.g., facility failures, feedstock supply interruptions).

FAQ 1: How do I calibrate the parameters for my dynamic rerouting model to reflect real-world biofuel feedstock transportation delays?

Answer: Inaccurate delay parameters can skew resilience metrics. Follow this protocol:

- Data Collection: Use historical GPS logistics data from your partnered carrier or from open datasets (e.g., U.S. DOT Freight Analysis Framework).

- Baseline Establishment: Calculate the mean ($\mu$) and standard deviation ($\sigma$) of travel times for each primary route segment under normal conditions over a 3-month period.

- Disruption Simulation: Scale $\mu$ by a delay multiplier (e.g., 1.5x to 3x) for segments adjacent to a simulated disrupted node. Base the multiplier on the severity of the simulated disruption (e.g., facility closure = 3x, partial downtime = 1.5x).

- Validation: Compare your model's total system delay output against a known historical disruption event. A deviation of more than 15% indicates that the delay multipliers need recalibration.
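The calibration steps can be sketched as follows. The sketch assumes that travel-time observations per segment are available as lists of hours and uses the example severity multipliers from the text (partial downtime = 1.5x, facility closure = 3x). The 15% check is taken as a relative deviation from the observed historical delay. Function names and the segment dictionary layout are hypothetical.

```python
import statistics

# Example severity multipliers from the text (applied to the baseline mean).
SEVERITY_MULTIPLIER = {"partial_downtime": 1.5, "facility_closure": 3.0}


def baseline(travel_times_h):
    """Mean and sample standard deviation of normal-condition travel times (h)."""
    return statistics.mean(travel_times_h), statistics.stdev(travel_times_h)


def apply_disruption(segment_mu_h, disrupted_node, severity):
    """Scale the mean transit time of every segment touching the disrupted node.
    segment_mu_h maps (origin, destination) -> baseline mean in hours."""
    m = SEVERITY_MULTIPLIER[severity]
    return {seg: mu * m if disrupted_node in seg else mu
            for seg, mu in segment_mu_h.items()}


def needs_recalibration(model_delay_h, observed_delay_h, tolerance=0.15):
    """True if the modeled total delay deviates from the historical event by
    more than `tolerance` (relative to the observed delay)."""
    return abs(model_delay_h - observed_delay_h) / observed_delay_h > tolerance


if __name__ == "__main__":
    mu, sigma = baseline([4.0, 4.5, 5.0, 4.5])
    segments = {("Farm Cluster A", "Biorefinery X"): mu,
                ("Intermodal Terminal B", "Biorefinery Y"): 18.0}
    print(apply_disruption(segments, "Biorefinery X", "facility_closure"))
    print(needs_recalibration(model_delay_h=120.0, observed_delay_h=100.0))
```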

Table 1: Example Calibration Parameters for Corn Ethanol Route Segments

| Route Segment | Normal Mean Transit, μ (h) | Std Dev, σ (h) | Moderate Disruption Multiplier | Severe Disruption Multiplier |
|---|---|---|---|---|
| Farm Cluster A to Biorefinery X | 4.5 | 0.7 | 1.8 × μ | 3.2 × μ |
| Intermodal Terminal B to Biorefinery Y | 18.0 | 2.5 | 2.0 × μ | Not Applicable (Route Closed) |

FAQ 2: My buffer stock optimization experiment is yielding unrealistically high or low safety stock levels. What are the likely causes?

Answer: This typically stems from incorrect input variables for the newsvendor or (s,S) inventory models commonly used.

- Cause A: Overestimated Demand Volatility. Double-check the standard deviation of your historical weekly feedstock demand. An outlier may be inflating this value.

- Cause B: Incorrect Service Level Target. A 99.9% service level generates much larger buffers than a 95% level. Align this target with the criticality of the node in your thesis: a non-substitutable catalytic enzyme supply may warrant 99%, while regional biomass might warrant 92%.

- Cause C: Underestimated Lead Time during Disruption. The "lead time" variable must account for the duration of a simulated node disruption (e.g., 14 days), not just standard order fulfillment time.

Experimental Protocol for Determining Buffer Stock Size:

- Define the desired service level (probability of no stockout).

- Gather 24 months of historical demand data for the specific feedstock or material at the node.

- Calculate the mean ($D$) and standard deviation ($\sigma_D$) of weekly demand.

- Define the expected lead time ($L$) in weeks during a disruption scenario.

- Calculate safety stock ($SS$) using the formula $SS = Z \cdot \sigma_D \cdot \sqrt{L}$, where $Z$ is the z-score corresponding to your service level (e.g., $Z = 1.65$ for 95%).

- Buffer Stock Level $= D \cdot L + SS$.
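The buffer stock calculation can be reproduced with the Python standard library. This sketch assumes weekly demand is approximately normal and independent from week to week, which is the same assumption behind $SS = Z \cdot \sigma_D \cdot \sqrt{L}$. It uses `statistics.NormalDist().inv_cdf` to obtain $Z$ from the service level instead of a lookup table. The function name and example numbers are hypothetical.

```python
import math
import statistics
from statistics import NormalDist


def buffer_stock(weekly_demand, service_level, lead_time_weeks):
    """Safety stock and buffer level from the formulas above:
    SS = Z * sigma_D * sqrt(L); buffer = D * L + SS."""
    D = statistics.mean(weekly_demand)           # mean weekly demand
    sigma_D = statistics.stdev(weekly_demand)    # std dev of weekly demand
    z = NormalDist().inv_cdf(service_level)      # one-sided z-score
    ss = z * sigma_D * math.sqrt(lead_time_weeks)
    return {"Z": z, "safety_stock": ss, "buffer_level": D * lead_time_weeks + ss}


if __name__ == "__main__":
    demand = [90, 110, 95, 105, 100, 120, 80, 100]  # hypothetical tonnes/week
    for level in (0.95, 0.999):
        # A 14-day disruption lead time is 2 weeks.
        print(level, buffer_stock(demand, level, lead_time_weeks=2))
```

Running both service levels on the same demand history shows how strongly Cause B drives the result: $Z$ rises from about 1.64 to about 3.09, nearly doubling the safety stock.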

FAQ 3: The dynamic rerouting algorithm fails to converge on a feasible solution when simulating multiple, simultaneous node disruptions.

Answer: This indicates a possible limitation in your algorithm's constraints or network design.

- Troubleshooting Step 1: Check Capacity Constraints. Verify that alternate routes and destination buffers have sufficient handling and storage capacity to absorb rerouted flows. The algorithm will fail if no viable path has enough capacity.

- Troubleshooting Step 2: Check Cost Constraints. An excessively low maximum allowable cost-per-ton will eliminate all alternatives. Temporarily increase the cost constraint by 25% to see if a solution appears.

- Troubleshooting Step 3: Simplify the problem. Run the simulation with a single disruption to verify base functionality. Then, iteratively add disruption events to identify the specific combination that causes failure.
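Troubleshooting Steps 1 and 3 can be automated with a maximum-flow feasibility test. The test removes the disrupted nodes, computes the largest flow the remaining network can carry from supply to the biorefinery, and compares it with demand, first for single disruptions and then for combinations. The sketch below uses a hand-written Edmonds-Karp routine so that it runs without dependencies; NetworkX's `maximum_flow_value` could be used instead. The graph layout, node names, and capacities are hypothetical, and cost constraints (Step 2) are not modeled.

```python
from collections import deque
from itertools import combinations


def max_flow(capacity, source, sink):
    """Edmonds-Karp maximum flow on a dict-of-dicts graph {u: {v: capacity}}."""
    residual = {}
    for u, nbrs in capacity.items():
        for v, c in nbrs.items():
            residual.setdefault(u, {})
            residual.setdefault(v, {})
            residual[u][v] = residual[u].get(v, 0) + c
            residual[v].setdefault(u, 0)  # reverse edge for flow cancellation
    flow = 0
    while True:
        # Breadth-first search for the shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual.get(u, {}).items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow
        # Bottleneck capacity along the path, then update residuals.
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(residual[u][v] for u, v in path)
        for u, v in path:
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
        flow += bottleneck


def remove_nodes(capacity, disrupted):
    """Copy of the graph with disrupted nodes and their edges removed."""
    return {u: {v: c for v, c in nbrs.items() if v not in disrupted}
            for u, nbrs in capacity.items() if u not in disrupted}


def feasible(capacity, source, sink, demand, disrupted=()):
    """Step 1: can the surviving network still carry `demand`?"""
    return max_flow(remove_nodes(capacity, set(disrupted)), source, sink) >= demand


def failing_combinations(capacity, source, sink, demand, candidates, max_k=2):
    """Step 3: test single disruptions first, then pairs, up to max_k at once."""
    return [combo
            for k in range(1, max_k + 1)
            for combo in combinations(candidates, k)
            if not feasible(capacity, source, sink, demand, combo)]


if __name__ == "__main__":
    # Hypothetical network: SRC is a super-source feeding two farm clusters.
    net = {
        "SRC": {"F1": 100, "F2": 100},
        "F1": {"H1": 100, "H2": 100},
        "F2": {"H1": 100, "H2": 100},
        "H1": {"BIO": 100},
        "H2": {"BIO": 100},
    }
    print(max_flow(net, "SRC", "BIO"))  # 200
    print(failing_combinations(net, "SRC", "BIO", demand=80,
                               candidates=["F1", "F2", "H1", "H2"]))
```

In this toy network every single disruption remains feasible, and only the pairs that remove both farms or both hubs fail, which is the kind of combination Step 3 is meant to isolate.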

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Supply Chain Resilience Experimentation

| Item / Solution | Function in Research |
|---|---|
| AnyLogistix or Simio Supply Chain Software | Primary platform for discrete-event simulation of the biofuel supply network, enabling disruption modeling and resilience KPI calculation. |
| Python (with Pandas, NetworkX libraries) | For custom scripting of rerouting algorithms, data analysis of logistics datasets, and automating buffer stock calculations. |
| Historical Feedstock Pricing & Logistics Datasets (e.g., USDA Ag Transport, EIA) | Provides real-world data for calibrating model parameters such as costs, transit times, and demand volatility. |
| Geographic Information System (GIS) Software (e.g., QGIS) | Maps physical supply network topology, analyzes geographic route alternatives, and visualizes disruption impacts. |
| Risk Assessment Matrix Template | A qualitative tool to prioritize which nodes to simulate for disruption based on failure probability and impact severity. |

Experimental Workflow Diagram

Title: Workflow for Biofuel Supply Chain Resilience Experimentation

Dynamic Rerouting Logic Diagram

Title: Dynamic Rerouting Protocol Decision Logic

Technical Support Center

FAQs & Troubleshooting for Biofuel Supply Chain Resilience Research

Q1: Our sensor data from feedstock quality monitoring is showing unexpected anomalies/spikes. How can we determine if this is a sensor fault, a cyber-intrusion (data manipulation), or a genuine biological anomaly?

- A: Follow this isolation protocol:

- Physical Layer Check: Perform manual calibration tests on the affected sensor nodes using standard reference materials.

- Network Layer Check: Isolate the sensor node from the network and dump raw, unprocessed data logs directly from its internal storage (if available). Compare to data received by the central SCADA/Historian.

- Data Integrity Check: Verify cryptographic hashes (e.g., SHA-256) of data files at source and destination if a secure data transfer protocol was implemented. Check for gaps in sequentially numbered data packets.

- Cross-Validation: Compare data with correlated metrics from independent sensors (e.g., if pH is anomalous, check correlated conductivity data).
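The data integrity and packet-gap checks can be scripted with Python's `hashlib`. This sketch assumes the source system provides a SHA-256 hex digest for each file and that data packets carry integer sequence numbers. The function names are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """SHA-256 hex digest of a file, read in chunks to handle large logs."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_source(received_path, source_hex_digest):
    """Compare the destination file's digest with the digest computed at source."""
    return sha256_of(received_path) == source_hex_digest.strip().lower()


def sequence_gaps(seq_numbers):
    """Missing ranges (first, last) in a run of integer packet sequence numbers."""
    seen = sorted(set(seq_numbers))
    return [(a + 1, b - 1) for a, b in zip(seen, seen[1:]) if b - a > 1]


if __name__ == "__main__":
    print(sequence_gaps([1, 2, 3, 6, 7, 9]))  # [(4, 5), (8, 8)]
```

A digest mismatch combined with no sequence gaps points toward modification in transit or at rest rather than packet loss, which is worth escalating before attributing the anomaly to the sensor or to the biology.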

Q2: Our automated bioreactor control system (ICS/SCADA) is executing commands erratically. What are the immediate containment steps?

- A: Execute the following Incident Response (IR) workflow:

- Containment: Immediately switch critical bioreactor processes to manual, local control mode. Disconnect the affected Operational Technology (OT) network segment from the corporate IT network.

- Forensic Imaging: Create a bit-for-bit forensic image of the engineering workstation and PLC (programmable logic controller) programming terminals before any reboots.

- Log Aggregation: Secure logs from all devices: Windows event logs from HMIs, audit trails from the SCADA software, and network traffic logs (PCAP) from OT-aware firewalls.

Q3: A third-party lab's genomic sequencing data for a novel feedstock yeast strain appears corrupted. How do we verify data integrity and provenance?

- A: Implement a data receipt protocol:

- Provenance Verification: Request the full metadata, including sequencer model, run ID, sample preparation protocol hash, and a digital signature from the lab that can be verified against its certificate issued by a Certificate Authority.

- File Integrity Check: Re-calculate the checksum (e.g., MD5, SHA-1) of the received FASTQ files and compare it to the checksum provided by the lab at the time of transfer.