Optimizing Biomass Supply Chains: Advanced Strategies to Overcome Feedstock Variability for Renewable Energy and Bio-Based Products

Abstract

This article provides a comprehensive analysis of strategies to optimize biomass supply chains (BMSCs) against the critical challenge of feedstock variability. Tailored for researchers and professionals in bioenergy and sustainable chemistry, it explores the foundational impact of spatial and temporal fluctuations in biomass yield and quality on production costs and output consistency. The content delves into advanced methodological approaches, including Mixed Integer Linear Programming (MILP) and hybrid AI models, for network design and strategic planning. It further offers practical troubleshooting and optimization techniques, such as flexible preprocessing depot networks and process intensification, and validates these solutions through comparative analysis of algorithms and real-world case studies, establishing a robust framework for building resilient and cost-effective biomass supply systems.

Understanding Feedstock Variability: The Core Challenge in Biomass Supply Chains

Frequently Asked Questions (FAQs)

FAQ 1: What are the primary sources of biomass feedstock variability? Biomass variability stems from multiple sources, which can be categorized as follows [1]:

- Source and Type: Innate differences exist between feedstock types (e.g., woody vs. herbaceous). For instance, ash content can increase by an order of magnitude from woody (~0.5%) to herbaceous (~5%) feedstocks, while lignin content drops by about half [1].

- Environmental Factors: Weather conditions, soil chemistry, and drought stress significantly impact yield and composition. For example, water stress can reduce crop yields by up to 48% and alter structural carbohydrate content [2] [3].

- Agricultural Practices: Harvest timing, method (e.g., single-pass vs. multi-pass), and storage conditions introduce variability. Multi-pass harvesting, for instance, increases ash contamination from soil compared to single-pass methods [4].

- Anatomical Fractions: Different parts of the same plant (e.g., leaves, stalks, cobs) have distinct physical and chemical properties, leading to variability when fractions are mixed [1].

FAQ 2: How does feedstock variability impact different bio-conversion processes? The impact of variability is highly dependent on the conversion pathway, as each process is sensitive to different biomass properties [5] [1].

Table 1: Impact of Feedstock Variability on Conversion Processes

| Conversion Process | Key Sensitive Parameters | Primary Impacts |
|---|---|---|
| Fermentation (Biochemical) | Structural carbohydrate (glucan, xylan) content; presence of inhibitors (e.g., lignin degradation products) [5] [1]. | Directly affects theoretical sugar and ethanol yield; inhibitors can deactivate enzymes or microbes [2] [1]. |
| Pyrolysis (Thermochemical) | Ash content (especially alkali metals), lignin content [5] [1]. | High ash reduces bio-oil yield and quality; can cause reactor fouling and catalyst poisoning [4] [1]. Lignin increases oil yield [1]. |
| Hydrothermal Liquefaction (HTL) | Ash content, moisture content, protein and lipid content [5]. | Ash and specific inorganics can affect biocrude yield and quality [5]. |
| Direct Combustion | Moisture content, ash content and composition (slagging elements like K, Cl) [1]. | Reduces combustion efficiency; increases slagging, fouling, and equipment corrosion [5] [6]. |

FAQ 3: What strategies can mitigate the risks associated with feedstock variability in the supply chain? Several strategic and technological approaches can be employed to manage variability [2] [4] [7]:

- Advanced Supply Chain Design: Implementing a network of distributed biomass preprocessing depots and centralized terminals. This system allows for blending different feedstocks to achieve a more consistent quality, emulating the grain commodity system [4].

- Selective Harvesting and Fractionation: Separating anatomical fractions (e.g., cob from leaf) during or after harvest to isolate high-ash components and create more homogeneous feedstock streams [3].

- Real-Time Monitoring and Machine Learning: Using near-infrared (NIR) spectroscopy for rapid quality assessment and machine learning models to predict supply, optimize logistics, and control conversion processes based on feedstock characteristics [7] [6].

- Incorporating Temporal Data in Planning: Using multi-year historical data on drought indices and yield during supply chain optimization to make biorefinery location and logistics decisions more resilient to climate variability [2].

Troubleshooting Guides

Problem: Inconsistent Conversion Yields in Biochemical Processing

Potential Cause 1: High Variability in Structural Carbohydrate Content

Fluctuations in cellulose and hemicellulose content directly affect the maximum theoretical sugar yield.

- Diagnosis: Conduct detailed compositional analysis (e.g., using NREL/TP-510-42618 standard method) on incoming feedstock batches over time. A standard deviation of more than 2-3% (absolute) in glucan content is a strong indicator of problematic variability [2] [5].

- Solution:

- Implement Feedstock Blending: Mix high-carbohydrate and low-carbohydrate batches to achieve a more consistent average composition [4].

- Adjust Pre-treatment Severity: Use a real-time monitoring loop where compositional data informs pre-treatment conditions (e.g., temperature, acid concentration) to optimize sugar release for each batch [5].
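The diagnosis and blending steps above come down to two small calculations: a standard-deviation check on batch glucan values and a two-batch mixing ratio. The minimal sketch below makes three assumptions. Glucan is reported as percent of dry mass. A 2.5-point threshold (the midpoint of the 2-3% range quoted in the diagnosis) is acceptable. Composition mixes linearly by dry mass. The function names and batch values are hypothetical.

```python
from statistics import stdev

# Assumed threshold: midpoint of the 2-3 percentage-point range in the diagnosis step.
GLUCAN_SD_THRESHOLD = 2.5


def glucan_variability(glucan_pct, threshold=GLUCAN_SD_THRESHOLD):
    """Return the sample standard deviation of batch glucan (% dry basis)
    and whether it exceeds the variability threshold."""
    sd = stdev(glucan_pct)
    return sd, sd > threshold


def high_batch_fraction(high_pct, low_pct, target_pct):
    """Dry-mass fraction of the high-carbohydrate batch needed for a two-batch
    blend to reach target_pct, assuming composition mixes linearly by dry mass."""
    if not low_pct <= target_pct <= high_pct:
        raise ValueError("target must lie between the two batch compositions")
    if high_pct == low_pct:
        return 1.0  # both batches are already at the target
    return (target_pct - low_pct) / (high_pct - low_pct)


if __name__ == "__main__":
    batches = [38.1, 33.0, 36.5, 31.2, 37.8]  # hypothetical glucan values, % dry basis
    sd, flagged = glucan_variability(batches)
    print(f"glucan SD = {sd:.2f} points, flagged = {flagged}")
    print(f"high-batch fraction for a 35% blend: {high_batch_fraction(38.0, 32.0, 35.0):.2f}")
```

In practice, the blend fraction would be recomputed whenever new compositional data arrive for either batch.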

Potential Cause 2: High Ash Content, Particularly Soil-Derived Inorganics

Ash, especially silica and alkali metals, acts as a contaminant that can abrade equipment, inhibit enzymes, and increase waste [5] [3].

- Diagnosis: Measure ash content (via standard ASTM E1755) and perform X-ray fluorescence (XRF) to determine silica (SiO₂) levels. Ash content >5% and significant silica indicate high soil contamination [1].

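- Solution:

- Clean and Fractionate the Feedstock: Separate high-ash anatomical fractions (e.g., leaf from cob) and remove soil-derived fines before conversion [3].

- Review Harvest Methods: Where feasible, favor single-pass harvesting, which introduces less soil-derived ash than multi-pass methods [4].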

Problem: Handling and Flowability Issues in Pre-processing Equipment

Potential Cause: High Biomass Cohesion Leading to Hopper Bridging and Clogging

High ash and moisture content have been shown to increase the cohesive strength of biomass particles, making the material difficult to handle [3].

- Diagnosis: Monitor equipment for frequent clogging, especially in hoppers and conveyors. Measure the bulk density and ash content of the material causing the issue.

- Solution:

- Control Moisture: Ensure biomass is dried to a consistent moisture level (e.g., below 15%) to reduce cohesion [1].

- Reduce Ash: As above, implement cleaning and fractionation to lower overall ash content [3].

- Equipment Modification: Install mechanical agitators or vibrators in hoppers to disrupt bridges and ensure continuous flow.

Experimental Protocol: Assessing Spatial and Temporal Variability

This protocol outlines a methodology to quantify spatial (location-to-location) and temporal (year-to-year) variability in biomass yield and quality, crucial for robust supply chain planning [2].

1. Objective: To characterize the spatial and temporal variability of biomass yield and key quality attributes (e.g., carbohydrate and ash content) over a multi-year period within a target supply region.

2. Materials and Equipment

- Biomass Samples: Representative samples (e.g., corn stover, switchgrass) collected from pre-defined counties or fields over multiple harvest seasons.

- Geospatial Data: U.S. Drought Monitor data or similar drought indices (e.g., DSCI - Drought Severity and Coverage Index) for the supply region [2].

- Lab Equipment:

- Forced-air oven and balance for moisture content (ASTM E871-82).

- Analytical balance and muffle furnace for ash content (ASTM E1755-01).

- Fiber analyzer (e.g., ANKOM2000) for detergent fiber analysis, or an HPLC system for structural carbohydrate analysis following NREL/TP-510-42618.

3. Step-by-Step Procedure

Step 1: Experimental Design and Data Collection

- Define Supply Region: Select a target supply region (e.g., 100 counties in Kansas, Nebraska, and Colorado as in a cited study [2]).

- Collect Temporal Climate Data: Obtain historical (e.g., 10-year) drought index (DSCI) data for the growing season in each county from the U.S. Drought Monitor [2].

- Sample Collection: Annually collect biomass samples from fixed locations within the region. Record the precise location, harvest date, and harvest method.

Step 2: Sample Preparation and Analysis

- Prepare biomass samples by drying and milling to a consistent particle size (e.g., 2 mm).

- Analyze each sample for:

- Moisture Content: Dry a sub-sample at 105°C until constant weight.

- Compositional Analysis: Determine the percentage of glucan, xylan, and acid-insoluble lignin using standard laboratory procedures (e.g., NREL/TP-510-42618) [5].

- Ash Content: Incinerate a sub-sample at 575°C and weigh the residual ash [1].
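The moisture and ash measurements above reduce to a few gravimetric ratios. This sketch reports ash on an oven-dry basis. It computes the oven-dry weight (ODW) of the ash sub-sample from its as-weighed mass and the total solids measured at 105°C. That mirrors the convention in NREL's laboratory procedures, but the function names and sample weights here are hypothetical.

```python
def total_solids_pct(wet_g: float, dry_g: float) -> float:
    """Total solids (%) of a sub-sample dried at 105 °C to constant weight."""
    return dry_g / wet_g * 100.0


def ash_pct_dry_basis(crucible_g, crucible_plus_ash_g, sample_g, total_solids):
    """Ash (%) relative to the oven-dry weight (ODW) of the sample."""
    odw_g = sample_g * total_solids / 100.0  # convert as-weighed mass to dry mass
    return (crucible_plus_ash_g - crucible_g) / odw_g * 100.0


ts = total_solids_pct(wet_g=2.000, dry_g=1.860)         # hypothetical weights
moisture = 100.0 - ts                                   # wet-basis moisture, %
ash = ash_pct_dry_basis(20.0000, 20.0450, 0.5000, ts)   # residue after 575 °C ignition
print(f"moisture {moisture:.1f}%, ash {ash:.2f}% (dry basis)")
```

Reporting ash on a dry basis keeps batches comparable even when they arrive at different moisture levels.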

Step 3: Data Analysis and Modeling

- Statistical Analysis: For each year and location, calculate the average, standard deviation, and range for yield, carbohydrate content, and ash content.

- Correlation with Climate Data: Perform regression analysis to correlate biomass yield and quality parameters (e.g., carbohydrate content) with the drought index (DSCI) data [2].

- Supply Chain Modeling: Input the multi-year variability data into a biofuel supply chain optimization model to assess the impact on long-term feedstock cost and biorefinery viability [2].
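The statistical and correlation steps can be prototyped with the Python standard library before moving to a full statistics package. The minimal sketch below has three parts. It groups records by location, which gives the temporal spread at each site. It groups records by year, which gives the spatial spread within each season. It fits an ordinary least-squares line of glucan against DSCI. The record layout and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(records, attribute, by):
    """Mean, sample SD and range of `attribute`, grouped by 'location'
    (temporal spread at each site) or by 'year' (spatial spread per season)."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[by]].append(rec[attribute])
    return {
        key: {
            "mean": mean(vals),
            "sd": stdev(vals) if len(vals) > 1 else 0.0,
            "range": max(vals) - min(vals),
        }
        for key, vals in groups.items()
    }


def ols(x, y):
    """Ordinary least squares y = a + b*x; returns (a, b, r_squared)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    r2 = 1.0 - ss_res / ss_tot if ss_tot else 1.0
    return a, b, r2


records = [  # hypothetical county-year observations
    {"location": "A", "year": 2011, "dsci": 0, "glucan": 38.0},
    {"location": "A", "year": 2012, "dsci": 300, "glucan": 35.0},
    {"location": "B", "year": 2011, "dsci": 100, "glucan": 37.0},
    {"location": "B", "year": 2012, "dsci": 200, "glucan": 36.0},
]
by_site = summarize(records, "glucan", by="location")
a, b, r2 = ols([r["dsci"] for r in records], [r["glucan"] for r in records])
print(by_site, f"glucan = {a:.2f} + {b:.4f} * DSCI (R^2 = {r2:.2f})")
```

A negative slope, as in this toy data, would indicate lower carbohydrate content in drier seasons.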

The Researcher's Toolkit: Key Reagents & Materials

Table 2: Essential Research Reagents and Materials for Feedstock Variability Analysis

| Item Name | Function / Application | Technical Notes |
|---|---|---|
| Standard Reference Biomaterials | Calibrate analytical equipment (e.g., NIR spectrometers); serve as controls in compositional analysis. | Ensure they cover a range of relevant compositions (e.g., low/high ash, lignin) [1]. |
| NIR Spectrometer & Calibrations | Rapid, non-destructive prediction of biomass composition (moisture, ash, carbohydrates). | Must be calibrated against primary wet chemistry methods for accurate results [6]. |
| Laboratory Reactors (e.g., Parr) | Simulate pre-treatment and conversion processes (pyrolysis, HTL) at bench scale to test feedstock performance. | Allow precise control of temperature, pressure, and atmosphere [5]. |
| U.S. Drought Monitor Data (DSCI) | Quantitative spatial-temporal data on drought severity for correlation with yield and quality studies. | A key external dataset for understanding environmental drivers of variability [2]. |
| Analytical Standards for HPLC | Quantify sugar monomers (glucose, xylose) and degradation products (e.g., furfural, HMF) after hydrolysis. | Essential for accurate compositional analysis and inhibitor detection [5] [1]. |

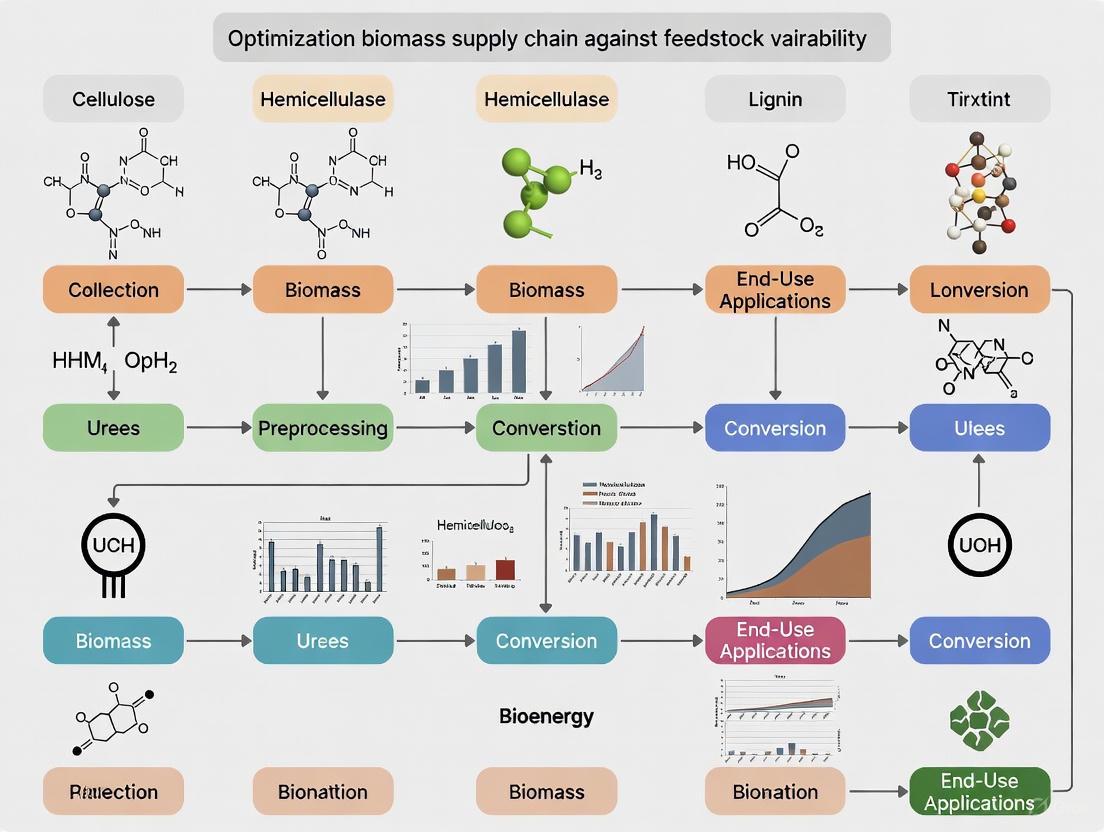

Visualizing the Supply Chain Optimization Framework

The following diagram illustrates an integrated framework for optimizing the biomass supply chain against feedstock variability, incorporating key mitigation strategies.

The Impact of Spatial and Temporal Factors on Biomass Availability

Frequently Asked Questions (FAQs)

How do spatial factors influence biomass availability for my research? Biomass yield and chemical composition are not uniform across a supply region. Spatial variability is influenced by local factors such as soil characteristics, landscape topography, and historical field management practices [2]. This means that biomass sourced from different geographic locations, even within the same general area, can have significantly different quantities and qualities, directly impacting the reproducibility and scalability of your experiments [2].

Why is temporal variability a critical consideration in biomass supply chain planning? Temporal variability refers to changes in biomass yield and quality over time, primarily driven by inter-annual weather patterns and the increasing frequency of extreme events like drought [2]. For instance, a nationwide drought in 2012 caused a 27% yield reduction for corn grain and significantly altered biomass carbohydrate content [2]. Ignoring this multi-year variability can lead to a significant underestimation of long-term biomass supply costs and disrupt steady biorefinery operations [2].

What is the primary climatic factor affecting biomass yield and quality? Drought is a primary factor. Water stress caused by low precipitation can reduce crop yields by up to 48% and shorten crop life cycles [2]. Furthermore, drought stress alters the plant's chemical composition, often leading to lower levels of structural sugars like glucan and xylan, which are critical for biofuel conversion processes [2].

My experiments are sensitive to feedstock quality. How variable can biomass quality be? Variability can be substantial. Studies on corn stover have shown that carbohydrate content can fluctuate significantly from year to year, closely aligning with drought indices [2]. Lower carbohydrate content and higher ash content negatively impact theoretical ethanol yield and increase operational costs by causing downtime and equipment wear during pre-processing [2].

What strategies can I use to mitigate supply risks related to this variability? Advanced supply chain systems, such as using distributed biomass processing depots instead of a single centralized facility, can reduce operational risk by 17.5% [2]. Optimizing the supply chain design by incorporating long-term spatial and temporal data on yield and quality makes the system more resilient to disruptions caused by climatic conditions [2].

Troubleshooting Guides

Problem 1: Inconsistent Experimental Results Due to Variable Biomass Feedstock

- Symptoms: High fluctuation in conversion process yields (e.g., ethanol production); unpredictable levels of inhibitors or ash affecting catalyst performance; difficulty replicating experiments over time.

- Underlying Cause: The biomass feedstock used in experiments has high spatial and temporal variability in its chemical composition (e.g., carbohydrate and lignin content) and physical properties [2].

- Solution:

- Characterize Feedstock Thoroughly: For every batch of biomass received, perform compositional analysis (cellulose, hemicellulose, and lignin) and proximate analysis (e.g., moisture and ash content) before initiating experiments [2].

- Implement Blending Strategies: Source biomass from multiple, distinct geographic locations within your supply shed and create blended feedstock batches. This can help average out spatial variability and create a more consistent material [2].

- Adopt Dynamic Experimental Protocols: Develop flexible experimental protocols that can account for a range of biomass qualities, rather than being fixed to a single feedstock specification.
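Suppose each source's composition varies independently. Then the standard deviation of a dry-mass-weighted blend is sqrt(Σ wᵢ² σᵢ²), so blending n equally weighted, equally variable sources divides the SD by √n. That is the averaging effect the blending strategy relies on. Drought often hits neighboring locations in the same year, which makes real sources positively correlated, so the actual reduction will usually be smaller. The figures below are hypothetical.

```python
import math


def _check_fractions(fractions):
    """Blend fractions are dry-mass shares and must sum to 1."""
    if abs(sum(fractions) - 1.0) > 1e-6:
        raise ValueError("blend fractions must sum to 1")


def blend_mean(fractions, means):
    """Dry-mass-weighted mean of a property (e.g., glucan %) across sources."""
    _check_fractions(fractions)
    return sum(w * m for w, m in zip(fractions, means))


def blend_sd(fractions, sds):
    """SD of the blended property, assuming sources vary independently:
    sqrt(sum(w_i**2 * sd_i**2)). Correlated sources would need covariance terms."""
    _check_fractions(fractions)
    return math.sqrt(sum((w * s) ** 2 for w, s in zip(fractions, sds)))


fractions = [0.25, 0.25, 0.25, 0.25]  # four locations, equal dry-mass shares
print(blend_mean(fractions, [36.0, 34.0, 38.0, 32.0]), blend_sd(fractions, [3.0] * 4))
```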

Problem 2: Biomass Supply Shortfall for Pilot-Scale Research

- Symptoms: Inability to secure sufficient biomass to run continuous experiments; supply interruptions.

- Underlying Cause: Unanticipated yield losses due to temporal factors, most notably extreme weather events like drought, which can reduce biomass availability [2].

- Solution:

- Incorporate Temporal Risk Analysis: During project planning, analyze historical drought index data (e.g., from the U.S. Drought Monitor) and biomass yield data for your supply region over at least a 10-year period to understand worst-case scenarios [2].

- Diversify Supply Shed: Establish contracts with suppliers across a wider geographic area to minimize the risk that a localized drought affects your entire supply [2].

- Consider Buffer Stock: Maintain a strategic reserve of biomass to buffer against short-term supply disruptions.
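The multi-year record can also be used to size the buffer stock. The sketch below borrows the sequent-peak method from reservoir design. It carries the cumulative deficit forward, resets it to zero once surpluses refill the reserve, and keeps the largest value. It assumes annual supply and demand in dry tons, that surplus years can fully replenish the reserve, and a flat fractional dry-matter loss during storage. All numbers are hypothetical.

```python
def buffer_stock_dry_tons(annual_supply, annual_demand, storage_loss=0.0):
    """Sequent-peak storage requirement: the largest cumulative shortfall in the
    record, inflated for an assumed fractional dry-matter loss in storage."""
    if not 0.0 <= storage_loss < 1.0:
        raise ValueError("storage_loss must be in [0, 1)")
    deficit, worst = 0.0, 0.0
    for supply in annual_supply:
        # Deficit grows in short years and is drawn down (not below zero) in surplus years.
        deficit = max(0.0, deficit + annual_demand - supply)
        worst = max(worst, deficit)
    return worst / (1.0 - storage_loss)


supply = [120_000, 95_000, 130_000, 70_000, 80_000, 110_000]  # hypothetical dry t/yr
print(buffer_stock_dry_tons(supply, 100_000, storage_loss=0.05))
```

In this toy record, the two consecutive drought years require a larger reserve than the worst single year alone would suggest.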

Summarized Quantitative Data

Table 1: Impact of Drought Stress on Biomass Yield and Composition

| Biomass Type | Maximum Yield Reduction | Carbohydrate Change | Key Study Findings |
|---|---|---|---|
| Corn Grain | 27% [2] | Not Specified | $30 billion in losses during 2012 U.S. drought [2]. |
| General Crops (Meta-analysis) | Up to 48% [2] | Starch reduced by up to 60% [2] | Harvest index reduced by 28%; life cycles shortened [2]. |
| Miscanthus, Switchgrass, Corn Stover | Significant losses [2] | Lower structural sugars (glucan, xylan) [2] | Increased extractive components and soluble sugars; potential for lower recalcitrance [2]. |

Table 2: Economic and Operational Impact of Biomass Variability

| Factor | Impact | Management Strategy |
|---|---|---|
| Ignoring Spatio-temporal Variability | Significantly underestimates long-term delivery cost [2]. | Use multi-year optimization modeling for supply chain planning [2]. |
| Low Biomass Quality | Increases operational cost; causes downtime and equipment wear; decreases conversion yield [2]. | Incorporate quality parameters into supply chain optimization [2]. |
| Supply Chain Configuration | Switching to a distributed supply system can reduce operational risk by 17.5% [2]. | Evaluate centralized vs. distributed depot models [2]. |

Experimental Protocols

Protocol 1: Assessing Spatial and Temporal Variability in Biomass Supply Sheds

Objective: To quantify the spatial and temporal variability in biomass yield and quality within a defined geographic region to inform stable supply chain design [2] [8].

Methodology:

- Define the Supply Region: Delineate the geographic boundary of your biomass supply shed (e.g., a 100-mile radius around a potential research facility).

- Data Collection:

- Yield Data: Collect historical data on biomass yield (e.g., corn stover) at a county or finer spatial resolution for a minimum of 10 years from agricultural statistical yearbooks or satellite-derived datasets [2] [8].

- Climate Data: Obtain corresponding historical data for key climatic variables, with a focus on drought indices like the Drought Severity and Coverage Index (DSCI) during the growing season [2].

- Quality Data: Where available, gather data on biomass chemical composition (e.g., carbohydrate, ash content) linked to the same spatial and temporal scales [2].

- GIS Integration and Analysis: Use a Geographic Information System (GIS) platform to integrate the statistical data with spatial layers (e.g., land cover, soil type) [8]. Satellite-derived Net Primary Productivity (NPP) estimates can be used to disaggregate statistical data and refine the spatial distribution of biomass potential [8].

- Statistical Modeling: Apply time-series analysis (e.g., ARIMA models) to understand trends and predict future biomass availability [8]. Use correlation analysis (e.g., Gray Correlation Analysis) to assess the influence of various drivers like drought on yield and quality [8].
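Grey relational analysis ranks candidate drivers by how closely the shape of each driver's time series tracks a reference series such as yield. The sketch below is a minimal version of Deng's formulation. It uses min-max normalization and the customary distinguishing coefficient ρ = 0.5. The method measures similarity of shape rather than sign, so a driver expected to lower yield (the drought index) is negated before normalization. The series values are hypothetical.

```python
def minmax(seq):
    """Min-max normalize a sequence to [0, 1]."""
    lo, hi = min(seq), max(seq)
    if hi == lo:
        return [0.0 for _ in seq]
    return [(v - lo) / (hi - lo) for v in seq]


def grey_relational_grades(reference, factors, rho=0.5):
    """Grey relational grade of each factor series against the reference series.
    rho is the distinguishing coefficient (0.5 is the customary choice)."""
    ref = minmax(reference)
    deltas = {
        name: [abs(r - f) for r, f in zip(ref, minmax(series))]
        for name, series in factors.items()
    }
    all_d = [d for ds in deltas.values() for d in ds]
    d_min, d_max = min(all_d), max(all_d)
    if d_max == 0:
        return {name: 1.0 for name in factors}  # every series matches exactly
    return {
        name: sum((d_min + rho * d_max) / (d + rho * d_max) for d in ds) / len(ds)
        for name, ds in deltas.items()
    }


yield_t_ha = [8.0, 6.0, 9.0, 7.0]        # hypothetical annual yields
dsci = [100, 400, 0, 250]                # hypothetical growing-season DSCI
precip_mm = [500, 520, 480, 510]         # hypothetical precipitation
grades = grey_relational_grades(
    yield_t_ha, {"drought (inverted)": [-v for v in dsci], "precipitation": precip_mm}
)
print(grades)
```

A higher grade marks the driver whose year-to-year pattern best matches yield. A grade alone does not establish causation.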

Protocol 2: Incorporating Variability into Supply Chain Optimization Models

Objective: To develop a resilient biomass supply chain strategy that accounts for fluctuations in feedstock availability and quality.

Methodology:

- Model Framework: Develop a multi-period or multi-stage stochastic optimization model. The model's objective is typically to minimize total supply chain cost while meeting biomass demand [2].

- Incorporate Stochastic Parameters: Model key uncertain parameters—such as biomass yield, quality (carbohydrate content), and drought index—as random variables with probability distributions derived from the historical data collected in Protocol 1 [2].

- Scenario Generation: Use methods like Monte Carlo simulation to generate a large number of possible future scenarios representing different combinations of yield and quality outcomes [2].

- Optimization and Decision-Making: Solve the optimization model to determine the optimal supply chain configuration (e.g., biorefinery location, storage depot locations, logistics) that performs robustly across the range of generated scenarios [2].
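The scenario-generation and optimization steps can be shown with a deliberately small two-stage example. The first stage chooses among candidate biorefinery sites. The second stage buys feedstock in each Monte Carlo scenario of regional supply. Picking the site with the lowest sample-average cost is a sample average approximation of the stochastic program. The normal supply distributions, site data, and shortfall penalty are all hypothetical. Enumerating sites stands in for the mixed-integer solver a realistic model would need.

```python
import random
from statistics import mean


def sample_scenarios(n, supply_mean, supply_sd, seed=1):
    """Draw n Monte Carlo scenarios of available dry tons per region
    (normal distributions truncated at zero; an illustrative assumption)."""
    rng = random.Random(seed)
    return [
        {r: max(0.0, rng.gauss(supply_mean[r], supply_sd[r])) for r in supply_mean}
        for _ in range(n)
    ]


def recourse_cost(haul_cost, supply, demand, shortfall_penalty):
    """Second-stage cost in one scenario: buy from the cheapest regions first
    (optimal for a single plant with linear costs) and penalize unmet demand."""
    cost, remaining = 0.0, demand
    for region, unit_cost in sorted(haul_cost.items(), key=lambda kv: kv[1]):
        take = min(remaining, supply[region])
        cost += take * unit_cost
        remaining -= take
    return cost + remaining * shortfall_penalty


def choose_site(sites, scenarios, demand, shortfall_penalty):
    """Sample average approximation: fixed cost plus mean recourse cost per site."""
    expected = {
        name: spec["fixed"]
        + mean(recourse_cost(spec["haul"], s, demand, shortfall_penalty) for s in scenarios)
        for name, spec in sites.items()
    }
    return min(expected, key=expected.get), expected


if __name__ == "__main__":
    scenarios = sample_scenarios(
        2_000,
        supply_mean={"north": 60_000, "south": 50_000, "east": 40_000},
        supply_sd={"north": 20_000, "south": 5_000, "east": 5_000},
    )
    sites = {  # hypothetical fixed costs ($) and haul costs ($/dry t)
        "A": {"fixed": 1.0e6, "haul": {"north": 10, "south": 25, "east": 30}},
        "B": {"fixed": 1.2e6, "haul": {"north": 30, "south": 12, "east": 14}},
    }
    best, costs = choose_site(sites, scenarios, demand=80_000, shortfall_penalty=200)
    print(best, {k: round(v) for k, v in costs.items()})
```

In this setup, a site near a high-mean but volatile region can lose to one near steadier regions once shortfall penalties are averaged in. That trade-off is what the scenario-based model is meant to expose.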

Biomass Variability Analysis Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Materials and Tools for Biomass Supply Chain Research

| Item | Function in Research |
|---|---|
| Geographic Information System (GIS) | A spatial analysis platform used to map, analyze, and model the geographic distribution of biomass resources, incorporating layers like yield data, land cover, and transportation networks [8]. |
| U.S. Drought Monitor (DSCI Data) | Provides standardized weekly drought index data at the county level, which is a primary input for correlating and predicting temporal variability in biomass yield and quality [2]. |
| Statistical Software (R, Python) | Used for performing time-series analysis (ARIMA), stochastic optimization scenario generation (Monte Carlo simulation), and correlation analysis (Gray Correlation) on biomass data [2]. |
| Stochastic Optimization Model | A computational model that incorporates uncertainty (e.g., in yield) to design supply chains that are resilient to spatial and temporal variability, minimizing cost and risk [2]. |
| Standard Biomass Analytical Methods | Laboratory protocols (e.g., NREL methods) for determining the chemical composition of biomass (carbohydrates, lignin, ash) to quantify quality variability and its impact on conversion processes [2]. |

Frequently Asked Questions: Biomass Supply Chain Resilience

What are the primary climate-related hazards threatening biomass supply chains? Climate change introduces multiple hazards, including increased mean temperatures, changes in precipitation patterns, heightened climate variability, and more frequent extreme weather events like floods, droughts, and storms [9]. These hazards can impact every stage of the supply chain, from feedstock production to transportation and storage, primarily by disrupting supply, damaging infrastructure, and reducing labor productivity [9].

Which biomass feedstock attributes are most critical for economic viability under climate uncertainty? Moisture content and spatial fragmentation are two dominant attributes. High moisture content significantly increases transportation costs and can reduce feedstock stability during storage, especially under hotter and more humid conditions [10]. Spatial fragmentation, where biomass resources are dispersed across a landscape, increases collection and transportation distances, complicating logistics and raising costs, particularly after disruptive events like wildfires, which can further fragment resources [10] [11].

What strategies can enhance the resilience of biomass supply chains to disruptions? Implementing a combination of proactive (pre-disruption) and reactive (post-disruption) strategies is key. Effective proactive strategies include:

- Multi-sourcing: Sourcing feedstock from multiple, geographically dispersed suppliers to avoid a single point of failure [12].

- Coverage distance policies: Pre-defining maximum distances between facilities and suppliers to ensure a distributed and robust network [12].

- Backup facility assignment: Identifying and contracting backup processing facilities that can be activated if a primary facility is disrupted [12].

How does climate change impact the logistical phase of the biomass supply chain? Higher temperatures and increased humidity can accelerate the degradation of biomass during storage, leading to dry matter losses and reduced quality [9]. Extreme weather events can damage transportation infrastructure (e.g., roads, bridges) and directly disrupt transport operations, while also creating less predictable trade patterns that strain logistics systems [9] [12]. Heat stress can also affect the health and productivity of labor involved in transportation and handling [9].

Troubleshooting Guides for Common Scenarios

Scenario 1: Managing Feedstock Supply Disruptions from Wildfires

Problem: A major wildfire has impacted a key sourcing region, causing partial and complete disruptions in feedstock availability from multiple suppliers.

Experimental Protocol for Assessment & Mitigation:

Rapid Geospatial Impact Assessment:

- Objective: To quantify the available biomass in unaffected areas and identify new potential sourcing zones.

- Methodology: Utilize GIS software and satellite imagery (e.g., Landsat, Sentinel) to map the burn severity and extent. Overlay this with data on pre-fire biomass resource density and land ownership. Calculate the remaining accessible biomass volume in the region.

- Data Required: Burn severity indices (e.g., dNBR), pre-fire forest inventory data, land cover maps, road network data.
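Two steps of the geospatial assessment can be sketched per pixel: computing burn severity and tallying the biomass that remains accessible. The Normalized Burn Ratio is NBR = (NIR − SWIR2)/(NIR + SWIR2). For Landsat 8/9 these are bands 5 and 7; for Sentinel-2 they are B8 or B8A and B12. dNBR is the pre-fire NBR minus the post-fire NBR. The severity breakpoints below approximate the commonly cited USGS (Key and Benson) scheme, which is often published scaled by 1000, and should be calibrated locally. Treating only unburned and low-severity pixels as harvestable is an illustrative assumption, as is the per-pixel biomass input.

```python
def nbr(nir: float, swir2: float) -> float:
    """Normalized Burn Ratio from NIR and SWIR2 surface reflectance."""
    return (nir - swir2) / (nir + swir2)


def dnbr(pre_nir, pre_swir2, post_nir, post_swir2):
    """Differenced NBR: pre-fire NBR minus post-fire NBR (unscaled)."""
    return nbr(pre_nir, pre_swir2) - nbr(post_nir, post_swir2)


def severity_class(d):
    """Approximate USGS-style dNBR breakpoints; calibrate locally before use.
    Values below 0.1, including negative (regrowth) values, count as unburned here."""
    if d < 0.1:
        return "unburned"
    if d < 0.27:
        return "low"
    if d < 0.44:
        return "moderate-low"
    if d < 0.66:
        return "moderate-high"
    return "high"


def accessible_biomass(pixels, usable=("unburned", "low")):
    """Sum pre-fire biomass (e.g., dry tons per pixel) over (dNBR, biomass) pixels
    whose severity class is assumed to remain harvestable."""
    return sum(b for d, b in pixels if severity_class(d) in usable)


pixels = [(0.05, 10.0), (0.2, 8.0), (0.5, 12.0), (0.9, 15.0)]  # hypothetical pixels
print(dnbr(0.4, 0.1, 0.2, 0.3), accessible_biomass(pixels))
```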

Supply Chain Model Re-optimization:

- Objective: To re-configure the supply network to meet biorefinery demand at the lowest possible cost post-disruption.

- Methodology: Input the new supply constraints and available backup suppliers into a Mixed-Integer Linear Programming (MILP) model of your supply chain. The model should re-optimize for total cost, determining the optimal quantities to transport from each remaining and backup supplier to the facility.

- Key Variables: Feedstock availability per supplier, transportation costs, facility demand, and capacity constraints.
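For a very small instance, the re-optimization can be solved exactly without a solver, which makes the model's logic easy to check. Each backup supplier carries a fixed activation cost, the binary decision in the MILP. The sketch enumerates every subset of backups and allocates demand cheapest-first. For one facility with linear delivered costs and supplier caps, cheapest-first allocation is optimal. The supplier data are hypothetical. A real network with several facilities or routes would need an actual MILP solver, since enumeration grows as 2ⁿ.

```python
from itertools import combinations


def allocate(suppliers, demand):
    """Cheapest-first allocation for a single facility with linear costs.
    suppliers: name -> (available_tons, delivered_cost_per_ton).
    Returns (cost, plan), or None if demand cannot be met."""
    plan, cost, remaining = {}, 0.0, demand
    for name, (avail, unit) in sorted(suppliers.items(), key=lambda kv: kv[1][1]):
        take = min(avail, remaining)
        if take > 0:
            plan[name] = take
            cost += take * unit
            remaining -= take
    if remaining > 1e-9:
        return None
    return cost, plan


def reoptimize(primary, backups, demand):
    """Exhaustive search over which backups to activate.
    backups: name -> (available_tons, cost_per_ton, activation_cost)."""
    best = None
    names = list(backups)
    for k in range(len(names) + 1):
        for subset in combinations(names, k):
            pool, fixed = dict(primary), 0.0
            for n in subset:
                avail, unit, activation = backups[n]
                pool[n] = (avail, unit)
                fixed += activation
            result = allocate(pool, demand)
            if result is not None and (best is None or fixed + result[0] < best[0]):
                best = (fixed + result[0], subset, result[1])
    return best


primary = {"S1": (30_000, 40.0), "S2": (10_000, 55.0)}  # hypothetical post-fire supply
backups = {"B1": (40_000, 60.0, 100_000.0), "B2": (25_000, 70.0, 20_000.0)}
print(reoptimize(primary, backups, demand=60_000))
```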

Implementation of Resilience Strategies:

- Action: Activate backup suppliers and adjust transportation logistics as per the optimized model.

- Action: If not already in place, begin negotiating multi-sourcing agreements with suppliers in diverse geographic regions to mitigate the impact of future, localized disasters [12].

Scenario 2: High Moisture Content in Feedstock Leading to Cost Overruns

Problem: Received biomass batches have consistently higher-than-specified moisture content, leading to increased transportation costs per unit of dry mass, potential spoilage during storage, and reduced conversion efficiency.

Experimental Protocol for Analysis & Correction:

Feedstock Attribute Analysis:

- Objective: To quantitatively determine the cost impact of high moisture content.

- Methodology: Weigh a representative sample of incoming feedstock upon delivery. Dry the sample in a calibrated oven at 105°C until a constant weight is achieved. Calculate the moisture content as (wet weight - dry weight) / wet weight * 100.

- Data Analysis: Re-calculate the effective cost per dry ton of feedstock, factoring in the paid price (based on wet weight) and the actual dry mass received. Model how this attribute affects the overall biofuel production cost, as high moisture is a dominant cost driver [10].
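The moisture calculation and the dry-ton cost correction above can be combined in a short helper. This sketch assumes that both the purchase price and the haulage charge are billed per wet ton. The prices and sample weights are hypothetical.

```python
def moisture_wet_basis(wet_g: float, dry_g: float) -> float:
    """Moisture content (%) on a wet basis: (wet - dry) / wet * 100."""
    return (wet_g - dry_g) / wet_g * 100.0


def cost_per_dry_ton(price_per_wet_ton: float, haul_per_wet_ton: float,
                     moisture_pct: float) -> float:
    """Delivered cost per dry ton when purchase and haulage are charged on wet weight."""
    dry_fraction = 1.0 - moisture_pct / 100.0
    return (price_per_wet_ton + haul_per_wet_ton) / dry_fraction


m = moisture_wet_basis(wet_g=500.0, dry_g=350.0)       # hypothetical oven-dry test
actual = cost_per_dry_ton(30.0, 12.0, m)               # as delivered
at_spec = cost_per_dry_ton(30.0, 12.0, 15.0)           # if delivered at a 15% spec
print(f"moisture {m:.1f}%: ${actual:.2f}/dry t vs ${at_spec:.2f}/dry t at spec")
```

The gap between the two figures is the per-dry-ton penalty of the excess water, which can be compared against the cost of covered storage or field drying.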

Logistics System Troubleshooting:

- Checkpoint: Review harvesting and collection timing. Is biomass being collected during or immediately after rainfall?

- Checkpoint: Assess on-site storage conditions. Is feedstock properly covered and ventilated to allow for passive drying?

- Checkpoint: Evaluate preprocessing options. Investigate the economic feasibility of deploying mobile pelletization or torrefaction units near the source to reduce moisture and densify the feedstock before long-haul transport [13].

The following workflow outlines the core experimental and decision-making process for managing these climate-related risks in a biomass supply chain.

Scenario 3: Facility Disruption Due to Extreme Weather Event

Problem: A key biorefinery or storage depot is temporarily incapacitated due to a flood, disrupting the entire downstream supply chain.

Experimental Protocol for Continuity Management:

Business Impact Analysis:

- Objective: To determine the production shortfall and prioritize critical operations.

- Methodology: Immediately assess the facility's downtime estimate. Calculate the lost production volume per day. Identify and communicate with the most critical customers (e.g., those with fixed contracts).

Activation of Backup Protocols:

- Objective: To re-route feedstock and activate alternative processing capacity.

- Methodology: Execute the pre-established backup assignment plan [12]. Contact the designated backup facility and negotiate capacity sharing. Re-route inbound and outbound logistics to the backup facility. Use the supply chain optimization model to re-calculate optimal transportation routes under the new network configuration.

Table 1: Quantitative Impact of Key Biomass Feedstock Attributes on Biorefinery Economics

| Attribute | Impact on Optimal Biorefinery Scale | Impact on Biofuel Production Cost | Key Risk Factor |
|---|---|---|---|
| Moisture Content | Varies with cost structure; high moisture penalizes larger scales [10] | Dominant cost driver; significantly increases transport cost per dry ton [10] | Increased under higher humidity and precipitation variability [9] |
| Spatial Fragmentation | Limits cost-competitive scale due to increased logistics cost [10] | Increases pre-processing and transportation costs [10] | Exacerbated by disruptive events like wildfires [11] |
| Resource Yield Density | Higher density enables larger, more cost-effective scales [10] | Reduces unit cost of collection and transport [10] | Threatened by climate-induced reductions in agricultural yields [9] |

Table 2: Resilience Strategies for Biomass Supply Chain Disruptions

| Strategy Type | Specific Tactic | Function | Implementation Consideration |
|---|---|---|---|
| Proactive (Pre-disruption) | Multi-sourcing [12] | Reduces reliance on a single supply basin, mitigating localized disruption impact. | Requires developing relationships with multiple suppliers; may involve slightly higher base costs. |
| Proactive (Pre-disruption) | Coverage Distance Policy [12] | Limits maximum distance to suppliers, creating a denser, more robust network. | Helps manage transportation costs and ensures quicker response times during disruptions. |
| Proactive (Pre-disruption) | Backup Facility Assignment [12] | Pre-identifies alternative processing facilities. | Requires pre-negotiated agreements and data sharing to ensure operational compatibility. |
| Reactive (Post-disruption) | Post-disruption Re-optimization | Re-routes material flows and re-allocates resources after a disruption occurs. | Dependent on having real-time data and agile modeling capabilities. |
| Reactive (Post-disruption) | Salvage Harvesting | Recovers value from biomass in fire-affected areas, aiding restoration [11]. | Logistically complex; requires careful assessment of wood quality and safety protocols. |

The Scientist's Toolkit: Key Research Reagent Solutions

| Tool / Solution | Function in Biomass Supply Chain Research | Relevance to Climate Risk |
|---|---|---|
| GIS (Geographic Information Systems) | Mapping and analyzing spatial data on biomass availability, logistics routes, and climate hazard exposure (e.g., wildfire risk maps) [10] [11]. | Critical for assessing exposure of supply chain infrastructure to climate hazards and planning resilient siting. |
| Mixed-Integer Linear Programming (MILP) Models | Optimizing the design and operation of the supply chain network for cost, efficiency, and resilience under uncertainty [12]. | Allows for scenario analysis to test how supply chains perform under various climate disruption scenarios. |
| Life Cycle Assessment (LCA) Software | Quantifying the environmental footprint of biofuel production, including greenhouse gas emissions [10]. | Essential for ensuring that resilience strategies do not inadvertently increase the carbon footprint of the final biofuel product. |
| Remote Sensing Data (Satellite Imagery) | Monitoring crop health, estimating yields, and assessing near-real-time impacts of extreme weather (e.g., drought, fire) on feedstock supply [11]. | Provides rapid, large-scale data for post-disruption impact assessment and feedstock availability forecasting. |
| Scenario Planning Frameworks | Developing and evaluating strategies against a wide range of possible climate futures, including low-probability, high-impact events [9]. | Helps build supply chains that are robust across different climate projections, not just a single forecast. |

Troubleshooting Guides & FAQs

Feedstock Quality and Preprocessing

Q: Our biorefinery is experiencing inconsistent sugar yields despite using the same pretreatment protocol. What could be causing this, and how can we mitigate it?

A: Inconsistent sugar yields are frequently a direct result of unmanaged feedstock variability. Key material attributes such as moisture content, ash content, and structural carbohydrate composition can vary significantly between and within biomass batches, directly impacting enzymatic hydrolysis efficiency [14] [2].

- Diagnosis & Solution:

- Analyze Feedstock Composition: Implement rapid analytical techniques (e.g., NIR spectroscopy) to monitor incoming biomass for key variability drivers like carbohydrate and ash content [15] [2].

- Adjust Preprocessing: For high ash content, consider employing air classification [15]. For high moisture content, assess the cost-benefit of additional drying steps, as moisture is a major logistics cost driver [10].

- Adapt Pretreatment Severity: For feedstocks with high innate recalcitrance, consider increasing pretreatment severity. Studies on Deacetylation and Mechanical Refining (DMR) have shown that higher deacetylation severity can mitigate the negative impacts of feedstock variability on sugar yields [14].
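The three diagnosis steps can be combined into a simple per-batch screening rule. The Python sketch below is illustrative only. The `Batch` fields, the function `route_batch`, and all thresholds are hypothetical. The ash and moisture limits (5% and 20% wet basis) echo ranges quoted elsewhere in this article, and lignin content stands in as a rough proxy for recalcitrance. Real limits should come from your own process data.

```python
from dataclasses import dataclass

@dataclass
class Batch:
    batch_id: str
    moisture_wb: float  # moisture, % wet basis
    ash_db: float       # ash, % dry basis
    lignin_db: float    # lignin, % dry basis (rough recalcitrance proxy)

# Hypothetical screening limits; calibrate against your own process data.
ASH_LIMIT = 5.0
MOISTURE_LIMIT = 20.0
LIGNIN_LIMIT = 20.0

def route_batch(batch: Batch) -> list:
    """Suggest preprocessing/pretreatment adjustments for one incoming batch."""
    actions = []
    if batch.ash_db > ASH_LIMIT:
        actions.append("air_classification")
    if batch.moisture_wb > MOISTURE_LIMIT:
        actions.append("drying")
    if batch.lignin_db > LIGNIN_LIMIT:
        actions.append("raise_pretreatment_severity")
    return actions or ["standard_protocol"]

for b in [Batch("A", 15.0, 4.2, 17.5), Batch("B", 28.0, 9.5, 18.0), Batch("C", 12.0, 6.1, 22.4)]:
    print(b.batch_id, route_batch(b))
```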

Q: What is the single most significant feedstock attribute impacting production costs, and how can it be managed?

A: Quantitative analyses identify moisture content as a dominant cost driver, significantly impacting transportation expenses and feedstock cost competitiveness. Furthermore, spatial fragmentation of biomass resources increases logistics costs and sourcing distances [10].

- Diagnosis & Solution:

- Implement Moisture Control: Establish moisture specifications for incoming biomass and invest in covered storage or pre-drying protocols at collection points to reduce weight and transportation costs [10].

- Optimize Sourcing Strategy: Use geospatial modeling to account for biomass resource density. Sourcing from areas with higher yield density can dramatically reduce logistics costs compared to fragmented agricultural landscapes [10].
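The effect of moisture on haul cost comes down to simple arithmetic: every dry ton arrives carrying 1/(1 - MC) wet tons, where MC is the wet-basis moisture fraction. Below is a minimal sketch. It assumes a weight-limited truck billed per wet ton-kilometre, and the rate, distance, and function name are hypothetical.

```python
def haul_cost_per_dry_t(distance_km, moisture_wb, rate_per_wet_t_km=0.15):
    """Haul cost per dry ton for a weight-limited truck billed per wet ton-km.

    moisture_wb is the wet-basis moisture fraction (0.2 means 20%).
    """
    if not 0.0 <= moisture_wb < 1.0:
        raise ValueError("moisture_wb must be a fraction in [0, 1)")
    wet_t_per_dry_t = 1.0 / (1.0 - moisture_wb)
    return rate_per_wet_t_km * distance_km * wet_t_per_dry_t

for mc in (0.10, 0.20, 0.30, 0.50):
    print(f"moisture {mc:.0%}: ${haul_cost_per_dry_t(80, mc):.2f} per dry t over 80 km")
```

At 50% moisture, the cost per dry ton is double that of bone-dry material. For low-density feedstocks that fill a trailer by volume before reaching the weight limit, the moisture penalty is roughly smaller, and densification matters more.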

Supply Chain and Operational Planning

Q: How does year-to-year variability in biomass yield affect our biorefinery's economic viability, and how can we design a more resilient supply chain?

A: Annual fluctuations in biomass production, often driven by drought and other climatic factors, pose a significant risk. When supply is insufficient, biofuel production decreases while fixed operating costs remain, leading to higher per-unit costs. Excess supply results in added storage costs [16] [2].

- Diagnosis & Solution:

- Model Temporal Variability: Incorporate multi-year historical data on drought indices and biomass yields into supply chain planning rather than relying on single-year averages. This prevents underestimating long-term delivery costs [2].

- Develop Flexible Sourcing: Consider a multi-feedstock strategy that blends different biomass types (e.g., corn stover with sorghum or switchgrass). This diversifies supply risk across crops with different harvest windows and environmental resilience [14] [2].

- Utilize Intermediate Depots: Investigate a distributed supply system with preprocessing depots that can densify biomass (e.g., into pellets) for more economical long-distance transport and as a buffer against supply shocks [16].
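Single-year averages underestimate cost because of convexity. Suppose the plant must expand its draw area in poor years to meet demand. Haul distance, and therefore cost per ton, then grows roughly with the inverse square root of yield density. As a result, the average of yearly costs exceeds the cost evaluated at the average yield (Jensen's inequality). The minimal sketch below assumes a circular draw area, uniformly spread supply, and hypothetical yields and rates.

```python
import math
import statistics

def haul_cost_per_t(demand_t, yield_density, rate_per_t_km=0.15, tortuosity=1.3):
    """Average haul cost ($/dry t) for a circular draw area with uniform supply.

    demand_t: dry t/yr the plant must source; yield_density: dry t/km^2/yr available.
    The mean straight-line distance from a uniform point in a disk of radius r to its
    centre is 2r/3; tortuosity converts that to road distance.
    """
    radius_km = math.sqrt(demand_t / (math.pi * yield_density))
    return rate_per_t_km * tortuosity * (2.0 / 3.0) * radius_km

demand = 800_000  # dry t/yr (hypothetical)
yearly_yield = [42.0, 18.0, 40.0, 35.0, 12.0, 45.0, 38.0, 20.0, 41.0, 36.0]  # hypothetical

cost_at_mean_yield = haul_cost_per_t(demand, statistics.mean(yearly_yield))
mean_yearly_cost = statistics.mean(haul_cost_per_t(demand, y) for y in yearly_yield)
print(f"cost at 10-year average yield:   ${cost_at_mean_yield:.2f}/t")
print(f"10-year average of yearly costs: ${mean_yearly_cost:.2f}/t")
```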

Q: We are facing frequent equipment wear and unplanned downtime. Could feedstock variability be a contributing factor?

A: Yes. Variability in biomass physical properties, such as increased abrasive inorganic (ash) content, is a primary cause of equipment wear in handling and preprocessing machinery like grinders and conveyors [15] [17].

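- Diagnosis & Solution:

- Monitor Abrasive Content: Track the ash content of incoming biomass with rapid analytical techniques (e.g., NIR spectroscopy) so that high-ash lots are identified before they reach grinders and conveyors [15] [2].

- Reduce Ash Upstream: Apply ash-reduction preprocessing such as air classification to high-ash material to limit abrasive wear in downstream equipment [15].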

Process Scale-Up and Control

Q: What are the critical challenges when scaling up a biorefinery process from pilot to demonstration scale?

A: Scaling up introduces complex interdependencies. Key challenges include managing feedstock variability at a larger volume, selecting appropriately scaled equipment, overcoming changes in reaction kinetics and heat/mass transfer due to different volume-to-surface ratios, and ensuring process robustness and control [18].

- Diagnosis & Solution:

- Pilot Plant Testing: Utilize demo-scale facilities (e.g., Borregaard's Biorefinery Demo plant, Bio Base Europe Pilot Plant) to test your process with variable, real-world feedstocks [18].

- Process Optimization: Employ statistical methods like Response Surface Methodology (RSM) to identify the optimal combination of process variables (e.g., temperature, pH, chemical loadings) for consistent performance at larger scales [18].

- Develop Advanced Control Strategies: Implement a control strategy that includes both in-line process control and off-line analysis to maintain product quality despite feedstock variations [18].
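In RSM, the core calculation after fitting a second-order model to a designed experiment (e.g., a central composite design) is locating the model's stationary point. In the standard matrix form, x is the vector of coded process variables (e.g., temperature, pH, chemical loading), and b holds the fitted linear coefficients. B is a symmetric matrix with the pure quadratic coefficients on its diagonal and half of each interaction coefficient off the diagonal:

```latex
\hat{y} = \beta_0 + \mathbf{x}^{\top}\mathbf{b} + \mathbf{x}^{\top}\mathbf{B}\,\mathbf{x},
\qquad
\frac{\partial \hat{y}}{\partial \mathbf{x}} = \mathbf{b} + 2\mathbf{B}\,\mathbf{x} = \mathbf{0}
\;\Longrightarrow\;
\mathbf{x}_s = -\tfrac{1}{2}\,\mathbf{B}^{-1}\mathbf{b}
```

If all eigenvalues of B are negative, the stationary point is a maximum (e.g., of sugar yield). Mixed signs indicate a saddle point, and ridge analysis is then the usual next step.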

The following tables consolidate key quantitative findings on the impacts of feedstock variability.

Table 1: Impact of Biomass Attributes on Production Cost and Optimal Scale [10]

| Biomass Attribute | Impact on Production Cost | Impact on Optimal Biorefinery Scale |

|---|---|---|

| Moisture Content | Dominant cost driver; increases transportation expenses. | Strongly influences the optimal scale, which differs for each feedstock. |

| Spatial Fragmentation | Increases logistics costs and sourcing distances. | Limits resource consolidation, constraining maximum viable scale. |

| Resource Yield Density | Higher density reduces cost per ton and improves competitiveness. | Enables larger, more cost-effective industrial-scale operations. |

Table 2: Sugar Yield and Production Cost from Different Feedstocks and Pretreatments [14]

| Feedstock | Pretreatment Method | Glucose Yield (%) | Sugar Production Cost ($/lb) |

|---|---|---|---|

| Single-Pass Corn Stover (SPCS) | DDA | 91.0 | 0.2286 |

| Single-Pass Corn Stover (SPCS) | DMR | 95.3 | 0.2490 |

| Multi-Pass Corn Stover (MPCS) | DDA | Lower than SPCS | Higher than SPCS |

| Sorghum (SG) | DDA | Lower than SPCS | Higher than SPCS |

| Switchgrass (SW) | DDA | Lower than SPCS | Higher than SPCS |

| Feedstock Blends | DDA & DMR | ~Weighted average of constituents | ~Weighted average of constituents |

Table 3: Economic and Environmental Impact Range for a Pyrolysis Biorefinery [19]

| Metric | Range |

|---|---|

| Minimum Sugar Selling Price (MSSP) | $66 - $280 per Metric Ton |

| Net Greenhouse Gas (GHG) Emissions | -0.56 to -0.74 kg CO₂e per kg biomass processed |

Detailed Experimental Protocols

Protocol 1: Evaluating Feedstock Blends using Deacetylation and Dilute Acid (DDA) Pretreatment

Objective: To determine the interactive effects of blending different biomass species on sugar yield and production costs under standardized DDA pretreatment conditions [14].

Materials:

- Feedstocks: Single-pass corn stover (SPCS), multi-pass corn stover (MPCS), switchgrass (SW), sorghum (SG).

- Equipment: 90-L paddle reactor, knife mill, enzymatic hydrolysis reactors.

- Reagents: Sodium hydroxide (NaOH), dilute acid (e.g., H₂SO₄), commercial enzyme cocktails (e.g., Novozymes Cellic CTec3/HTec3).

Methodology:

- Feedstock Preparation: Size-reduce all feedstocks to pass a 19.1-mm screen using a knife mill.

- Blend Formulation: Create bi-blends (e.g., 60/40 MPCS/SPCS) and quad-blends (e.g., 25/35/35/5 MPCS/SPCS/SW/SG) based on dry weight.

- Deacetylation: Load 5 kg (dry weight) of biomass into the paddle reactor with 45 kg of NaOH solution. Perform at varying severities (e.g., 50, 100, 150 kg NaOH per oven-dry metric ton of biomass, ODMT) at 80°C for 2 hours.

- Dilute Acid Pretreatment: Transfer deacetylated solids to a pretreatment reactor and process with dilute acid at optimal conditions (e.g., 158°C, 5.2 min, 0.98% H₂SO₄).

- Enzymatic Hydrolysis: Perform hydrolysis on the pretreated slurry at 20% solids content with an enzyme loading of 12 mg total protein/g glucan (80:20 CTec3:HTec3) for 7 days.

- Analysis: Quantify monomeric sugar concentrations (glucose, xylose) via HPLC. Calculate percent theoretical yields.
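The protocol's bookkeeping (blend masses, NaOH charge, enzyme dose, and percent theoretical glucose yield) can be scripted as a minimal sketch. The function names are hypothetical. The 80:20 CTec3:HTec3 split is assumed to be on a protein basis. The 110 g glucan and 111.5 g glucose figures are illustrative, not results. The yield calculation uses the standard hydration factor of 180.16/162.14 (about 1.111) to convert anhydroglucose in glucan to free glucose.

```python
GLUCAN_TO_GLUCOSE = 180.16 / 162.14  # hydration factor, ~1.111

def blend_masses(total_dry_kg, fractions):
    """Dry mass of each component for a blend defined by dry-weight fractions."""
    if abs(sum(fractions.values()) - 1.0) > 1e-6:
        raise ValueError("blend fractions must sum to 1")
    return {name: total_dry_kg * f for name, f in fractions.items()}

def naoh_charge_kg(dry_biomass_kg, loading_kg_per_odmt):
    """NaOH mass for a loading given in kg NaOH per oven-dry metric ton (ODMT)."""
    return dry_biomass_kg / 1000.0 * loading_kg_per_odmt

def enzyme_split_g(glucan_g, mg_per_g_glucan=12.0, ctec_fraction=0.8):
    """Grams of CTec3 and HTec3 protein for a total loading split 80:20 by protein."""
    total_g = glucan_g * mg_per_g_glucan / 1000.0
    return total_g * ctec_fraction, total_g * (1.0 - ctec_fraction)

def glucose_yield_pct(glucose_g, glucan_g):
    """Percent of theoretical glucose, correcting glucan mass for water of hydrolysis."""
    return 100.0 * glucose_g / (glucan_g * GLUCAN_TO_GLUCOSE)

quad = blend_masses(5.0, {"MPCS": 0.25, "SPCS": 0.35, "SW": 0.35, "SG": 0.05})
for loading in (50, 100, 150):
    print(f"{loading} kg/ODMT -> {naoh_charge_kg(5.0, loading):.2f} kg NaOH in the 45 kg solution")
ctec, htec = enzyme_split_g(110.0)  # e.g. 110 g glucan in the hydrolysis charge
print(quad, round(ctec, 3), round(htec, 3), round(glucose_yield_pct(111.5, 110.0), 1))
```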

Protocol 2: Techno-Economic Analysis (TEA) Incorporating Feedstock Variability

Objective: To quantify the impact of biomass attribute variability on biorefinery production costs and optimal scale using a bottom-up modeling framework [10] [19].

Materials:

- Data: GIS data on biomass spatial distribution and yield, feedstock compositional data, biorefinery process model, capital and operating cost data.

- Software: Process modeling software (e.g., Aspen Plus), statistical analysis software, machine learning libraries (e.g., for Generative Adversarial Networks).

Methodology:

- Feedstock Data Generation: Use machine learning models (e.g., Generative Adversarial Networks, Kernel Density Estimation) to generate a large, representative dataset of feedstock biochemical compositions (cellulose, hemicellulose, lignin) from a smaller empirical sample set [19].

- Process Simulation: For each feedstock composition in the dataset, run a process simulation (e.g., in Aspen Plus) to determine mass and energy balances, product yields, and utility demands [19].

- Cost Calculation: Calculate total production cost for each scenario, incorporating feedstock cost (including logistics based on spatial fragmentation and moisture), capital depreciation, operating costs, and conversion efficiency [10].

- Optimization & Sensitivity Analysis: Determine the biorefinery scale that minimizes the per-unit production cost for each feedstock type. Perform sensitivity analysis to identify the most influential cost drivers (e.g., moisture content, carbohydrate yield) [10].
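The following is a compressed sketch of steps 1, 3 and 4, with a toy cost model standing in for a full process simulation. Synthetic compositions are drawn from a Gaussian KDE as a smoothed bootstrap, using per-component bandwidths from Scott's rule. This ignores correlations between components, which a full-covariance KDE or a GAN would retain. Capital cost follows the six-tenths scaling rule, and feedstock is hauled from a circular draw area whose radius grows with plant size. Every parameter value and function name is hypothetical.

```python
import math
import random
import statistics

def kde_resample(samples, n, rng):
    """Smoothed bootstrap from a Gaussian KDE with diagonal bandwidths (Scott's factor)."""
    m, dims = len(samples), len(samples[0])
    factor = m ** (-1.0 / (dims + 4))
    bw = [factor * statistics.stdev([s[d] for s in samples]) for d in range(dims)]
    draws = []
    for _ in range(n):
        base = rng.choice(samples)
        draws.append(tuple(max(0.0, base[d] + rng.gauss(0.0, bw[d])) for d in range(dims)))
    return draws

def unit_cost(capacity_t, carb_frac, moisture_wb, yield_density, capex_ref=300e6,
              cap_ref=700_000, scale_exp=0.6, annual_capital_frac=0.15,
              farmgate=45.0, haul_rate=0.15, tortuosity=1.3):
    """Hypothetical $ per ton of carbohydrate for a plant of capacity_t dry t/yr."""
    capex = capex_ref * (capacity_t / cap_ref) ** scale_exp      # six-tenths scaling
    capital_per_t = capex * annual_capital_frac / capacity_t     # annualized capital + fixed O&M
    radius_km = math.sqrt(capacity_t / (math.pi * yield_density))
    haul_per_t = haul_rate * tortuosity * (2 / 3) * radius_km / (1 - moisture_wb)
    return (capital_per_t + farmgate + haul_per_t) / carb_frac

def best_scale(moisture_wb, yield_density, carb_frac=0.58):
    """Grid search for the capacity with the lowest unit cost."""
    grid = range(100_000, 6_000_001, 50_000)
    return min(grid, key=lambda c: unit_cost(c, carb_frac, moisture_wb, yield_density))

# Hypothetical measured (cellulose, hemicellulose, lignin) mass fractions.
observed = [(0.36, 0.22, 0.18), (0.34, 0.24, 0.17), (0.39, 0.21, 0.16),
            (0.33, 0.23, 0.20), (0.37, 0.22, 0.18), (0.35, 0.20, 0.19)]
synthetic = kde_resample(observed, 2000, random.Random(7))

scale = best_scale(moisture_wb=0.20, yield_density=50.0)
costs = sorted(unit_cost(scale, c + h, 0.20, 50.0) for c, h, _ in synthetic)
print(f"lowest-cost scale: {scale:,} dry t/yr")
print(f"5th-95th percentile cost: ${costs[100]:.0f}-${costs[1899]:.0f} per t carbohydrate")
```

In this toy model, composition rescales the unit cost without moving the optimum. Higher moisture or lower yield density, by contrast, pushes the lowest-cost scale down, which matches the qualitative pattern summarized in Table 1 above.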

Visualizations

Diagram 1: Feedstock Variability Impact on Biorefinery Viability

Diagram 2: DDA vs DMR Experimental Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Reagents and Materials for Variability Research

| Reagent/Material | Function in Experimentation |

|---|---|

| Sodium Hydroxide (NaOH) | Primary reagent for deacetylation pretreatment; removes acetyl groups (released as acetate) and part of the lignin to reduce recalcitrance [14]. |

| Dilute Sulfuric Acid (H₂SO₄) | Common catalyst for dilute acid pretreatment; hydrolyzes hemicellulose to soluble sugars [14]. |

| Commercial Enzyme Cocktails (e.g., Cellic CTec3/HTec3) | Complex mixtures of cellulases and hemicellulases for saccharification of pretreated biomass into fermentable sugars [14]. |

| Iron (II) Sulfate (FeSO₄) | A pretreatment additive in thermochemical pathways (e.g., pyrolysis) that can facilitate lignin depolymerization and increase sugar production [19]. |

| Lignocellulosic Biomass Blends | Custom-formulated mixtures of different feedstocks (e.g., corn stover, sorghum, switchgrass) used to study and mitigate supply and quality risks [14] [2]. |

The Critical Link Between Feedstock Quality and Final Product Output

Troubleshooting Common Feedstock Quality Issues

Q1: Why does my biomass feedstock cause inconsistent conversion yields and process inefficiencies?

A: Inconsistent conversion yields are frequently caused by natural variations in the biomass's chemical composition (carbohydrate, lignin, ash content) and physical properties (moisture content, particle size, density) [2]. These variations alter the reaction kinetics and mass transfer during conversion.

- Carbohydrate Variability: Fluctuations in cellulose and hemicellulose content directly impact the theoretical maximum yield of biofuels like ethanol [2]. Research on corn stover shows carbohydrate content can vary significantly year-to-year, closely linked to drought conditions [2].

- Ash Content: High ash levels, particularly in agricultural residues, can significantly increase operational costs, cause equipment wear, and reduce conversion efficiency [2].

- Moisture Content: Overly wet fuel requires more energy for drying, burns inefficiently, and reduces net energy output. Excessively dry fuel can cause temperature control issues [20].

Diagnostic Protocol: Implement a routine characterization protocol tracking these key parameters:

- Weekly sampling from incoming feedstock batches.

- Standardized laboratory analysis for structural carbohydrates and lignin (e.g., NREL/TP-510-42618).

- Rapid moisture analysis (oven-drying mass loss) and ash analysis (loss-on-ignition), or equivalent methods.
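Weekly results are easier to act on when plotted on a control chart rather than judged by eye. Below is a minimal sketch of a Shewhart individuals chart, where the 2.66 constant is 3/d2 with d2 = 1.128 for moving ranges of two. Limits are set on a baseline period, and later weeks are flagged if they fall outside those limits. The weekly ash values and function names are hypothetical.

```python
import statistics

def individuals_limits(values):
    """Shewhart individuals-chart limits: mean +/- 2.66 x average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = statistics.mean(values)
    mr_bar = statistics.mean(moving_ranges)
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar

def flag_out_of_control(values, baseline_n):
    """Set limits on the first baseline_n weeks, then flag later weeks outside them."""
    lcl, _, ucl = individuals_limits(values[:baseline_n])
    return [i for i, v in enumerate(values) if i >= baseline_n and not lcl <= v <= ucl]

weekly_ash = [4.8, 5.1, 4.6, 5.0, 4.9, 5.3, 4.7, 5.0, 5.2, 4.9, 7.9, 5.1]  # % dry basis
print(flag_out_of_control(weekly_ash, baseline_n=10))  # -> [10]
```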

Q2: What are the primary causes of biomass flow problems in handling systems, and how can I resolve them?

A: Flow obstructions like bridging, ratholing, and segregation are common in biomass due to its fibrous, irregular nature and interlocking particles [21]. These issues cause feed interruptions, leading to process instability and downtime.

Resolution Strategies:

- Material Characterization: Conduct shear cell tests to measure cohesive strength and wall friction against hopper materials. This data is essential for proper equipment design [21].

- Equipment Modifications: Install mass flow hoppers with steep, smooth walls and flow-promoting devices (e.g., vibrators, air blasters) to ensure uniform, first-in-first-out flow [21].

- Pre-processing Adjustments: Implement drying and size reduction (shredding, grinding) to achieve more uniform particle size distribution, which improves flowability [20] [21].

Q3: How does feedstock variability impact the economic viability of a biorefinery operation?

A: Feedstock variability directly impacts profitability through multiple channels [22]:

- Supply Chain Costs: Temporal and spatial variability in yield and quality increases transportation and preprocessing costs. Models show that ignoring this variability can lead to significant underestimation of true biomass delivery costs [2].

- Conversion Performance: Inconsistent biomass leads to suboptimal conversion conditions, reducing output and potentially increasing catalyst consumption or enzyme loading [23] [22].

- Operational Reliability: Unplanned downtime from feedstock handling problems or quality excursions increases maintenance costs and reduces plant availability [21].

Mitigation Approach: Develop a resilient supply chain strategy incorporating long-term (10+ years) spatial and temporal yield/quality data, considering climate variability and extreme weather events [2].

Biomass Feedstock Quality Parameters and Specifications

Table 1: Key Biomass Quality Parameters and Their Impact on Conversion Processes

| Parameter | Optimal Range/Desired Value | Impact of Deviation | Standard Test Method |

|---|---|---|---|

| Moisture Content | Typically 10-20% (w.b.) for thermal conversion [20] | High: Reduced net energy value, combustion issues [20]. Low: May cause overly rapid combustion [20]. | ASTM E871 / ASTM D4442 |

| Ash Content | <5% preferred; >10% can be problematic [2] | High: Slagging/fouling, equipment erosion, lower conversion yields, increased catalyst poisoning risk [2]. | ASTM E1755 |

| Carbohydrate (Glucan/Xylan) Content | Consistent levels are critical [2] | Low/Variable: Directly reduces theoretical biofuel yield, causes process instability [2]. | NREL/TP-510-42618 |

| Particle Size Distribution | Consistent and system-specific [20] | Too Large: Handling/feeding problems, incomplete conversion [20]. Too Small: Dust, flowability issues [21]. | ASTM E828 / ASTM E1109 |

Table 2: Common Biomass Feedstock Categories and Characteristic Challenges

| Feedstock Category | Common Examples | Characteristic Quality Challenges |

|---|---|---|

| Agricultural Residues | Corn stover, wheat straw, rice husks | High ash and silica content, seasonal availability, high spatial variability in yield and composition [22] [2]. |

| Energy Crops | Switchgrass, Miscanthus, fast-growing trees | Variable composition based on harvest time, drought stress can reduce yield and alter cell wall structure [2]. |

| Woody Biomass | Forest residues, sawmill waste | Variable moisture, bark content, potential for contaminants (soil, rocks), bridging in hoppers [23] [21]. |

| Organic Wastes | Municipal solid waste, food processing waste | Highly heterogeneous, high moisture, potential chemical contaminants, odor, and spoilage [24]. |

Experimental Protocols for Feedstock Quality Assessment

Protocol 1: Comprehensive Biomass Characterization for Conversion Suitability

Objective: To determine the proximate, ultimate, and compositional properties of a biomass feedstock sample for conversion process optimization.

Procedure:

- Representative Sampling: Collect biomass samples from multiple locations within a lot using a standardized sampling plan. For solid biofuels, follow ASTM E829.

- Sample Preparation: Air-dry samples to a stable moisture content. Mill using a knife mill and sieve to obtain a homogeneous sample with particle size <2mm.

- Moisture Content: Determine moisture content by drying in a forced-air oven at 105°C until constant mass (ASTM E871).

- Ash Content: Measure ash content by combusting a known mass of dried sample in a muffle furnace at 575°C±25°C until constant mass (ASTM E1755).

- Compositional Analysis: Quantify structural carbohydrates (glucan, xylan, arabinan), lignin, and ash using NREL's Laboratory Analytical Procedure (LAP) "Determination of Structural Carbohydrates and Lignin in Biomass" (NREL/TP-510-42618).
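The moisture and ash steps reduce to a few gravimetric formulas. Below is a minimal sketch using hypothetical weighings and function names. ASTM E1755 reports ash relative to oven-dry mass. If ash is measured on an air-dried sample, first correct that sample's mass using a separate moisture determination.

```python
def moisture_wet_basis(wet_g, oven_dry_g):
    """Percent moisture on a wet (as-received) basis from oven-drying mass loss."""
    return 100.0 * (wet_g - oven_dry_g) / wet_g

def moisture_dry_basis(mc_wet_pct):
    """Convert wet-basis moisture (%) to dry-basis moisture (%)."""
    return 100.0 * mc_wet_pct / (100.0 - mc_wet_pct)

def ash_dry_basis(crucible_g, crucible_dry_sample_g, crucible_ash_g):
    """Percent ash relative to the oven-dry sample mass placed in the crucible."""
    return 100.0 * (crucible_ash_g - crucible_g) / (crucible_dry_sample_g - crucible_g)

mc = moisture_wet_basis(50.0, 40.0)
print(mc, moisture_dry_basis(mc), round(ash_dry_basis(20.0, 22.0, 20.1), 2))  # 20.0 25.0 5.0
```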

Protocol 2: Monitoring Temporal Variability in Biomass Quality

Objective: To track and document seasonal and year-to-year variations in biomass quality linked to environmental factors.

Procedure:

- Geospatial Planning: Establish fixed sampling points across the supply region, tagged with GPS coordinates.

- Temporal Sampling: Collect biomass samples at critical physiological stages (e.g., flowering, maturity) and post-harvest over multiple years.

- Environmental Data Collection: Obtain corresponding meteorological data (e.g., precipitation, temperature, drought indices like DSCI) for the growing season and locations [2].

- Statistical Analysis: Use multivariate analysis (e.g., PCA, regression modeling) to correlate environmental factors with key quality parameters (e.g., carbohydrate content, ash).

- Model Validation: Validate predictive models with new seasonal data to refine forecasting accuracy for supply chain planning.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagents and Materials for Biomass Feedstock Quality Analysis

| Item Name | Function/Application | Technical Specification Notes |

|---|---|---|

| NREL LAP Standards | Reference procedures for compositional analysis | Provides standardized, validated methods for determining structural carbohydrates, lignin, and ash [2]. |

| Anhydrous Glucose & Xylose | HPLC calibration for sugar analysis | High-purity (>99%) standards essential for accurate quantification of hydrolysis products. |

| Sulfuric Acid (72% & 4% w/w) | Primary hydrolysis reagent in compositional analysis | High-purity grade required to minimize interference from contaminants. |

| Forced Draft Oven | Determination of moisture content and sample drying | Must maintain uniform temperature (±2°C) at 105°C per ASTM E871. |

| Muffle Furnace | Determination of ash content | Capable of maintaining 575°C±25°C with good temperature uniformity, per ASTM E1755. |

| Mechanical Sieve Shaker | Particle size distribution analysis | Equipped with a standard set of sieves for objective, reproducible size classification. |

| Drought Severity Index Data | Correlating environmental stress with biomass quality | Publicly available data (e.g., U.S. Drought Monitor) for understanding temporal variability [2]. |

Strategic Modeling and Analytical Approaches for Resilient Supply Chain Design

Frequently Asked Questions (FAQs)

1. Why is my large-scale Biomass Supply Chain (BSC) MILP model taking too long to solve? Solving large-scale MILP models for BSC optimization can be computationally challenging. Performance issues often arise from four main areas [25]:

- Poor Model Formulation: A "weak" formulation with a large gap between the MILP solution and its linear programming (LP) relaxation can cause the branch-and-bound algorithm to explore too many nodes.

- Numerical Instability: Problems with ill-conditioned data or large scaling differences between coefficients can slow down the linear programming (LP) solves at each node.

- Insufficient Cuts or Preprocessing: The solver may not be generating enough effective cutting planes to tighten the LP relaxations, or preprocessing may not be effectively reducing the problem size.

- Lack of Progress in Bounds: The solver might struggle to find good feasible solutions (improving the upper bound for minimization) or to prove optimality by raising the lower bound.

2. How can I model the impact of biomass quality variability (e.g., moisture, ash content) on my supply chain? Biomass quality attributes like moisture and ash content directly impact conversion yields, transportation costs, and pre-processing requirements [26]. To model this:

- Stochastic Programming: Develop a two-stage stochastic programming model where first-stage decisions are strategic (e.g., biorefinery locations), and second-stage decisions are tactical (e.g., transportation, quality control) based on random realizations of biomass quality [26].

- Quality-based Costing: Move beyond cost per dry ton to cost per unit of convertible carbohydrate, integrating quality-based penalties or incentives into the objective function [26].

- Yield Correlation: Incorporate data on how environmental factors like drought indices correlate with both biomass yield and key quality components like carbohydrate content [2].
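Quality-based costing can reverse supplier rankings. Below is a minimal sketch of cost per ton of convertible carbohydrate with a linear ash penalty. The penalty form, its rate, and the two lots are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Lot:
    name: str
    price_per_dry_t: float  # delivered $ per dry ton
    carb_frac: float        # convertible carbohydrate (e.g. glucan + xylan), dry-basis fraction
    ash_pct: float          # ash, % dry basis

def cost_per_t_carb(lot, ash_limit=5.0, penalty_per_ash_pt=2.0):
    """$ per ton of convertible carbohydrate, with a hypothetical linear ash penalty."""
    penalty = max(0.0, lot.ash_pct - ash_limit) * penalty_per_ash_pt
    return (lot.price_per_dry_t + penalty) / lot.carb_frac

lots = [Lot("low-price stover", 60.0, 0.52, 11.0), Lot("clean stover", 66.0, 0.60, 4.5)]
for lot in sorted(lots, key=cost_per_t_carb):
    print(f"{lot.name}: ${lot.price_per_dry_t:.0f}/dry t -> ${cost_per_t_carb(lot):.0f}/t carbohydrate")
```

The lot that is cheaper per dry ton ends up about 26% more expensive per ton of carbohydrate (roughly $138 versus $110). Cost-per-dry-ton accounting hides this reversal.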

3. What is the difference between MILP and MINLP in the context of BSC, and when should I use each? The choice depends on the nature of the relationships between variables in your supply chain model [27].

- MILP (Mixed-Integer Linear Programming): Used when all relationships are linear. It is suitable for problems like facility location, transportation routing, and capacity planning. Most BSC problems related to network design are formulated as MILPs [28] [29].

- MINLP (Mixed-Integer Nonlinear Programming): Necessary when the model contains nonlinear relationships. This is common when you are integrating the BSC network design with the optimization of conversion process variables (e.g., thermodynamic conditions in a Steam Rankine Cycle) or modeling nonlinear cost functions [27].

4. How can I make my BSC model more resilient to disruptions like wildfires or feedstock variability? A combined simulation-optimization framework is an effective approach to enhance resilience [28].

- Optimization for Planning: First, use an MILP model to generate an optimal resource allocation and logistics plan.

- Simulation for Testing: Then, use Discrete Event Simulation (DES) to test this plan against various disruptive scenarios (e.g., wildfires affecting supply nodes). This evaluates the plan's robustness using Key Performance Indicators (KPIs) [28].

- Replanning: Use the optimization model as a real-time replanning tool to generate new feasible plans when a disruption is detected or simulated [28].
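The sketch below illustrates the testing step by replaying a fixed sourcing plan against random supply-node outages and reporting demand fill rate as the KPI. It is a daily time-step simulation, a simplification of full DES with no individual trucks, queues, or event timing. The plan, outage probability, outage length, and buffer sizes are all hypothetical.

```python
import random

def simulate_fill_rate(node_quotas, daily_demand, buffer_cap, days=365,
                       p_outage=0.002, outage_days=30, seed=0):
    """Fraction of demand met by a fixed sourcing plan under random supply outages.

    Each day, every operating supply node may start an outage (e.g. a wildfire) of
    fixed length, during which it delivers nothing. A storage buffer absorbs
    shortfalls; deliveries beyond buffer capacity are assumed diverted elsewhere.
    """
    rng = random.Random(seed)
    outage_left = [0] * len(node_quotas)
    stock, served = float(buffer_cap), 0.0
    for _ in range(days):
        delivered = 0.0
        for n, quota in enumerate(node_quotas):
            if outage_left[n] == 0 and rng.random() < p_outage:
                outage_left[n] = outage_days
            if outage_left[n] > 0:
                outage_left[n] -= 1
            else:
                delivered += quota
        available = stock + delivered
        used = min(daily_demand, available)
        served += used
        stock = min(buffer_cap, available - used)
    return served / (days * daily_demand)

plan = [22.0] * 5  # hypothetical plan: five nodes, 110 t/day against 100 t/day demand
for buffer in (0, 200, 1000):
    print(buffer, round(simulate_fill_rate(plan, 100.0, buffer, seed=1), 4))
```

Because the random stream depends only on the outage state, the same seed gives every buffer size an identical disruption history, so designs can be compared on equal terms. A replanning step would re-solve the optimization model whenever an outage starts, rather than keeping the plan fixed.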

Troubleshooting Guides

Guide 1: Improving MILP Performance for BSC Models

Slow MILP performance is often due to model formulation. Identify which part of the search is stalling (lower bound, upper bound, or node throughput), then apply the matching remedy [25]:

Recommended Actions:

- If the lower bound is stagnant:

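- Tighten "Big-M" Constraints: In disjunctive constraints, use the smallest value of M that is still valid (i.e., that does not cut off any feasible solution) to make the LP relaxation tighter [25]. Use constraint-specific M values (M_i) instead of a single, large M [30].

- Add Tighter Formulations: Incorporate problem-specific valid inequalities or cutting planes. For instance, use the Dantzig-Fulkerson-Johnson formulation for routing subproblems instead of the weaker Miller-Tucker-Zemlin formulation [30]. Because the DFJ formulation has exponentially many subtour-elimination constraints, they are normally added lazily, only when a candidate solution violates them.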

- If the upper bound is stagnant:

- Employ Heuristics: Use solver-built-in heuristics (e.g., Feasibility Pump) or develop custom ones to find good-quality feasible solutions earlier in the process [25].

- If node throughput is slow:

- Improve Numerical Hygiene: Address ill-conditioning by scaling the coefficient matrix so that the non-zero coefficients are around 1. Avoid mixing very large and very small numbers in constraints [25].
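The effect of Big-M on the lower bound can be shown on a one-depot toy problem. For a flow-activation constraint such as "inflow to depot j ≤ M·y_j", the tightest valid M is the largest inflow that can actually occur, e.g., the smaller of CAP_j and the total available supply. The sketch below is a hypothetical illustration, not part of any cited model.

```python
def lp_bound(demand, unit_cost, fixed_cost, big_m):
    """LP-relaxation value of  min c*x + F*y  s.t. x = demand, x <= M*y, 0 <= y <= 1.

    With y continuous, the cheapest feasible y is demand / M, so the relaxation
    charges only that fraction of the fixed cost F.
    """
    if big_m < demand:
        raise ValueError("M must be at least the largest flow the depot can carry")
    return unit_cost * demand + fixed_cost * (demand / big_m)

demand, c, F = 50_000.0, 20.0, 2_000_000.0
integer_optimum = c * demand + F  # serving the demand forces y = 1
for m in (1e9, 1e6, 1e5, demand):
    bound = lp_bound(demand, c, F, m)
    gap = (integer_optimum - bound) / integer_optimum
    print(f"M = {m:>13,.0f}  LP bound = {bound:>11,.0f}  gap = {gap:.1%}")
```

With M set to the true maximum flow, the relaxation recovers the integer optimum. With M = 10^9, nearly the entire fixed cost vanishes from the bound, which is exactly the stagnant-lower-bound symptom described above.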

Guide 2: Incorporating Biomass Quality Variability

Ignoring the spatial and temporal variability of biomass yield and quality can lead to underestimated costs and non-robust supply chain designs [2]. Follow this methodology to integrate these critical factors.

Experimental Protocol for Data Integration [26] [2]:

- Data Collection:

- Historical Yield Data: Gather at least 10 years of historical biomass yield data for your supply region.

- Quality Parameters: Collect data on key quality attributes (e.g., carbohydrate, moisture, and ash content) correlated with the yield data.

- Climate Indices: Obtain relevant climate data, such as the Drought Severity and Coverage Index (DSCI), which is a primary factor contributing to yield and quality variability [2].

- Scenario Generation:

- Use the multi-year data to generate a set of scenarios. Each scenario represents a possible realization of yield and quality across the supply region, capturing both spatial and temporal variability.

- Assign a probability to each scenario based on historical frequency.

- Model Formulation:

- Develop a two-stage stochastic programming model.

- First-Stage Variables: Decide on strategic, here-and-now decisions (e.g., biorefinery locations, technology selection, capacity) that are fixed across all scenarios [26].

- Second-Stage Variables: Model tactical, wait-and-see decisions (e.g., biomass sourcing, transportation, pre-processing intensity) that are adaptive to each specific yield/quality scenario [26].

- Objective Function: Minimize the total cost, which includes the first-stage investment cost and the expected second-stage operational cost over all scenarios.
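The three steps can be prototyped at toy scale before building a full model. The sketch below assumes a single plant whose capacity is the only first-stage decision. Historical years are grouped into drought classes that serve as scenarios. The objective is expected profit, which is equivalent to minimizing its negative. All margins, costs, and supply figures are hypothetical. The model is solved in extensive form by enumeration; realistic instances would go to an LP/MILP solver or a decomposition such as the L-shaped method.

```python
from collections import Counter

# Hypothetical 12-year record: (drought class from DSCI, available supply in dry t).
history = [("none", 1.10e6), ("moderate", 0.90e6), ("none", 1.05e6), ("severe", 0.60e6),
           ("none", 1.12e6), ("moderate", 0.88e6), ("none", 1.08e6), ("moderate", 0.92e6),
           ("none", 1.02e6), ("severe", 0.65e6), ("none", 1.10e6), ("moderate", 0.85e6)]

def build_scenarios(records):
    """One scenario per drought class: mean supply, probability = historical frequency."""
    counts = Counter(cls for cls, _ in records)
    return [(cls, n / len(records), sum(s for c, s in records if c == cls) / n)
            for cls, n in counts.items()]

def expected_profit(capacity, scenarios, capex_per_t=35.0, local_margin=60.0,
                    import_margin=5.0):
    """Two-stage objective: capacity is the here-and-now decision; in each scenario the
    recourse runs on local supply up to capacity and fills spare capacity with imports."""
    value = -capex_per_t * capacity
    for _, prob, supply in scenarios:
        local = min(supply, capacity)
        imported = capacity - local if import_margin > 0 else 0.0
        value += prob * (local_margin * local + import_margin * imported)
    return value

scenarios = build_scenarios(history)
# The expected objective is concave and piecewise linear in capacity, so its maximum
# lies at zero or at one of the scenario supply levels (the breakpoints).
candidates = [0.0] + sorted(s for _, _, s in scenarios)
best = max(candidates, key=lambda cap: expected_profit(cap, scenarios))
for cls, prob, supply in scenarios:
    print(f"{cls:>8}: p = {prob:.2f}, supply = {supply:,.0f} dry t")
print(f"capacity maximizing expected profit: {best:,.0f} dry t/yr")
```

With these numbers the plant is sized for a moderate-drought year rather than for wet-year supply. Building for the best case would leave the extra capacity running on low-margin imports in half the years.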

Guide 3: Choosing Between MILP and MINLP for an Integrated BSC

The decision to use MILP or MINLP hinges on whether you are solely designing the supply chain or also optimizing the internal conversion process.

Decision Logic for Model Selection:

Key Considerations:

- Stick with MILP if: Your problem involves linear relationships for network design, transportation, and facility location. This includes most classic BSC optimization problems [29].

- Switch to MINLP if: You are integrating the supply chain with the optimization of the biomass conversion process itself. For example, if you are simultaneously optimizing the supply network and the operating conditions (e.g., temperature, pressure) of a Steam Rankine Cycle plant, the process model will introduce nonlinearities, necessitating an MINLP [27].

The Scientist's Toolkit: Research Reagent Solutions

This table details key computational and data resources essential for modeling and optimizing biomass supply chains.

| Item | Function in BSC Optimization |

|---|---|

| Commercial Solvers (CPLEX, Gurobi) | Software packages used to solve MILP models. They implement advanced versions of the branch-and-bound and branch-and-cut algorithms [25]. |

| L-Shaped Algorithm | A solution procedure for two-stage stochastic programs. It decomposes the large problem into a master problem (first-stage) and multiple subproblems (second-stage), solving them iteratively [26]. |

| Geographic Information System (GIS) | A tool for capturing and analyzing spatial data. It is critical for accurately modeling the geographical distribution of biomass, transportation routes, and potential facility locations [27]. |

| Discrete Event Simulation (DES) | A modeling technique for simulating the operation of a system as a discrete sequence of events in time. Used to test the robustness of an optimal BSC plan against disruptions like wildfires [28]. |

| Drought Severity and Coverage Index (DSCI) | A data metric that quantifies drought levels. It serves as a key input parameter for modeling the spatial and temporal variability of biomass yield and quality in a supply region [2]. |

Integrating Fixed and Portable Preprocessing Depots for Enhanced Flexibility and Cost Reduction

This technical support center provides targeted troubleshooting and methodological guidance for researchers designing and operating biomass supply chains (BMSCs) that integrate fixed and portable preprocessing depots. Framed within a broader thesis on optimizing biomass supply chains against feedstock variability, this resource addresses the key technical and logistical challenges identified in contemporary research. The following sections offer foundational concepts, detailed experimental protocols, and solutions to common operational problems to support scientists and engineers in developing more resilient and cost-effective bioenergy systems.

Conceptual Foundations and System Architecture

Core Definitions and Functions

- Fixed Depots (FDs): Permanent preprocessing facilities with consistent processing capabilities, benefiting from economies of scale and lower per-unit processing costs. They are optimally located in areas of high, consistent biomass density [31].

- Portable Depots (PDs): Mobile or temporarily sited preprocessing units that can be relocated to areas with seasonal or varying biomass availability. They provide flexibility and adaptability by preprocessing biomass near its source before delivery to energy conversion plants [31] [32].

- Preprocessing Function: Converts raw biomass (e.g., forest residues, agricultural waste) into higher-quality, more transportable intermediates like chips, pellets, briquettes, or bio-oil through operations like chipping, drying, torrefaction, or fast pyrolysis. This enhances biomass bulk density, energy density, and feedstock quality [31] [32].

System Workflow and Biomass Flow

The following diagram illustrates the typical biomass flow and decision points in a hybrid FD/PD network:

Biomass Preprocessing Workflow

Experimental Protocols and Methodologies

Protocol 1: Mixed-Integer Linear Programming (MILP) Model Formulation for Strategic Network Design

This methodology enables the optimal design of a BMSC that includes both FDs and PDs, serving as a decision support tool for both brownfield and greenfield projects in the renewable energy sector [31].

1. Objective Function Formulation:

- Primary Objective: Minimize total supply chain cost or maximize profit.

- Cost Components: The objective function must incorporate harvesting costs at watersheds (H_it), transportation costs from supply locations to depots (C_ij) and from depots to plants (C_jk), fixed costs for establishing FDs (F_j) and PDs (G_m), and preprocessing costs at depots (P_jt) [31].

2. Decision Variable Definition:

- Binary variables for FD location selection (y_j) and PD activation (z_mt).

- Continuous variables for biomass flow from supply locations to depots (x_ijt) and from depots to plants (x_jkt).

- Continuous variables for inventory levels at depots (I_jt) [31].

3. Constraint Specification:

- Biomass Availability: Total biomass shipped from a supply location i in period t must not exceed available biomass A_it.

- Demand Fulfillment: Total biomass shipped to a plant k in period t must meet demand D_kt.

- Capacity Limits: Flow through an FD j must not exceed capacity CAP_j; flow through a PD m in period t must not exceed capacity CAP_m.

- Processing Balance: Biomass inflow to a depot equals outflow plus inventory change (considering conversion factor α).

- Logical Constraints: Biomass can only flow through opened FDs or activated PDs [31].

4. Model Implementation and Solving:

- Implement the MILP model in optimization software (e.g., GAMS, CPLEX, Python with Pyomo).

- Input parameter values derived from case study data (e.g., biomass availability, distances, cost factors).

- Solve using standard MILP solvers to obtain optimal network configuration and biomass flows [31].
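Gathered into one statement, steps 1 to 3 could look like the formulation below. This is a sketch under stated assumptions, not the exact model of [31]:

- J is the set of candidate fixed-depot sites, and M is the set of portable depots.
- In the flow terms, j ranges over J ∪ M, so a portable depot m carries flows x_imt and x_mkt and inventory I_mt.
- H_it, C_ij, C_jk and P_jt are per-ton costs, and F_j is a one-time fixed cost.
- G_m is charged in each period that a portable depot is active.

```latex
\begin{aligned}
\min\; & \sum_{j\in J} F_j\,y_j \;+\; \sum_{t}\sum_{m\in M} G_m\,z_{mt}
  \;+\; \sum_{t}\sum_{i}\sum_{j\in J\cup M}\bigl(H_{it}+C_{ij}+P_{jt}\bigr)\,x_{ijt}
  \;+\; \sum_{t}\sum_{j\in J\cup M}\sum_{k} C_{jk}\,x_{jkt} \\
\text{s.t.}\; & \sum_{j\in J\cup M} x_{ijt} \le A_{it} && \forall i,\,t \\
& \sum_{j\in J\cup M} x_{jkt} \ge D_{kt} && \forall k,\,t \\
& \sum_{i} x_{ijt} \le CAP_j\,y_j && \forall j\in J,\ t \\
& \sum_{i} x_{imt} \le CAP_m\,z_{mt} && \forall m\in M,\ t \\
& \alpha \sum_{i} x_{ijt} = \sum_{k} x_{jkt} + I_{jt} - I_{j,t-1} && \forall j\in J\cup M,\ t \\
& y_j,\ z_{mt}\in\{0,1\};\quad x_{ijt},\ x_{jkt},\ I_{jt}\ge 0
\end{aligned}
```

The two capacity rows also implement the logical constraints. A closed fixed depot (y_j = 0) or an inactive portable depot (z_mt = 0) has zero capacity, so no biomass can flow into it.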

Protocol 2: Matheuristic Approach for Dynamic Operational Planning (mFLP-dOA)

This protocol combines mathematical optimization with heuristic procedures to address large-scale, dynamic procurement problems under biomass variability, implementing flexibility strategies like dynamic network reconfiguration and operations postponement [33].

1. Problem Mapping and Model Formulation:

- Formulate as a mobile Facility Location Problem with dynamic Operations Assignment (mFLP-dOA).

- Define sets for supply nodes, potential temporary intermediate node locations, and time periods.

- Incorporate decisions on opening/closing temporary intermediate nodes and postponing chipping operations from supply to intermediate nodes [33].

2. Matheuristic Procedure Development:

- Decomposition: Break the complex MIP model into smaller, manageable subproblems.

- Fix-and-Optimize Algorithm: Iteratively fix a subset of integer variables (e.g., location decisions) and solve the resulting subproblem for continuous variables and remaining integers.

- Neighborhood Search: Define neighborhoods around current solutions to explore improved configurations [33].

3. Scenario Generation and Risk Modeling:

- Generate multiple instances reflecting different risk scenarios (e.g., seasonal operation bans, wildfire prevention policies, fluctuating biomass availability).

- Incorporate spatial and temporal uncertainty in raw material availability parameters [33].

4. Performance Evaluation:

- Apply the matheuristic to real-case instances (e.g., from Central Portugal).

- Benchmark against static solutions without flexibility strategies.

- Measure key performance indicators: total cost reduction, operational responsiveness, non-productive machine time, and computational time [33].
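The fix-and-optimize idea from step 2 and the static benchmark from step 4 can be sketched on a toy PD schedule. This is not the mFLP-dOA model of [33]: the cost function (`schedule_cost`), its parameters, and the supply data are hypothetical. Each site m has local supply that is cheap to handle when its PD is active and more expensive otherwise, and switching a PD on or off incurs a relocation cost. The heuristic starts from the best static schedule, frees the binary variables of one time window at a time while all others stay fixed, enumerates that window exactly, and repeats until no window improves.

```python
from itertools import product


def schedule_cost(z, supply, open_cost=20.0, move_cost=15.0, near_cost=1.0, far_cost=4.0):
    """Hypothetical cost of a PD schedule z[m][t] (1 = PD active at site m in period t)."""
    total = 0.0
    for m, row in enumerate(z):
        prev = 0
        for t, active in enumerate(row):
            total += open_cost * active                # running an active PD
            total += move_cost * abs(active - prev)    # set-up or removal when status changes
            total += supply[m][t] * (near_cost if active else far_cost)
            prev = active
    return total


def best_static(supply):
    """Benchmark without flexibility: each PD is either active in every period or never."""
    n_periods = len(supply[0])
    schedules = ([[s] * n_periods for s in choice]
                 for choice in product((0, 1), repeat=len(supply)))
    return min(schedules, key=lambda z: schedule_cost(z, supply))


def fix_and_optimize(supply, z, window=2):
    """Free the binaries of one time window at a time (all others fixed), enumerate
    them exactly, accept improvements, and sweep until no window improves."""
    n_sites, n_periods = len(supply), len(supply[0])
    best = schedule_cost(z, supply)
    improved = True
    while improved:
        improved = False
        for start in range(0, n_periods, window):
            cells = [(m, t) for m in range(n_sites)
                     for t in range(start, min(start + window, n_periods))]
            for values in product((0, 1), repeat=len(cells)):
                cand = [row[:] for row in z]
                for (m, t), v in zip(cells, values):
                    cand[m][t] = v
                cost = schedule_cost(cand, supply)
                if cost < best - 1e-9:
                    z, best, improved = cand, cost, True
    return z, best


# Hypothetical supply: site 0 is under an operations ban in periods 2-3,
# while site 1 peaks in those periods.
supply = [[40, 40, 0, 0, 40, 40], [5, 5, 30, 30, 5, 5]]
static = best_static(supply)
flexible, flex_cost = fix_and_optimize(supply, [row[:] for row in static])
static_cost = schedule_cost(static, supply)
print(f"static {static_cost:.0f}, flexible {flex_cost:.0f}, "
      f"reduction {100 * (static_cost - flex_cost) / static_cost:.1f}%")
```

Because the search starts from the best static schedule and only accepts improvements, the flexible result can never cost more than the static benchmark; in this toy instance it switches the site-0 PD off during the hypothetical ban periods. Real instances would solve each window's subproblem with a MIP solver rather than by enumeration.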

Quantitative Performance Data

Table 1: Comparative Performance of Flexible vs. Traditional Configurations

| Metric | Traditional Fixed-Only | Hybrid FD/PD Network | Source |
|---|---|---|---|
| Total Cost Reduction | Baseline | Up to 17% reduction | [33] |
| Transportation Costs | Higher (concentrated flow) | Reduced via localized preprocessing | [31] [32] |
| Feedstock Aggregation | Limited by FD catchment | Maximizes aggregated volumes | [31] |
| Responsiveness to Variability | Low | High (dynamic reconfiguration) | [33] |

Table 2: Key Parameters for Biomass Preprocessing Depot Modeling

| Parameter | Description | Considerations for Modeling |
|---|---|---|
| Biomass Availability (A_it) | Quantity available at supply source i in period t | Model seasonality, growth curves, and uncertainty [32] |
| Conversion Factor (α) | Mass output/mass input after preprocessing | Account for moisture loss and densification [31] |
| FD Capacity (CAP_j) | Maximum throughput of fixed depot j | Strategic decision based on capital investment [31] |
| PD Capacity (CAP_m) | Maximum throughput of portable depot m | Tactical decision for mobile units [31] [33] |
| Relocation Cost | Cost of moving a PD between sites | Minor share of total transport costs [32] |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Computational Tools for BMSC Research

| Item / Resource | Type | Function / Application | Representative Examples / Notes |
|---|---|---|---|
| Mixed-Integer Linear Programming (MILP) Solver | Software | Core engine for solving optimization models for network design | Modeling environments GAMS and Python-Pyomo; solvers CPLEX and Gurobi [31] [32] |
| Geographic Information System (GIS) | Software/Tool | Spatial analysis for resource assessment, facility siting, and route planning | ArcGIS, QGIS; used for mapping biomass availability and transport routes [34] [32] |
| Machine Learning (ML) Libraries | Software/Library | Forecasting biomass supply/demand, optimizing real-time operations | Random Forest, Neural Networks (e.g., via Python scikit-learn, TensorFlow) [7] |
| Fast Pyrolysis Unit (Mobile/Fixed) | Physical Technology | Converts biomass to denser bio-oil for easier transport and storage | Key portable preprocessing technology; produces bio-oil, biochar, syngas [32] |
| Mobile Chipper/Densifier | Physical Technology | Portable preprocessing to increase biomass density at forest landing sites | Reduces transportation costs; used in forest biomass procurement [33] |
| Forest Residues | Biomass Feedstock | Representative feedstock for supply chain modeling | Low bulk density, high moisture content [31] [33] |
| Miscanthus | Biomass Feedstock | Representative dedicated energy crop for supply chain modeling | Modeled on marginal lands; has specific growth/yield profile [32] |

Troubleshooting Guide: Frequently Asked Questions

FAQ 1: Under what conditions is a hybrid FD/PD network superior to a fixed-only network? A hybrid configuration demonstrates superior performance, with cost reductions up to 17% [33], under these specific conditions:

- High Biomass Spatial Dispersion: Biomass resources are spread across large, geographically diverse regions [31].

- Significant Seasonal Variability: Biomass availability or accessibility fluctuates seasonally (e.g., due to harvesting seasons or weather-related operation bans) [33].

- Uncertain Supply Forecasts: Difficulty in accurately predicting the location, timing, and quantity of raw material availability [33].

FAQ 2: How do I determine the optimal number and location for Fixed Depots (FDs) in my model? The optimal FD placement is a strategic decision output by the MILP model, driven by:

- Long-Term Biomass Density: Position FDs in watersheds or regions with the highest and most stable biomass density to exploit economies of scale [31].

- Proximity to Conversion Plant: Consider distance to major bioenergy plants to minimize final transport costs for processed feedstock.

- Infrastructure Availability: Factor in existing road networks, utilities, and labor availability at potential FD sites.

FAQ 3: Our model results show high transportation costs despite using PDs. What could be the issue? High transport costs may persist due to:

- Suboptimal PD Relocation Schedule: PDs must be dynamically reconfigured. Implement operations postponement and dynamic network reconfiguration strategies, where PD locations and activation schedules are optimized across multiple time periods, not just annually [33].

- Insufficient Storage: A lack of intermediate storage capacity forces immediate transportation after preprocessing. Incorporate storage nodes to enable better shipment consolidation and wider operational time windows [32].

- Inaccurate Relocation Costing: Ensure your model accurately captures PD relocation costs based on distance travelled, though these typically represent a minor share of total transport costs [32].
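To capture relocation costs from distance travelled, one simple assumption is a fixed cost per kilometre applied to the great-circle distance between consecutive PD sites. The sketch below uses the haversine formula; `cost_per_km`, the coordinates, and the transport-cost total are hypothetical, and great-circle distance understates road distance, so GIS network distances are preferable in practice.

```python
import math


def haversine_km(a, b, radius_km=6371.0):
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * radius_km * math.asin(math.sqrt(h))


def relocation_share(pd_route, cost_per_km, biomass_transport_cost):
    """Relocation cost of one PD visiting sites in order, and its share of total transport cost."""
    km = sum(haversine_km(p, q) for p, q in zip(pd_route, pd_route[1:]))
    relocation = km * cost_per_km
    return relocation, relocation / (relocation + biomass_transport_cost)


# Hypothetical PD itinerary over three landing sites (lat, lon)
route = [(40.20, -8.40), (40.25, -8.10), (40.10, -7.90)]
cost, share = relocation_share(route, cost_per_km=3.0, biomass_transport_cost=250_000.0)
print(f"relocation cost {cost:.0f}, share {share:.2%}")
```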

FAQ 4: How can we effectively model and mitigate the risk of biomass supply variability? Incorporate the following flexibility strategies into your optimization model:

- Dynamic Network Reconfiguration: Model the ability to open and close temporary intermediate nodes (PDs) over the planning horizon to adapt to changing supply patterns [33].

- Operations Postponement: Allow the model to decide whether to preprocess biomass at the source or at an intermediate node, delaying the processing decision until more accurate supply information is available [33].

- Multi-Scenario Analysis: Run the optimization model under a range of risk scenarios (e.g., different biomass yield profiles, summer fire bans) to design a robust network that performs well across various potential futures [33] [32].
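Once candidate network designs have been evaluated under each scenario, two common ways to choose a robust design are the lowest expected cost and the lowest worst-case regret (the largest gap to the best design in any scenario). The sketch below computes both; the function name, design labels, costs, and probabilities are hypothetical, and the two criteria need not agree.

```python
def select_robust_design(cost, probs):
    """Compare candidate network designs evaluated under several supply scenarios.

    cost[design] is a list of total costs, one per scenario (e.g. from re-solving
    the operational model with that design fixed); probs are scenario weights.
    Returns (design with lowest expected cost, design with lowest maximum regret).
    """
    n = len(probs)
    best_in_scenario = [min(c[s] for c in cost.values()) for s in range(n)]
    expected = {d: sum(p * c for p, c in zip(probs, cs)) for d, cs in cost.items()}
    max_regret = {d: max(cs[s] - best_in_scenario[s] for s in range(n))
                  for d, cs in cost.items()}
    return min(expected, key=expected.get), min(max_regret, key=max_regret.get)


# Hypothetical costs under a "normal year" and a "summer fire ban" scenario
costs = {"A": [100, 200], "B": [150, 150], "C": [120, 170]}
print(select_robust_design(costs, probs=[0.8, 0.2]))  # ('A', 'C')
```

In this made-up example, design A wins on expected cost because the fire-ban scenario is unlikely, while design C hedges best against that scenario.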

FAQ 5: What is the role of Machine Learning (ML) in optimizing these hybrid supply chains? ML complements traditional optimization (MILP) by addressing specific complexities:

- Forecasting: Use ML models (e.g., Random Forest, Neural Networks) to more accurately predict biomass supply and bioenergy demand by analyzing historical data and real-time inputs like weather and market trends [7].

- Real-Time Scheduling: Apply Reinforcement Learning to handle real-time, online scheduling and routing problems with multiple constraints, an area where traditional MILP struggles [7].

- Parameter Prediction: Simplify optimization models by using ML (e.g., decision trees, SVM) to classify biomass feedstocks or predict optimal facility locations [7].

Operational Decision Framework

The following diagram outlines the decision logic for implementing flexibility strategies in response to biomass supply chain disruptions:

Flexibility Strategy Decision Logic

Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: My biomass supply chain model is computationally expensive and fails to find a solution for large-scale problems. What methods can I use?

- Problem: Standard optimization packages become impractical for large-scale biomass supply chain models due to high computational load and memory requirements [35].

- Solution: Employ a Simulation-Based Optimization approach. This hybrid method combines the evaluative power of simulation with optimization algorithms to find good solutions for large-scale problems within practical computation time, though it does not guarantee optimality [35].

- Protocol:

- Develop a discrete-event simulation model (e.g., using MATLAB/Simulink) that captures the dynamic behavior and uncertainties of your supply chain, such as variations in biomass supply and logistics [35] [36].

- Formulate an optimization model (e.g., a Mixed-Integer Linear Program) that defines the core decision variables [36].

- Integrate the models so the optimization model uses parameters estimated by the simulation model to find improved solutions iteratively [35] [36].
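A minimal sketch of the simulation-optimization loop, under stated simplifications: a time-stepped Monte Carlo model of one storage depot stands in for the discrete-event model, and a grid search over candidate storage capacities stands in for the optimization algorithm. The function names and all parameters (demand, supply distribution, cost rates) are hypothetical.

```python
import random


def simulate_cost(capacity, seed, days=365, demand=100.0, mean_supply=105.0,
                  sd_supply=30.0, capacity_cost=2.0, holding=0.5, shortage=20.0):
    """Time-stepped Monte Carlo of one depot buffering random daily biomass arrivals.

    All parameters are hypothetical. Biomass arriving when storage is full is lost.
    """
    rng = random.Random(seed)
    stock, cost = 0.0, capacity_cost * capacity
    for _ in range(days):
        stock = min(capacity, stock + max(0.0, rng.gauss(mean_supply, sd_supply)))
        delivered = min(stock, demand)
        stock -= delivered
        cost += holding * stock + shortage * (demand - delivered)
    return cost


def optimize_capacity(candidates, replications=20):
    """Grid search: pick the candidate capacity with the lowest mean simulated cost."""
    def mean_cost(capacity):
        # same seeds for every candidate (common random numbers)
        return sum(simulate_cost(capacity, seed) for seed in range(replications)) / replications
    return min(candidates, key=mean_cost)


print(optimize_capacity([0, 100, 150, 200, 300, 400]))
```

Reusing the same seeds for every candidate (common random numbers) means differences in mean cost reflect the decision rather than sampling noise. With a MILP in the loop, the simulated outputs (e.g. effective throughput) would instead update that model's parameters, as described in the protocol.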

Q2: How can I handle the high uncertainty in biomass feedstock quality and supply in my optimization model?

- Problem: Biomass feedstock varies in moisture content, ash, and supply yield, leading to inefficient and costly supply chain operations if not properly accounted for [37].

- Solution A: Implement a Two-Stage Stochastic Programming model. This approach allows you to make "here-and-now" decisions (e.g., depot locations) before uncertainty is resolved, and "wait-and-see" decisions (e.g., biomass flow) after specific scenarios (e.g., weather conditions) are known [37].

- Solution B: Develop a Data-Driven Robust Optimization model. This method uses support vector clustering (SVC) to depict uncertain sets from data, reducing conservatism and providing decision-makers with trade-off solutions based on their risk preferences [38].

- Solution C: Apply a Fuzzy Inference System (FIS). Fuzzy logic is powerful for handling imprecise data and subjective information, making it suitable for managing uncertainties in parameters like biomass moisture content during conversion processes [39] [40].
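For Solution A, the structure of a two-stage model can be shown with a small extensive-form example solved by enumeration: the depot capacity is chosen before the scenario is known, and shipments plus spot purchases are chosen after. The function names, scenario probabilities, supply levels, and prices are hypothetical, and a real model would be a stochastic MILP with many more decisions.

```python
def recourse_cost(capacity, available, demand, ship_cost, spot_price):
    """Second-stage ("wait-and-see") cost once the scenario's supply is known:
    ship what the depot and supply allow, buy any shortfall at a spot price."""
    shipped = min(capacity, available, demand)
    return ship_cost * shipped + spot_price * (demand - shipped)


def solve_two_stage(capacity_options, capex, scenarios, demand, ship_cost, spot_price):
    """First-stage ("here-and-now") depot capacity minimizing capital cost plus the
    probability-weighted recourse cost over (probability, available_supply) scenarios."""
    def expected_total(q):
        return capex * q + sum(p * recourse_cost(q, a, demand, ship_cost, spot_price)
                               for p, a in scenarios)
    best = min(capacity_options, key=expected_total)
    return best, expected_total(best)


# Hypothetical: dry year (80 t available) vs wet year (120 t), equally likely
print(solve_two_stage([0, 80, 100, 120], capex=5.0,
                      scenarios=[(0.5, 80.0), (0.5, 120.0)],
                      demand=100.0, ship_cost=10.0, spot_price=30.0))  # (100, 1700.0)
```

Here the chosen capacity of 100 t sits between the two supply outcomes: building for the dry year alone forces spot purchases in the wet year, while building for the wet year pays for capacity beyond demand.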

Q3: My strategic-level biomass supply chain plan is not feasible at the operational level. How can I ensure consistency across planning levels?

- Problem: Strategic plans that do not account for tactical (seasonal) and operational (weather, machine breakdowns) variations can be unattainable in practice [36].

- Solution: Use a Hybrid Optimization-Simulation model, specifically a Recursive Optimization-Simulation Approach (ROSA) [36].

- Protocol:

- Develop a Mixed-Integer Linear Programming (MILP) model that integrates strategic and tactical decisions [36].

- Develop a discrete-event simulation model that incorporates operational variations and constraints, such as weather-related delays and machine interactions [36].

- Couple the models recursively. The optimization model provides a solution, which the simulation model tests and validates. The results from the simulation are then used to inform and improve the optimization model in the next iteration [36].
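The recursive coupling can be sketched with deliberately simple stand-ins: the "optimization" step sizes a harvesting fleet from an assumed per-machine seasonal productivity (a MILP in the actual protocol), and the "simulation" step loses random days to weather. The simulated productivity is fed back until the plan meets demand in simulation. Function names and parameters are hypothetical.

```python
import math
import random


def optimize_fleet(demand, productivity):
    """Stand-in for the MILP step: smallest fleet meeting demand at the assumed
    per-machine seasonal productivity (tonnes)."""
    return math.ceil(demand / productivity)


def simulate_season(machines, nominal, downtime_prob, days=200, seed=0):
    """Stand-in for the simulation step: each day is lost to weather with
    probability downtime_prob; returns tonnes actually harvested."""
    rng = random.Random(seed)
    working_days = sum(1 for _ in range(days) if rng.random() >= downtime_prob)
    return machines * nominal * working_days / days


def rosa(demand, nominal, downtime_prob, max_iter=10):
    """Optimize, test the plan in simulation, and feed the simulated productivity
    back into the next optimization until the plan is operationally feasible."""
    productivity = nominal
    for iteration in range(1, max_iter + 1):
        machines = optimize_fleet(demand, productivity)
        output = simulate_season(machines, nominal, downtime_prob)
        if output >= demand:
            return machines, iteration
        if output <= 0:
            raise RuntimeError("simulation produced no working days")
        productivity = output / machines  # updated estimate for the optimizer
    raise RuntimeError("no operationally feasible plan within max_iter")


print(rosa(demand=1000.0, nominal=300.0, downtime_prob=0.3))
```

A single fixed seed keeps the loop deterministic; a real study would average over many replications and feed back richer outputs (delays, machine utilization) than one productivity figure.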

Q4: How can I optimize a specific process variable, like the grinding of biomass, which is critical for conversion efficiency?

- Problem: Biomass grinding is energy-intensive, and its outcomes (particle size, density) are critical for subsequent conversion processes but depend on multiple interacting variables [41].

- Solution: Use Response Surface Methodology (RSM) combined with a Hybrid Genetic Algorithm [41].

- Protocol:

- Design experiments to understand the impact of key process variables (e.g., corn stover moisture content and grinder speed) on response variables (e.g., bulk density, specific energy consumption) [41].

- Develop response surface models from the experimental data to draw surface plots and understand interaction effects [41].

- Optimize the response surface models using a hybrid genetic algorithm to find the parameter values (e.g., 17-19% moisture content, 47-49 Hz grinder speed) that maximize desired outcomes [41].
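The optimization step of this protocol can be sketched as follows, starting from an already fitted second-order response surface (fitting it from experimental data is omitted). The model coefficients, bounds, function names, and GA settings are hypothetical and are not the values reported in [41]. The "hybrid" part here follows one common pattern: a genetic algorithm explores the design space, then a compass (coordinate) search refines the best point it finds.

```python
import random

BOUNDS = [(10.0, 25.0), (40.0, 60.0)]  # moisture content (%), grinder speed (Hz): assumed ranges


def response_surface(moisture, speed):
    """Hypothetical second-order RSM model of a response to maximize (e.g. bulk density).
    Coefficients are illustrative only, not fitted to any published data."""
    d1, d2 = moisture - 15.0, speed - 52.0
    return 200.0 - 0.5 * d1 ** 2 - 0.3 * d2 ** 2 + 0.1 * d1 * d2


def clip(x):
    return [min(max(v, lo), hi) for v, (lo, hi) in zip(x, BOUNDS)]


def tournament(pop, f, rng):
    """Binary tournament selection: the fitter of two random individuals."""
    a, b = rng.sample(pop, 2)
    return a if f(*a) >= f(*b) else b


def hybrid_ga(f, pop_size=40, generations=60, seed=1):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    for _ in range(generations):
        nxt = [max(pop, key=lambda x: f(*x))]  # elitism: keep the current best
        while len(nxt) < pop_size:
            p, q = tournament(pop, f, rng), tournament(pop, f, rng)
            w = rng.random()
            child = [w * u + (1 - w) * v for u, v in zip(p, q)]  # blend crossover
            child = [v + rng.gauss(0.0, 0.05 * (hi - lo))       # Gaussian mutation
                     for v, (lo, hi) in zip(child, BOUNDS)]
            nxt.append(clip(child))
        pop = nxt
    best = max(pop, key=lambda x: f(*x))
    # Hybrid step: compass search around the GA's best point, halving the step
    step = 1.0
    while step > 1e-4:
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                cand = best[:]
                cand[i] += delta
                cand = clip(cand)
                if f(*cand) > f(*best):
                    best, improved = cand, True
        if not improved:
            step /= 2
    return best


moisture, speed = hybrid_ga(response_surface)
print(f"moisture {moisture:.2f} %, speed {speed:.2f} Hz")
```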

Advanced Algorithm Selection and Tuning

Table 1: Guide to Selecting and Troubleshooting AI-Driven Optimization Methods

| Method | Best Suited For | Common Challenges | Tuning Parameters & Solutions |
|---|---|---|---|